Theoretical Proof: CSA Module Maintains MIL Properties

Table of Links

Abstract and 1 Introduction

-

Related Work

2.1. Multimodal Learning

2.2. Multiple Instance Learning

-

Methodology

3.1. Preliminaries and Notations

3.2. Relations between Attention-based VPG and MIL

3.3. MIVPG for Multiple Visual Inputs

3.4. Unveiling Instance Correlation in MIVPG for Enhanced Multi-instance Scenarios

-

Experiments and 4.1. General Setup

4.2. Scenario 1: Samples with Single Image

4.3. Scenario 2: Samples with Multiple Images, with Each Image as a General Embedding

4.4. Scenario 3: Samples with Multiple Images, with Each Image Having Multiple Patches to be Considered and 4.5. Case Study

-

Conclusion and References

\ Supplementary Material

A. Detailed Architecture of QFormer

B. Proof of Proposition

C. More Experiments

B. Proof of Proposition

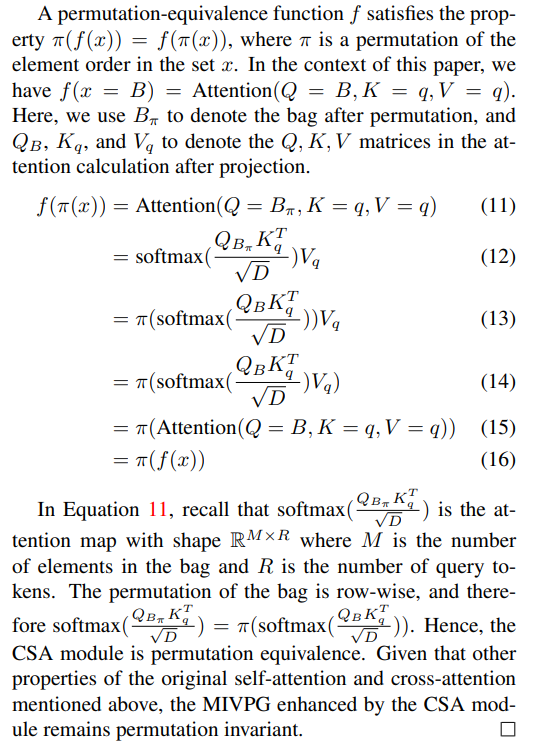

In Proposition 2, we illustrate that MIVPG, when augmented with the CSA (Correlated Self-Attention) module, maintains the crucial permutation invariance property of MIL. In this section, we provide a theoretical demonstration of this property.

\ Proof. Recall that both the original cross-attention and self-attention mechanisms have already demonstrated permutation equivalence for the visual inputs (Property 1 in [19] and Proposition 1 in the main paper). Our objective is to establish that the CSA module also maintains this permutation equivalence, ensuring that the final query embeddings exhibit permutation invariance.

\

\

:::info Authors:

(1) Wenliang Zhong, The University of Texas at Arlington (wxz9204@mavs.uta.edu);

(2) Wenyi Wu, Amazon (wenyiwu@amazon.com);

(3) Qi Li, Amazon (qlimz@amazon.com);

(4) Rob Barton, Amazon (rab@amazon.com);

(5) Boxin Du, Amazon (boxin@amazon.com);

(6) Shioulin Sam, Amazon (shioulin@amazon.com);

(7) Karim Bouyarmane, Amazon (bouykari@amazon.com);

(8) Ismail Tutar, Amazon (ismailt@amazon.com);

(9) Junzhou Huang, The University of Texas at Arlington (jzhuang@uta.edu).

:::

:::info This paper is available on arxiv under CC by 4.0 Deed (Attribution 4.0 International) license.

:::

\

You May Also Like

Unleashing A New Era Of Seller Empowerment

WIF Price Prediction: Targets $0.46 Breakout by February 2026