Advancing VLN: O3D-SIM's Role in Human-Robot Collaboration and Simulation

Table of Links

Abstract and 1 Introduction

Related Works

2.1. Vision-and-Language Navigation

2.2. Semantic Scene Understanding and Instance Segmentation

2.3. 3D Scene Reconstruction

Methodology

3.1. Data Collection

3.2. Open-set Semantic Information from Images

3.3. Creating the Open-set 3D Representation

3.4. Language-Guided Navigation

Experiments

4.1. Quantitative Evaluation

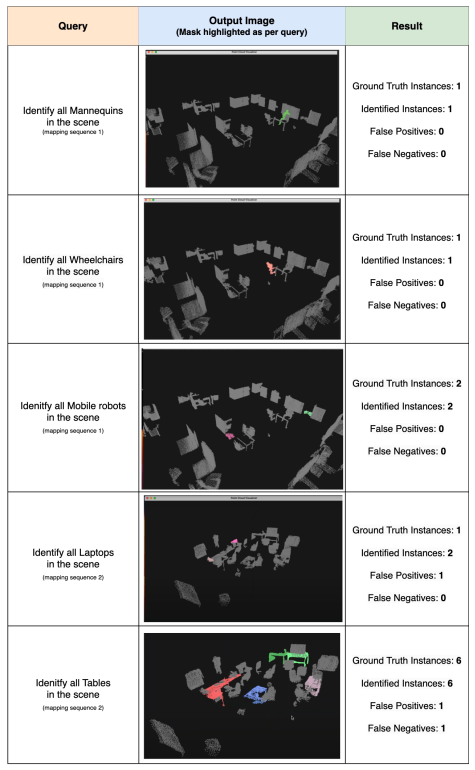

4.2. Qualitative Results

Conclusion and Future Work, Disclosure statement, and References

5. Conclusion and Future Work

In conclusion, we introduced O3D-SIM, a scene representation that builds on the concepts established in our previous work, SI-Maps. We demonstrated that an instance-level understanding of an environment enhances performance in language-guided tasks. O3D-SIM advances the 2D closed-set approach of SI-Maps to an open-set 3D representation. When combined with a Large Language Model (LLM) for natural language understanding, O3D-SIM significantly improves the success rate of executing natural language queries. This result suggests that representations akin to O3D-SIM could help robots comprehend and execute natural language commands at a level approaching that of humans.

A promising avenue for future development is integrating dynamic objects into O3D-SIM. Although the pipeline currently identifies static objects, equipping it to recognize humans and other dynamic entities would let it handle more complex queries and bring its scene understanding closer to human levels. Such an enhancement would be valuable in settings such as hospitals and household robotics, or in any context where human-robot collaboration is crucial. Another avenue for exploration is integrating 3D instance-level scene representations, such as O3D-SIM, with physics engines to transform real-life environments into life-like simulations, akin to NVIDIA Isaac Gym [40]. Because O3D-SIM distinguishes between objects at the instance level, these simulations could be modified as needed without re-recording the entire scene. This flexibility would allow varied iterations of the same life-like environment to be created.
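The idea of editing a scene per instance, rather than re-recording it, can be illustrated with a minimal sketch. This is not the O3D-SIM implementation or its data format: the `Instance` record, the `translate` helper, and the `make_variant` function are hypothetical names introduced only for illustration, and each instance is assumed to carry its own small set of 3D points.

```python
from dataclasses import dataclass, replace
from typing import Dict, Iterable, Optional, Tuple

Point = Tuple[float, float, float]


@dataclass(frozen=True)
class Instance:
    """Hypothetical record for one object instance in a 3D scene."""
    instance_id: int
    label: str
    points: Tuple[Point, ...]  # 3D points belonging to this instance only


def translate(inst: Instance, offset: Point) -> Instance:
    """Return a copy of the instance with every point shifted by offset."""
    dx, dy, dz = offset
    moved = tuple((x + dx, y + dy, z + dz) for x, y, z in inst.points)
    return replace(inst, points=moved)


def make_variant(
    scene: Dict[int, Instance],
    move: Optional[Dict[int, Point]] = None,
    remove: Iterable[int] = (),
) -> Dict[int, Instance]:
    """Build a new scene variant by moving or removing selected instances.

    Untouched instances are reused as-is, and the original scene is not
    modified, so many variants can be derived from a single recording.
    """
    move = move or {}
    removed = set(remove)
    variant: Dict[int, Instance] = {}
    for iid, inst in scene.items():
        if iid in removed:
            continue
        variant[iid] = translate(inst, move[iid]) if iid in move else inst
    return variant


# Assumed toy scene: three instances with a handful of points each.
scene = {
    0: Instance(0, "chair", ((1.0, 0.0, 0.0), (1.0, 0.5, 0.0))),
    1: Instance(1, "table", ((2.0, 0.0, 0.0),)),
    2: Instance(2, "lamp", ((3.0, 0.0, 1.0),)),
}

# Shift the chair by 0.5 along x and drop the lamp; the table is untouched.
variant = make_variant(scene, move={0: (0.5, 0.0, 0.0)}, remove={2})
```

In this sketch a variant only touches the instances named in `move` or `remove`; a physics-engine integration would additionally need collision geometry and physical properties for each instance, which are outside the scope of this example.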

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

[1] Laksh Nanwani, Anmol Agarwal, Kanishk Jain, Raghav Prabhakar, Aaron Monis, Aditya Mathur, Krishna Murthy Jatavallabhula, A. H. Abdul Hafez, Vineet Gandhi, and K. Madhava Krishna. Instance-level semantic maps for vision language navigation. In 2023 32nd IEEE International Conference on Robot and Human Interactive Communication (RO-MAN). IEEE, August 2023.

[2] Chenguang Huang, Oier Mees, Andy Zeng, and Wolfram Burgard. Visual language maps for robot navigation. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), London, UK, 2023.

[3] Sang-Min Park and Young-Gab Kim. Visual language navigation: A survey and open challenges. Artificial Intelligence Review, 56(1):365–427, 2023.

[4] Qiao Gu, Alihusein Kuwajerwala, Sacha Morin, Krishna Murthy Jatavallabhula, Bipasha Sen, Aditya Agarwal, Corban Rivera, William Paul, Kirsty Ellis, Rama Chellappa, Chuang Gan, Celso Miguel de Melo, Joshua B. Tenenbaum, Antonio Torralba, Florian Shkurti, and Liam Paull. Conceptgraphs: Open-vocabulary 3d scene graphs for perception and planning. arXiv, 2023.

[5] Julio A Placed, Jared Strader, Henry Carrillo, Nikolay Atanasov, Vadim Indelman, Luca Carlone, and José A Castellanos. A survey on active simultaneous localization and mapping: State of the art and new frontiers. IEEE Transactions on Robotics, 2023.

[6] Boyuan Chen, Fei Xia, Brian Ichter, Kanishka Rao, Keerthana Gopalakrishnan, Michael S. Ryoo, Austin Stone, and Daniel Kappler. Open-vocabulary queryable scene representations for real world planning. arXiv preprint arXiv:2209.09874, 2022.

[7] Bowen Cheng, Ishan Misra, Alexander G. Schwing, Alexander Kirillov, and Rohit Girdhar. Masked-attention mask transformer for universal image segmentation. arXiv, 2021.

[8] Kaiming He, Georgia Gkioxari, Piotr Dollár, and Ross Girshick. Mask r-cnn. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Oct 2017.

[9] Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. Learning transferable visual models from natural language supervision. In International conference on machine learning, pages 8748–8763. PMLR, 2021.

[10] Maxime Oquab, Timothée Darcet, Théo Moutakanni, Huy V. Vo, Marc Szafraniec, Vasil Khalidov, Pierre Fernandez, Daniel Haziza, Francisco Massa, Alaaeldin El-Nouby, Mido Assran, Nicolas Ballas, Wojciech Galuba, Russell Howes, Po-Yao Huang, Shang-Wen Li, Ishan Misra, Michael Rabbat, Vasu Sharma, Gabriel Synnaeve, Hu Xu, Herve Jegou, Julien Mairal, Patrick Labatut, Armand Joulin, and Piotr Bojanowski. DINOv2: Learning robust visual features without supervision. Transactions on Machine Learning Research, 2024.

[11] Matthew Chang, Theophile Gervet, Mukul Khanna, Sriram Yenamandra, Dhruv Shah, So Yeon Min, Kavit Shah, Chris Paxton, Saurabh Gupta, Dhruv Batra, Roozbeh Mottaghi, Jitendra Malik, and Devendra Singh Chaplot. Goat: Go to any thing, 2023.

[12] Peter Anderson, Qi Wu, Damien Teney, Jake Bruce, Mark Johnson, Niko Sünderhauf, Ian Reid, Stephen Gould, and Anton van den Hengel. Vision-and-language navigation: Interpreting visually-grounded navigation instructions in real environments. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2018.

[13] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz Kaiser, and Illia Polosukhin. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, NIPS'17, pages 6000–6010, Red Hook, NY, USA, 2017. Curran Associates Inc.

[14] Weituo Hao, Chunyuan Li, Xiujun Li, Lawrence Carin, and Jianfeng Gao. Towards learning a generic agent for vision-and-language navigation via pre-training. In 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 13134–13143, 2020.

[15] Y. Qiao, Y. Qi, Y. Hong, Z. Yu, P. Wang, and Q. Wu. Hop: History-and-order aware pretraining for vision-and-language navigation. In 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 15397–15406, Los Alamitos, CA, USA, June 2022. IEEE Computer Society.

[16] Minyoung Hwang, Jaeyeon Jeong, Minsoo Kim, Yoonseon Oh, and Songhwai Oh. Meta-explore: Exploratory hierarchical vision-and-language navigation using scene object spectrum grounding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023.

[17] Nandiraju Gireesh, Ayush Agrawal, Ahana Datta, Snehasis Banerjee, Mohan Sridharan, Brojeshwar Bhowmick, and Madhava Krishna. Sequence-agnostic multi-object navigation. In 2023 IEEE International Conference on Robotics and Automation (ICRA), pages 9573–9579, 2023.

[18] Margarita Grinvald, Fadri Furrer, Tonci Novkovic, Jen Jen Chung, Cesar Cadena, Roland Siegwart, and Juan Nieto. Volumetric instance-aware semantic mapping and 3d object discovery. IEEE Robotics and Automation Letters, 4(3):3037–3044, 2019.

[19] Ruben Mascaro, Lucas Teixeira, and Margarita Chli. Volumetric instance-level semantic mapping via multi-view 2d-to-3d label diffusion. IEEE Robotics and Automation Letters, 7(2):3531–3538, 2022.

[20] Yang Miao, Iro Armeni, Marc Pollefeys, and Daniel Barath. Volumetric semantically consistent 3d panoptic mapping. arXiv preprint arXiv:2309.14737, 2023.

[21] Alexander Kirillov, Eric Mintun, Nikhila Ravi, Hanzi Mao, Chloe Rolland, Laura Gustafson, Tete Xiao, Spencer Whitehead, Alexander C. Berg, Wan-Yen Lo, Piotr Dollár, and Ross Girshick. Segment anything, 2023.

[22] Kaiming He, Georgia Gkioxari, Piotr Dollár, and Ross Girshick. Mask r-cnn. In 2017 IEEE International Conference on Computer Vision (ICCV), pages 2980–2988, 2017.

[23] Alexander Kirillov, Kaiming He, Ross Girshick, Carsten Rother, and Piotr Dollár. Panoptic segmentation, 2019.

[24] Ayça Takmaz, Elisabetta Fedele, Robert W. Sumner, Marc Pollefeys, Federico Tombari, and Francis Engelmann. Openmask3d: Open-vocabulary 3d instance segmentation. In Advances in Neural Information Processing Systems (NeurIPS), 2023.

[25] Shilong Liu, Zhaoyang Zeng, Tianhe Ren, Feng Li, Hao Zhang, Jie Yang, Chunyuan Li, Jianwei Yang, Hang Su, Jun Zhu, and Lei Zhang. Grounding dino: Marrying dino with grounded pre-training for open-set object detection. arXiv, 2023.

[26] Junwen Huang, Alexey Artemov, Yujin Chen, Shuaifeng Zhi, Kai Xu, and Matthias Nießner. Ssr-2d: Semantic 3d scene reconstruction from 2d images. arXiv preprint arXiv:2302.03640, 2023.

[27] Quentin Picard, Stephane Chevobbe, Mehdi Darouich, and Jean-Yves Didier. A survey on real-time 3d scene reconstruction with slam methods in embedded systems, 2023.

[28] Ben Mildenhall, Pratul P Srinivasan, Matthew Tancik, Jonathan T Barron, Ravi Ramamoorthi, and Ren Ng. Nerf: Representing scenes as neural radiance fields for view synthesis. Communications of the ACM, 65(1):99–106, 2021.

[29] Krishna Murthy Jatavallabhula, Alihusein Kuwajerwala, Qiao Gu, Mohd Omama, Tao Chen, Shuang Li, Ganesh Iyer, Soroush Saryazdi, Nikhil Keetha, Ayush Tewari, Joshua B. Tenenbaum, Celso Miguel de Melo, Madhava Krishna, Liam Paull, Florian Shkurti, and Antonio Torralba. Conceptfusion: Open-set multimodal 3d mapping. Robotics: Science and Systems (RSS), 2023.

[30] Mathieu Labbé and François Michaud. Rtab-map as an open-source lidar and visual simultaneous localization and mapping library for large-scale and long-term online operation. Journal of Field Robotics, 36(2):416–446, 2019.

[31] Rainer Kümmerle, Giorgio Grisetti, Hauke Strasdat, Kurt Konolige, and Wolfram Burgard. G2o: A general framework for graph optimization. In 2011 IEEE International Conference on Robotics and Automation, pages 3607–3613, 2011.

[32] Tianhe Ren, Shilong Liu, Ailing Zeng, Jing Lin, Kunchang Li, He Cao, Jiayu Chen, Xinyu Huang, Yukang Chen, Feng Yan, Zhaoyang Zeng, Hao Zhang, Feng Li, Jie Yang, Hongyang Li, Qing Jiang, and Lei Zhang. Grounded SAM: Assembling open-world models for diverse visual tasks. arXiv, 2024.

[33] Youcai Zhang, Xinyu Huang, Jinyu Ma, Zhaoyang Li, Zhaochuan Luo, Yanchun Xie, Yuzhuo Qin, Tong Luo, Yaqian Li, Shilong Liu, Yandong Guo, and Lei Zhang. Recognize anything: A strong image tagging model. arXiv, 2023.

[34] Martin Ester, Hans-Peter Kriegel, Jörg Sander, Xiaowei Xu, et al. A density-based algorithm for discovering clusters in large spatial databases with noise. In KDD, volume 96, pages 226–231, 1996.

[35] OpenAI. Chatgpt. https://openai.com/blog/chatgpt.

[36] Angel Chang, Angela Dai, Thomas Funkhouser, Maciej Halber, Matthias Niessner, Manolis Savva, Shuran Song, Andy Zeng, and Yinda Zhang. Matterport3d: Learning from rgb-d data in indoor environments. arXiv, 2017.

[37] Manolis Savva, Abhishek Kadian, Oleksandr Maksymets, Yili Zhao, Erik Wijmans, Bhavana Jain, Julian Straub, Jia Liu, Vladlen Koltun, Jitendra Malik, et al. Habitat: A platform for embodied ai research. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 9339–9347, 2019.

[38] Raphael Schumann and Stefan Riezler. Analyzing generalization of vision and language navigation to unseen outdoor areas. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 7519–7532, Dublin, Ireland, May 2022. Association for Computational Linguistics.

[39] Kanishk Jain, Varun Chhangani, Amogh Tiwari, K. Madhava Krishna, and Vineet Gandhi. Ground then navigate: Language-guided navigation in dynamic scenes. In 2023 IEEE International Conference on Robotics and Automation (ICRA), pages 4113–4120, 2023.

[40] Viktor Makoviychuk, Lukasz Wawrzyniak, Yunrong Guo, Michelle Lu, Kier Storey, Miles Macklin, David Hoeller, Nikita Rudin, Arthur Allshire, Ankur Handa, et al. Isaac gym: High performance gpu-based physics simulation for robot learning. arXiv preprint, 2021.

:::info Authors:

(1) Laksh Nanwani, International Institute of Information Technology, Hyderabad, India; this author contributed equally to this work;

(2) Kumaraditya Gupta, International Institute of Information Technology, Hyderabad, India;

(3) Aditya Mathur, International Institute of Information Technology, Hyderabad, India; this author contributed equally to this work;

(4) Swayam Agrawal, International Institute of Information Technology, Hyderabad, India;

(5) A.H. Abdul Hafez, Hasan Kalyoncu University, Sahinbey, Gaziantep, Turkey;

(6) K. Madhava Krishna, International Institute of Information Technology, Hyderabad, India.

:::

:::info This paper is available on arXiv under the CC BY-SA 4.0 Deed (Attribution-ShareAlike 4.0 International) license.

:::
