Video Instance Matting: Comparing Temporal Consistency and Detail Preservation

Table of Links

Abstract and 1. Introduction

2. Related Works

3. MaGGIe

3.1. Efficient Masked Guided Instance Matting

3.2. Feature-Matte Temporal Consistency

4. Instance Matting Datasets

4.1. Image Instance Matting and 4.2. Video Instance Matting

5. Experiments

5.1. Pre-training on image data

5.2. Training on video data

6. Discussion and References

Supplementary Material

7. Architecture details

8. Image matting

8.1. Dataset generation and preparation

8.2. Training details

8.3. Quantitative details

8.4. More qualitative results on natural images

9. Video matting

9.1. Dataset generation

9.2. Training details

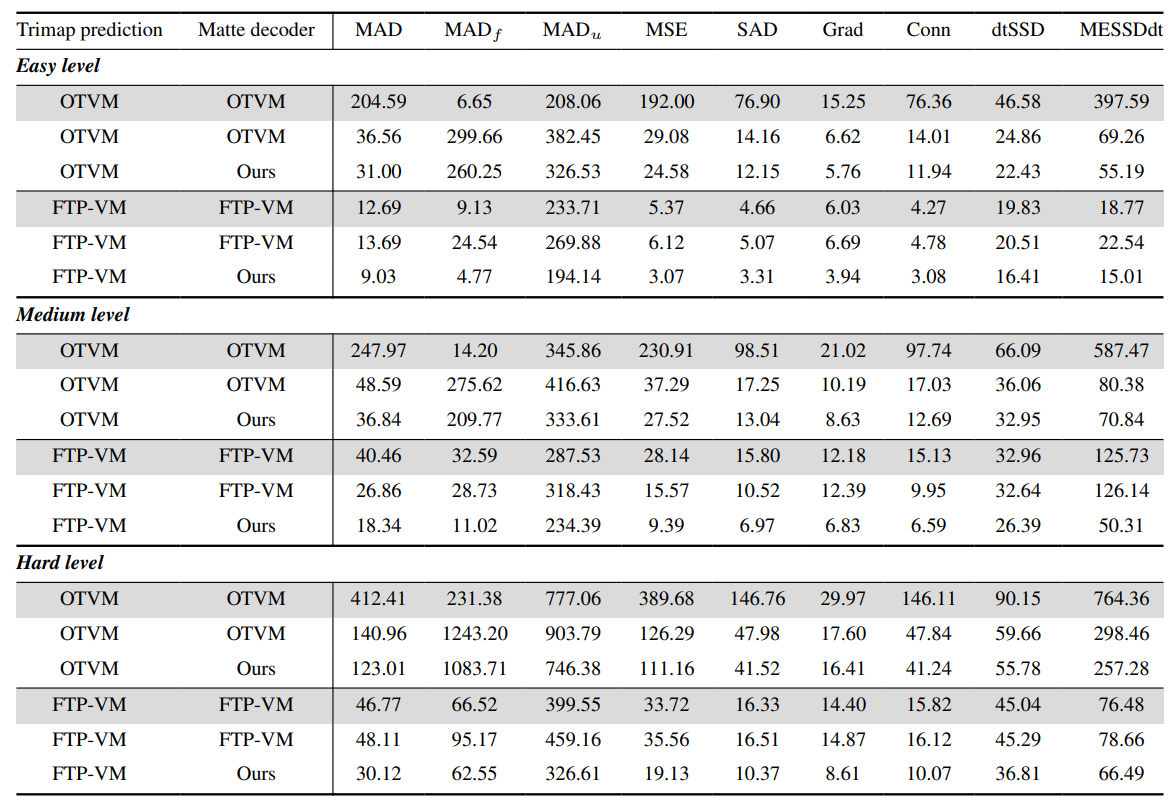

9.3. Quantitative details

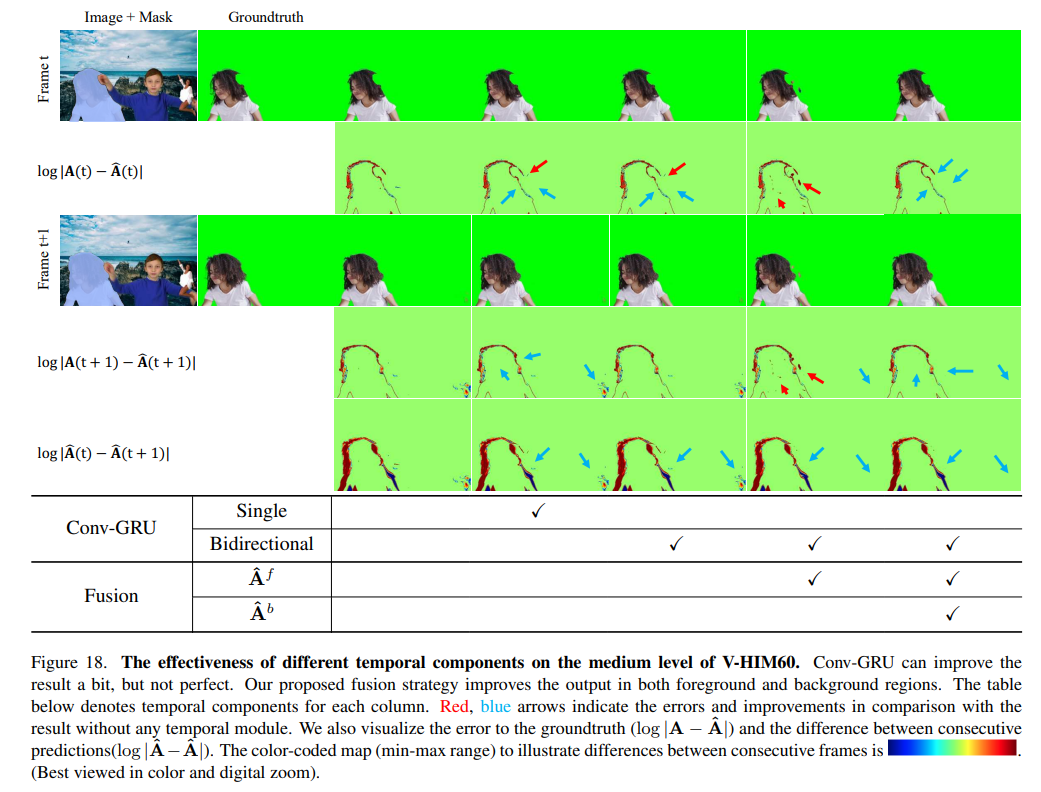

9.4. More qualitative results

9.4. More qualitative results

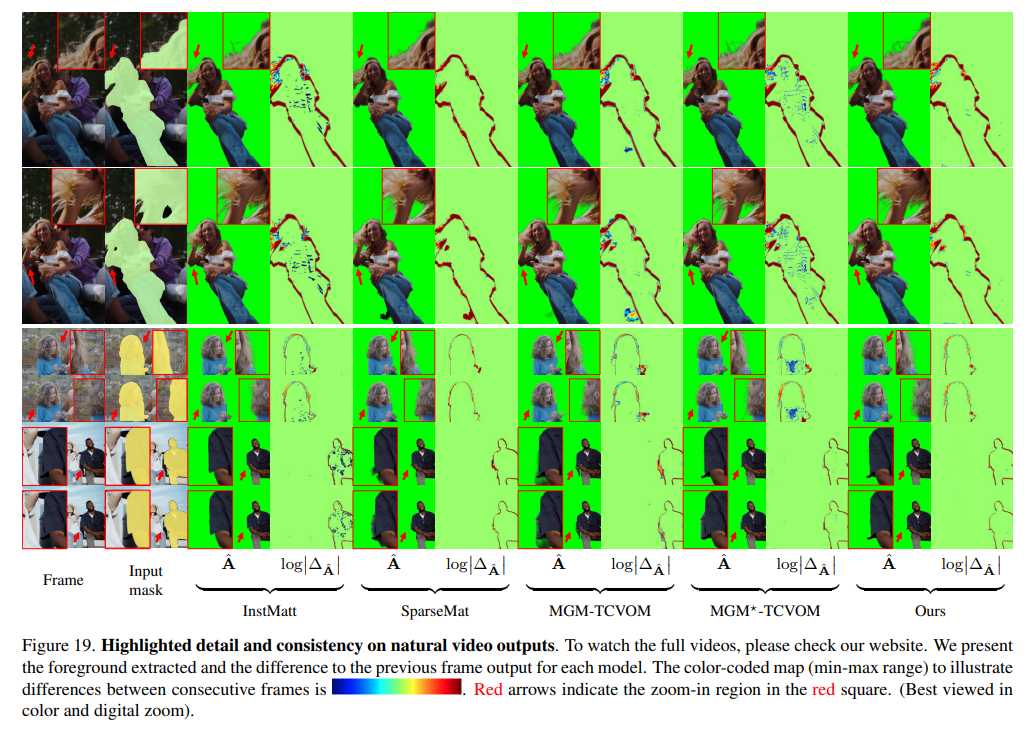

For a more immersive and detailed understanding of our model’s performance, we recommend viewing the examples on our website, which includes comprehensive results and comparisons with previous methods. Additionally, we highlight outputs from specific frames in Fig. 19.

Regarding temporal consistency, SparseMat and our framework perform comparably, but our model produces more accurate alpha mattes. Notably, our output preserves detail on par with InstMatt while keeping alpha values consistent across the video, particularly in background and foreground regions. This balance between detail preservation and temporal consistency shows that our model handles both demands of video instance matting at once.
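To make "consistent alpha values across the video" concrete, the following is a minimal sketch of one way to quantify frame-to-frame flicker. It compares the temporal change of predicted alpha mattes against the temporal change of reference mattes, in the spirit of dtSSD-style measures. The function name `temporal_diff_error`, the `(T, H, W)` array layout, and the per-pixel mean normalization are assumptions made for illustration; this is not the evaluation code used for the results discussed here.

```python
import numpy as np


def temporal_diff_error(pred, gt):
    """Illustrative temporal-consistency error (hypothetical helper).

    pred, gt: arrays of shape (T, H, W) holding alpha values in [0, 1]
    for one instance over T consecutive frames.
    Returns the mean over frame pairs of the RMS difference between
    predicted and reference frame-to-frame alpha changes.
    """
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    if pred.shape != gt.shape or pred.ndim != 3 or pred.shape[0] < 2:
        raise ValueError("expected two (T, H, W) arrays with T >= 2")

    # Temporal derivatives: how much each pixel's alpha changes per frame.
    d_pred = pred[1:] - pred[:-1]
    d_gt = gt[1:] - gt[:-1]

    # RMS mismatch of the temporal derivatives for each frame pair.
    per_pair = np.sqrt(np.mean((d_pred - d_gt) ** 2, axis=(1, 2)))
    return float(per_pair.mean())


# A static reference region (e.g. pure background) over three frames.
gt = np.zeros((3, 2, 2))

# A stable prediction versus one that flickers in the middle frame.
stable = np.zeros((3, 2, 2))
flicker = np.zeros((3, 2, 2))
flicker[1] = 0.5

stable_error = temporal_diff_error(stable, gt)
flicker_error = temporal_diff_error(flicker, gt)
```

Under this measure the stable prediction scores lower than the flickering one, even though both match the reference exactly in the first and last frames. Per-frame accuracy alone would not capture that difference.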

For each example, the first-frame human masks are generated by r101_fpn_400e and propagated by XMem for the rest of the video.

:::info Authors:

(1) Chuong Huynh, University of Maryland, College Park (chuonghm@cs.umd.edu);

(2) Seoung Wug Oh, Adobe Research (seoh@adobe.com);

(3) Abhinav Shrivastava, University of Maryland, College Park (abhinav@cs.umd.edu);

(4) Joon-Young Lee, Adobe Research (jolee@adobe.com).

:::

:::info This paper is available on arXiv under the CC BY 4.0 Deed (Attribution 4.0 International) license.

:::
