MaGGIe vs. Baselines: Quantitative Superiority in Video Instance Matting

9.3. Quantitative details

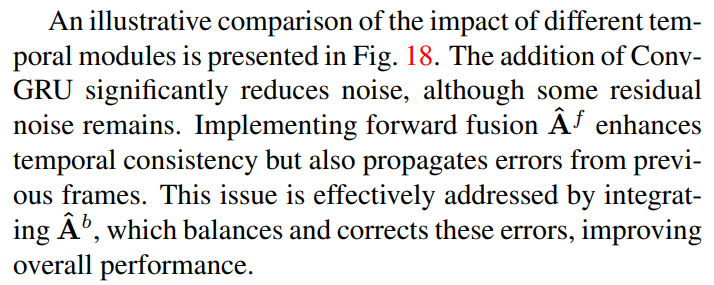

Our ablation study, detailed in Table 13, examines the temporal consistency components. The proposed combination of Bi-Conv-GRU and forward-backward fusion outperforms the other configurations across all metrics. Table 14 compares our model against previous baselines on several error metrics; our model achieves the lowest error on almost all of them.
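To make the notion of an error metric concrete, the following is a minimal sketch of two measures commonly used in the matting literature: mean absolute difference (MAD) and mean squared error (MSE) between a predicted and a ground-truth alpha matte. This section does not list the exact metrics or any scaling factors used in Table 14, so the choice of metrics, the function names, and the nested-list matte representation are assumptions made purely for illustration.

```python
# Illustrative sketch only: MAD and MSE are assumed here as typical matting
# error metrics; the exact metrics and scaling in Table 14 are not specified
# in this section.

def _flatten(matte):
    """Flatten a 2D alpha matte (list of rows, values in [0, 1])."""
    return [value for row in matte for value in row]


def mad(pred, gt):
    """Mean absolute difference between predicted and ground-truth alpha."""
    p, g = _flatten(pred), _flatten(gt)
    if len(p) != len(g):
        raise ValueError("pred and gt must have the same number of pixels")
    return sum(abs(a - b) for a, b in zip(p, g)) / len(p)


def mse(pred, gt):
    """Mean squared error between predicted and ground-truth alpha."""
    p, g = _flatten(pred), _flatten(gt)
    if len(p) != len(g):
        raise ValueError("pred and gt must have the same number of pixels")
    return sum((a - b) ** 2 for a, b in zip(p, g)) / len(p)


def video_metric(metric, pred_frames, gt_frames):
    """Average a per-frame metric over all frames of a video."""
    scores = [metric(p, g) for p, g in zip(pred_frames, gt_frames)]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    pred = [[0.0, 1.0], [0.5, 0.5]]
    gt = [[0.0, 1.0], [1.0, 0.0]]
    print("MAD:", mad(pred, gt))
    print("MSE:", mse(pred, gt))
```

Lower values indicate a matte closer to the ground truth. For video, a per-frame metric like these can be averaged over frames, as `video_metric` does; such averages do not by themselves capture temporal consistency.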


In an additional experiment, we evaluated trimap-propagation matting models (OTVM [45], FTP-VM [17]), which typically receive a trimap for the first frame and propagate it through the remaining frames. For a fair comparison with our approach, which uses an instance mask for each frame, we integrated our model with these trimap-propagation models: their trimap predictions were binarized and used as input to our model. As shown in Table 15, accuracy improves significantly when our model replaces the original matte decoder of the trimap-propagation models. This experiment underscores the flexibility and robustness of our framework, which can handle varying mask quality and different mask generation methods.
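The binarization step described above can be sketched as follows. This is a hedged illustration, not the authors' implementation: the text does not say how the unknown region of a trimap is mapped to the binary mask, so the thresholds, the `unknown_as_foreground` switch, and the function name `binarize_trimap` are all hypothetical. The sketch assumes a trimap encoded with 0 for background, 0.5 for unknown, and 1 for foreground.

```python
# Illustrative sketch only: the binarization rule is an assumption, since the
# text states only that trimap predictions "were binarized".

def binarize_trimap(trimap, unknown_as_foreground=True):
    """Convert a trimap to a binary instance mask.

    trimap: 2D list with values in [0, 1], assumed to encode
            0 = background, 0.5 = unknown, 1 = foreground.
    unknown_as_foreground: hypothetical switch; when True the unknown
            region is kept in the mask, otherwise it is dropped.
    """
    # A threshold below 0.5 keeps unknown pixels; one above 0.5 drops them.
    threshold = 0.25 if unknown_as_foreground else 0.75
    return [[1 if value >= threshold else 0 for value in row] for row in trimap]


if __name__ == "__main__":
    trimap = [
        [0.0, 0.5, 1.0],
        [0.0, 0.0, 0.5],
    ]
    print(binarize_trimap(trimap))
    print(binarize_trimap(trimap, unknown_as_foreground=False))
```

Keeping the unknown band yields a looser mask, while dropping it yields a tighter one; either way the result is a coarse per-frame binary mask of the kind the mask-guided model takes as input.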


:::info Authors:

(1) Chuong Huynh, University of Maryland, College Park (chuonghm@cs.umd.edu);

(2) Seoung Wug Oh, Adobe Research (seoh@adobe.com);

(3) Abhinav Shrivastava, University of Maryland, College Park (abhinav@cs.umd.edu);

(4) Joon-Young Lee, Adobe Research (jolee@adobe.com).

:::

:::info This paper is available on arXiv under the CC BY 4.0 (Attribution 4.0 International) license.

:::
