Hardware for Heat: The New Wave of Sustainable AI Chips in 2026

Is your AI hardware reaching a physical thermal limit just as your processing needs grow?

In 2026, air cooling is no longer enough for high-density racks that now exceed 100kW. This change has shifted the core business metric from raw speed to “tokens per watt per dollar.” To stay competitive, US enterprises are adopting microfluidic cooling and neuromorphic chips to manage rising heat and power costs.

Read on to learn how to modernize your data center for these new sustainable computing standards.

Key Takeaways:

- The “Thermal Wall” in 2026 pushed 38% of data centers to liquid cooling, which can carry nearly 4,000 times more heat than the same volume of air.

- Neuromorphic chips offer extreme efficiency, with Intel Loihi 3 operating at 1.2W and being up to 1,000 times more efficient than a GPU for real-time systems.

- The 1.58-bit BitNet architecture reduces LLM energy use by up to 82% on x86 CPUs by replacing most multiplications with simple additions, and it needs up to 16 times less memory for its weights.

- Big Tech is making a Nuclear Pivot for constant power, with Meta signing an agreement for over 2,600 megawatts to fuel high-demand AI training clusters.

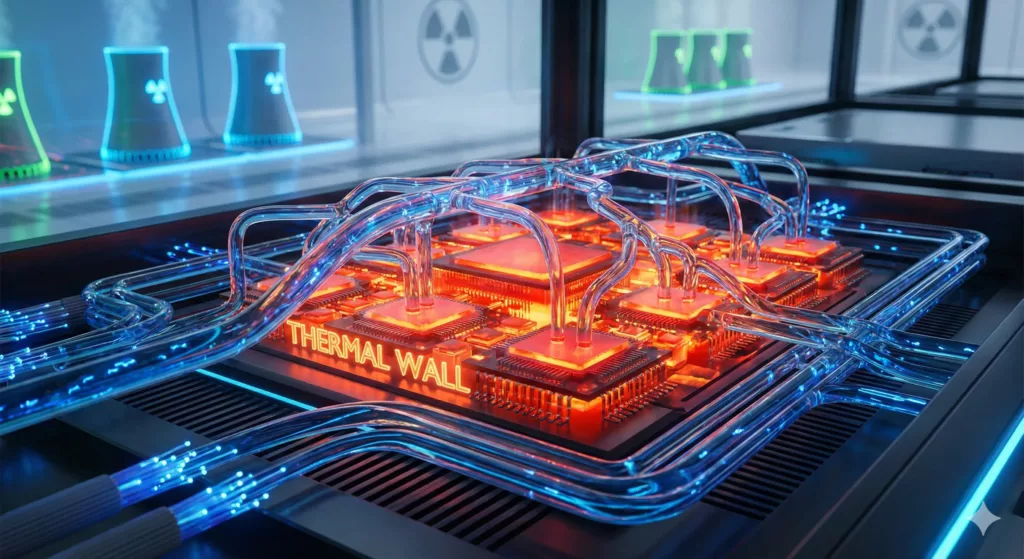

What Is The “Thermal Wall,” And Why Did Air Cooling Fail?

In early 2026, the data center industry hit the “Thermal Wall.” For decades, servers were cooled by blowing air across them. But as AI chips became more powerful, they began to generate more heat than air can physically carry away.

Today, the standard air-cooling method has reached its limit. The fix is not just better fans; it is a fundamental change in how we build the world’s digital brains.

Defining the Thermal Wall

The Thermal Wall is the point where air cooling fails due to the laws of physics. Air carries very little heat per unit volume, so removing more heat means moving far more air. To cool the latest AI chips, you would need “hurricane-force” winds: airflow so fast it would damage the equipment and be too loud for humans to stand near.

- The 1,000W Barrier: High-end AI chips have pushed well past 1,000W. In 2026, the NVIDIA Rubin generation reaches a Thermal Design Power (TDP) of 1,800W per chip, nearly double the heat of the previous generation.

- The Power Gap: Moving air at these speeds consumes so much energy that the fans themselves can use up 15-20% of a server’s total power. This creates a cycle of waste that air cooling cannot escape.
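The fan-power problem follows from the fan affinity laws, a standard engineering rule of thumb: airflow rises linearly with fan speed, but fan power rises with the cube of speed. The sketch below applies those ideal laws to a hypothetical fan that moves 100 CFM at 20 W. Real fans deviate somewhat from the ideal, so treat the numbers as illustrative.

```python
"""Fan affinity laws: how fan power scales with the airflow it delivers.

Illustrative sketch: real fans deviate from the ideal laws, and the
baseline fan (100 CFM at 20 W) is hypothetical, not vendor data.
"""


def scaled_fan(base_flow_cfm: float, base_power_w: float, speed_ratio: float) -> tuple[float, float]:
    """Return (flow, power) after scaling fan speed by `speed_ratio`.

    Ideal affinity laws: flow scales linearly with speed, static pressure
    with speed squared, and shaft power with speed cubed.
    """
    flow = base_flow_cfm * speed_ratio
    power = base_power_w * speed_ratio ** 3
    return flow, power


if __name__ == "__main__":
    # Hypothetical baseline fan: 100 CFM at 20 W.
    for ratio in (1.0, 1.5, 2.0):
        flow, power = scaled_fan(100.0, 20.0, ratio)
        print(f"speed x{ratio:.1f}: {flow:.0f} CFM, {power:.1f} W")
```

Doubling the airflow costs roughly eight times the fan power. This is why trying to keep pace with rising chip TDPs using air alone quickly turns into a cycle of waste.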

The Shift to Liquid Cooling

Liquid can carry nearly 4,000 times more heat than the same volume of air. By early 2026, 38% of global data centers had switched to liquid cooling, up from just 20% in 2024.

Liquid cooling allows for “hyper-dense” racks. Because components don’t need gaps for airflow, they can be packed tightly together. This shrinks the data center’s footprint and shortens the cabling between chips, which speeds up communication between them and makes the AI system faster.
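You can check the gap between air and liquid with the steady-state energy balance Q = ρ · V̇ · cp · ΔT. The sketch below asks how much air, and how much water, must flow past a single 1,800W chip. The fluid properties are textbook room-temperature values, and the 10 K coolant temperature rise is an assumption chosen for illustration.

```python
"""Coolant flow needed to carry a chip's heat: air vs. water.

Uses the steady-state energy balance Q = rho * V_dot * c_p * dT.
Fluid properties are textbook values near room temperature; the 10 K
coolant temperature rise is an assumption for illustration.
"""

# (density in kg/m^3, specific heat in J/(kg*K))
FLUIDS = {
    "air": (1.2, 1005.0),
    "water": (997.0, 4186.0),
}


def volumetric_flow_m3s(heat_w: float, fluid: str, delta_t_k: float) -> float:
    """Volumetric flow (m^3/s) that absorbs `heat_w` with a `delta_t_k` rise."""
    density, cp = FLUIDS[fluid]
    return heat_w / (density * cp * delta_t_k)


if __name__ == "__main__":
    chip_tdp_w = 1800.0  # per-chip TDP cited for the Rubin generation
    rise_k = 10.0        # assumed inlet-to-outlet temperature rise
    air = volumetric_flow_m3s(chip_tdp_w, "air", rise_k)
    water = volumetric_flow_m3s(chip_tdp_w, "water", rise_k)
    print(f"air:   {air * 1000:.0f} L/s")
    print(f"water: {water * 60_000:.2f} L/min")
    print(f"air needs ~{air / water:,.0f}x the volume of water")
```

With these inputs, one chip needs about 150 liters of air per second but only about 2.6 liters of water per minute. Air therefore needs roughly 3,500 times more volume. The exact ratio shifts with temperature and pressure.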

Thermal Management Technologies (2026)

The market has split into several tiers based on how much power a rack consumes:

| Technology | Max Rack Density | Typical PUE (2026) | Best Use Case |
|---|---|---|---|
| Advanced Air | < 35 kW | 1.3–1.5 | Legacy office servers |
| Direct-to-Chip (D2C) | 40–100 kW | 1.1 | Large AI Training |
| Immersion (1-Phase) | > 100 kW | 1.02 | High-Density Inference |
| Microfluidic | Extreme | ~1.01 | Next-Gen 3D Chips |
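Power Usage Effectiveness (PUE) is total facility power divided by IT equipment power. Non-IT overhead, mostly cooling and power conversion, is therefore IT load × (PUE − 1). The sketch below converts the table's PUE values into kilowatts for a hypothetical 100 kW of IT load, roughly a few racks.

```python
"""What the PUE column means in kilowatts.

PUE = total facility power / IT equipment power, so the non-IT overhead
(cooling, power conversion, lighting) is IT * (PUE - 1). The 100 kW IT
load is hypothetical; the PUE values come from the table above.
"""

TIERS = {
    "Advanced Air (upper)": 1.5,
    "Advanced Air (lower)": 1.3,
    "Direct-to-Chip": 1.1,
    "Immersion (1-Phase)": 1.02,
    "Microfluidic": 1.01,
}


def overhead_kw(it_load_kw: float, pue: float) -> float:
    """Facility power spent on everything other than the IT equipment."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_load_kw * (pue - 1.0)


if __name__ == "__main__":
    it_kw = 100.0  # hypothetical IT load
    for name, pue in TIERS.items():
        print(f"{name:<22} PUE {pue:<5} overhead {overhead_kw(it_kw, pue):5.1f} kW")
```

Moving from the top of the air-cooled range to single-phase immersion cuts overhead from about 50 kW to about 2 kW for the same IT work.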

Direct-to-Chip (D2C) vs. Immersion

D2C is the current workhorse of the industry. It uses copper plates with tiny internal “micro-fins” attached directly to the chip. Coolant flows through these plates to pull heat away at the source. This is the standard for major AI factories run by companies like Microsoft and Amazon.

Immersion cooling involves dunking the entire server into a tank of special liquid (dielectric fluid). In 2026, the industry has shifted toward Single-Phase immersion. This uses stable, non-toxic oils rather than the fluorinated “forever chemicals” (PFAS) used in many two-phase systems, which face tightening restrictions in the US and EU.

Microfluidic Cooling: The Silicon Frontier

The most radical change is Microfluidic Cooling. Instead of cooling the outside of the chip, liquid flows inside the silicon itself.

- How it works: Tiny channels, as thin as a human hair, are etched directly into the back of the silicon. This brings the coolant inside the chip itself, right next to the heat-generating circuitry.

- The Result: Microsoft lab tests show this method removes heat up to 3 times better than current cold plates and cuts the maximum temperature rise of the silicon inside a GPU by 65%. This technology is essential for “3D chips,” where memory and processors are stacked on top of each other, trapping heat in the middle layers.

What Silicon Architectures Define The 2026 AI Era?

The NVIDIA Rubin Platform and System-Level Efficiency

The NVIDIA Rubin platform, launched at CES 2026, is the new benchmark for sustainable AI. It moves beyond the chip-centric design of the Blackwell era to a fully unified “AI Factory” engine.

- Extreme Codesign: Rubin integrates the Vera CPU, the Rubin GPU, and the NVLink 6 switch into a single cohesive system. By using high-speed NVLink-C2C interconnects, the energy cost of moving data between processors has dropped by 5x.

- Tokens Per Watt: Rubin targets a 10x reduction in inference token cost compared to Blackwell. It can train massive Mixture-of-Experts (MoE) models using 4x fewer GPUs.

- Smart Schedulers: Rubin’s hardware is optimized for “sparse” MoE models, in which only a few experts are active for each token. It power-gates the inactive parts of the silicon so that idle circuits stop wasting power during reasoning tasks.
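The “tokens per watt per dollar” metric from the introduction can be made concrete. The sketch below shows one possible way to compute it; it is not an NVIDIA or industry-standard definition. Throughput divided by power gives tokens per joule, and energy cost plus amortized hardware cost gives dollars per million tokens. The `System` class and every input in the example are hypothetical placeholders.

```python
"""Turning throughput and power into 'tokens per watt' and cost per token.

One possible formulation, not an official metric. The throughput, power,
price, and hardware figures in the example are hypothetical placeholders,
not NVIDIA specifications.
"""
from dataclasses import dataclass

JOULES_PER_KWH = 3.6e6
SECONDS_PER_YEAR = 365 * 24 * 3600


@dataclass
class System:
    tokens_per_s: float    # sustained inference throughput
    power_w: float         # wall power at that throughput
    capex_usd: float       # purchase price of the system
    lifetime_years: float  # amortization period
    usd_per_kwh: float     # electricity price

    def tokens_per_joule(self) -> float:
        # (tokens/s) / (J/s): "tokens per watt" is really tokens per joule.
        return self.tokens_per_s / self.power_w

    def usd_per_million_tokens(self) -> float:
        seconds = 1e6 / self.tokens_per_s
        energy_usd = self.power_w * seconds / JOULES_PER_KWH * self.usd_per_kwh
        capex_per_s = self.capex_usd / (self.lifetime_years * SECONDS_PER_YEAR)
        return energy_usd + capex_per_s * seconds


if __name__ == "__main__":
    demo = System(tokens_per_s=10_000, power_w=10_000, capex_usd=300_000,
                  lifetime_years=4, usd_per_kwh=0.10)
    print(f"{demo.tokens_per_joule():.2f} tokens/J, "
          f"${demo.usd_per_million_tokens():.3f} per million tokens")
```

In this toy case, amortized hardware costs dominate energy costs. A large cut in token cost would therefore have to come mostly from more throughput per system rather than from cheaper electricity.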

The Rise of Wide-Bandgap Semiconductors (GaN/SiC)

To handle rack densities that now exceed 120kW, the industry has shifted to 800V High Voltage Direct Current (HVDC) power distribution. This is made possible by Wide-Bandgap materials like Gallium Nitride (GaN).

- Efficiency Gains: GaN power stages achieve over 98% efficiency. In a 100kW rack, every percentage point of conversion efficiency equals 1kW of heat, so the losses GaN avoids can reach about 2kW, the output of a large space heater, that would otherwise strain the cooling system.

- Density: GaN allows for much higher switching frequencies, which shrinks the size of Power Supply Units (PSUs). This allows power delivery to be mounted directly onto liquid-cooled cold plates alongside the processors.

- Copper Savings: Moving to 800V reduces resistive losses and allows for 45% thinner copper wiring, significantly lowering the embodied carbon of the data center’s electrical grid.
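Two pieces of arithmetic sit behind these bullets. First, conversion loss is power × (1 − efficiency). Second, for a fixed power the current is I = P / V, so resistive loss (I²R) falls with the square of the voltage. In the sketch below, the 54V comparison point is an assumption (a common in-rack busbar voltage before the move to 800V). The conductor resistance is a hypothetical value, held equal in both cases so they compare like for like.

```python
"""Power-delivery arithmetic: conversion heat and I^2 * R copper loss.

The 54 V comparison voltage is an assumption, and the conductor
resistance is a hypothetical value kept equal for both voltages.
"""


def conversion_heat_w(power_w: float, efficiency: float) -> float:
    """Heat dissipated by a power stage delivering `power_w`."""
    return power_w * (1.0 - efficiency)


def current_a(power_w: float, volts: float) -> float:
    return power_w / volts


def resistive_loss_w(power_w: float, volts: float, resistance_ohm: float) -> float:
    """I^2 * R loss in a DC conductor carrying `power_w` at `volts`."""
    i = current_a(power_w, volts)
    return i * i * resistance_ohm


if __name__ == "__main__":
    rack_w = 100_000.0
    print(f"heat at 98% efficiency: {conversion_heat_w(rack_w, 0.98):.0f} W")
    r_ohm = 0.0001  # hypothetical busbar/cable resistance
    for v in (54.0, 800.0):
        print(f"{v:>5.0f} V: {current_a(rack_w, v):7.0f} A, "
              f"loss {resistive_loss_w(rack_w, v, r_ohm):8.1f} W")
    print(f"loss ratio 800 V / 54 V: {(54.0 / 800.0) ** 2:.4f}")
```

At 800V, the same 100kW needs 125A instead of roughly 1,850A, so copper losses fall by a factor of about 220. Designers can spend part of that margin on thinner conductors rather than lower loss, and that is where copper savings come from.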

Custom ASICs and Domain Specificity

While NVIDIA leads in general training, the inference market—running models for users—is dominated by Application-Specific Integrated Circuits (ASICs).

- Hyperscale Custom Silicon: Google’s Ironwood TPU (v7), Amazon’s Trainium3, and Microsoft’s Maia 200 are designed for specific cloud workloads. By stripping away general-purpose logic, these chips consume 30–50% less power per inference than general GPUs.

- Intel Crescent Island: Intel’s 2026 entry, code-named Crescent Island, targets the “Tokens-as-a-Service” market. It features 160GB of LPDDR5X memory and is built on the Intel 18A process. By prioritizing memory bandwidth over raw compute, it provides a lower-power, cost-optimized alternative for running models like Llama 4 or GPT-5.
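Why would an inference chip favor memory bandwidth over raw compute? In single-stream decoding, every generated token has to read the model's active weights from memory. A rough upper bound is therefore tokens/s ≤ bandwidth ÷ bytes read per token. The sketch below ignores KV-cache traffic, batching, and on-chip caching. The 70B-parameter model and 1,000 GB/s bandwidth are hypothetical, not Crescent Island specifications.

```python
"""Why memory bandwidth, not raw compute, often caps inference speed.

During single-stream decoding each token streams the model's active
weights from memory, so tokens/s <= bandwidth / bytes read per token.
Ignores KV-cache traffic, batching, and caching; numbers are hypothetical.
"""


def max_decode_tokens_per_s(active_params: float, bits_per_param: float,
                            bandwidth_gb_s: float) -> float:
    bytes_per_token = active_params * bits_per_param / 8
    return bandwidth_gb_s * 1e9 / bytes_per_token


if __name__ == "__main__":
    # Hypothetical 70B-parameter dense model on a 1,000 GB/s accelerator.
    for bits in (16, 8, 4):
        tps = max_decode_tokens_per_s(70e9, bits, 1000)
        print(f"{bits:>2}-bit weights: <= {tps:5.1f} tokens/s per stream")
```

Batching many users spreads each weight read across several requests. That is one reason inference-oriented designs emphasize memory capacity and bandwidth alongside compute.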

How Does Neuromorphic Computing Achieve Brain-Like Efficiency?

Escaping the Von Neumann Bottleneck

In 2026, the data center industry is escaping the Von Neumann Bottleneck. For decades, computers have wasted energy moving data back and forth between a processor (CPU) and memory (RAM). Neuromorphic computing solves this by mimicking the human brain, where memory and processing are located in the same spot.

The 2026 Commercial Breakthrough

While neuromorphic chips were once research projects, 2026 marks their move into mass production. Two major platforms now lead the market for “brain-like” efficiency.

- Intel Loihi 3: Released in January 2026, this chip is built on a 4nm process. It features 8 million digital neurons and 64 billion synapses.

  - Graded Spikes: Unlike older chips that used simple “on/off” signals, Loihi 3 uses 32-bit “graded spikes,” so each pulse carries a numeric value rather than a bare yes/no event. This lets it pass richer data in a single pulse.

  - Efficiency: At a peak load of just 1.2W, it is up to 1,000 times more efficient than a GPU for real-time tasks like drone navigation or robotic grip control.

- IBM NorthPole: IBM has moved NorthPole into full production for 2026. This chip eliminates external RAM entirely, placing all memory directly on-chip.

  - Performance: NorthPole is 25 times more energy-efficient than the NVIDIA H100 for vision tasks like image recognition.

  - Edge Power: It can run 3-billion parameter models with sub-millisecond latency, allowing small edge servers to perform “reasoning” without a cloud connection.
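The basic unit of spiking hardware like Loihi is easy to sketch: a leaky integrate-and-fire (LIF) neuron. Its state, the membrane potential, lives right next to the logic that updates it, which is the memory-beside-compute idea described above. This is an illustration only. The decay, threshold, and inputs are hypothetical, and the sketch emits plain binary spikes. It does not model Loihi 3's graded spikes or any vendor API.

```python
"""A leaky integrate-and-fire (LIF) neuron, the basic unit of spiking hardware.

Illustrative only: decay, threshold, and inputs are hypothetical, and this
emits binary spikes. It does not model Loihi 3's graded spikes (which carry
a value with each spike) or any vendor API.
"""


def lif_run(inputs: list[float], decay: float = 0.9, threshold: float = 1.0) -> list[int]:
    """Simulate one LIF neuron over discrete time steps.

    Each step the membrane potential leaks (multiplied by `decay`), adds the
    input current, and fires (1) when it reaches `threshold`, then resets to
    zero. Only spikes are sent onward, so silent steps generate no traffic.
    """
    potential = 0.0
    spikes = []
    for current in inputs:
        potential = potential * decay + current
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0
        else:
            spikes.append(0)
    return spikes


if __name__ == "__main__":
    # Mostly silent input with a short burst in the middle.
    signal = [0.0] * 5 + [0.6, 0.6, 0.6] + [0.0] * 5
    print(lif_run(signal))
```

In this example, the neuron stays silent until the burst accumulates enough potential to fire once, then goes quiet again.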

Event-Based Processing and “Sparsity”

The secret to these energy savings is event-based processing. Standard cameras and GPUs process every pixel in every frame, even if nothing is moving. This wastes power on static backgrounds.

Neuromorphic systems only “fire” when something changes.

- Asynchronous Action: If a security camera watches an empty hallway, a neuromorphic processor consumes near-zero power. It only wakes up when it detects motion.

- Robotics Impact: The ANYmal D Neuro—a 2026 inspection robot using Loihi 3—can run for 72 hours on one charge. This is a ninefold improvement over older models that relied on power-hungry GPUs.

This “temporal sparsity” allows AI to be “always-on” without draining batteries, making it the primary choice for the 2026 “trillion-sensor” economy.
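The empty-hallway example can be sketched in a few lines. A frame-based pipeline touches every pixel of every frame. An event-based one only produces work for pixels whose brightness changed by more than a threshold. This sketch does the change detection in software for illustration; an event camera does it in each pixel's circuitry. The tiny 8×8 grayscale frames and the threshold of 10 are hypothetical.

```python
"""Event-based vs. frame-based processing on a mostly static scene.

A sketch of the idea, not of any neuromorphic chip: frames are tiny
hypothetical grayscale grids, and the change detection an event camera
performs in hardware is done here in software.
"""

Frame = list[list[int]]


def events(prev: Frame, curr: Frame, threshold: int = 10) -> list[tuple[int, int, int]]:
    """Return (row, col, polarity) for pixels that changed beyond `threshold`."""
    out = []
    for r, (prow, crow) in enumerate(zip(prev, curr)):
        for c, (p, q) in enumerate(zip(prow, crow)):
            if abs(q - p) > threshold:
                out.append((r, c, 1 if q > p else -1))
    return out


def compare(frames: list[Frame]) -> tuple[int, int]:
    """(pixels processed frame-by-frame, events emitted) over a sequence."""
    dense = sum(len(f) * len(f[0]) for f in frames[1:])
    sparse = sum(len(events(a, b)) for a, b in zip(frames, frames[1:]))
    return dense, sparse


if __name__ == "__main__":
    empty = [[50] * 8 for _ in range(8)]  # static hallway
    moved = [row[:] for row in empty]
    moved[3][4] = 200                     # something enters the scene
    dense, sparse = compare([empty] * 9 + [moved])
    print(f"frame-based pixel updates: {dense}, event-based events: {sparse}")
```

Across ten frames of a static scene with one change at the end, the frame-based path does hundreds of pixel updates. The event-based path emits a single event.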

How Do Light And Photonics Solve The I/O Energy Crisis?

The Limits of Copper

As chip logic becomes faster, the bottleneck shifts to the physical wires connecting them. In 2026, transmitting high-speed data over copper wires has reached a breaking point. Copper suffers from “insertion loss,” meaning the signal fades as it travels. To fix this, systems use “Retimers” that eat up nearly 30% of a rack’s total power.

Silicon Photonics (SiPh) and Co-Packaged Optics

Silicon Photonics replaces electrical signals with light (photons). Unlike electrons in copper, photons in a waveguide do not suffer resistive heating, and they can carry data over much longer distances with almost zero signal loss.

The big breakthrough of 2026 is Co-Packaged Optics (CPO).

- The Old Way: Optical modules were “pluggables” that you stuck into the front of a switch. This required a long copper path from the chip to the port.

- The 2026 Way: The optical engine is mounted in the same package as the GPU or switch chip. This brings the light interface within millimeters of the “brain,” cutting the power needed for data transport by 5x.

NVIDIA Spectrum-X Ethernet Photonics

NVIDIA’s Spectrum-X Ethernet Photonics, released for the Rubin platform in early 2026, is the flagship of this technology.

- Efficiency: It delivers a 3.5x to 5x improvement in power efficiency compared to traditional pluggable switches.

- Resiliency: By removing failure-prone pluggable parts, the system achieves 10x better network resiliency.

- Bandwidth: The SN6800 switch provides a staggering 409.6 Tb/s of total bandwidth. It can support up to 512 ports at 800 Gb/s in a single, space-efficient chassis.
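Optics power is roughly bandwidth × energy per bit, and a terabit per second at one picojoule per bit is exactly one watt. The sketch below checks the SN6800 port arithmetic quoted above and shows what a 5x efficiency gain means at that scale. The pJ/bit values for pluggable and co-packaged optics are hypothetical placeholders, not NVIDIA figures.

```python
"""Optics power as bandwidth times energy per bit.

Port count and per-port speed are the SN6800 figures quoted in the text;
the pJ/bit values in the example are hypothetical placeholders.
"""

PORTS = 512
GBPS_PER_PORT = 800


def aggregate_tbps(ports: int, gbps_per_port: float) -> float:
    return ports * gbps_per_port / 1000


def optics_power_w(tbps: float, pj_per_bit: float) -> float:
    # 1e12 bit/s * 1e-12 J/bit = 1 W, so Tb/s times pJ/bit is watts.
    return tbps * pj_per_bit


if __name__ == "__main__":
    total = aggregate_tbps(PORTS, GBPS_PER_PORT)
    print(f"aggregate bandwidth: {total:.1f} Tb/s")
    for label, pj in (("pluggable (assumed)", 15.0), ("co-packaged (assumed)", 3.0)):
        print(f"{label:<22} {optics_power_w(total, pj):7.0f} W")
```

Because power scales linearly with energy per bit, a 5x cut in pJ/bit removes 80% of the optics power at any fixed bandwidth.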

Why SiPh is “Green”

Silicon photonics is a “Green IT” milestone because it decouples bandwidth from power consumption. In old copper systems, doubling your speed usually meant more than doubling your power use.

In a photonic system, you can increase bandwidth simply by adding more “colors” of light (Wavelength Division Multiplexing) through the same fiber. This adds massive capacity with almost no extra energy penalty. It is the only way to connect the millions of GPUs needed for 2026 “AI Superfactories” without melting the local power grid.

How Is Software, Like The 1-Bit Revolution, Making AI Sustainable?

While hardware defines the ceiling of what is possible, software defines how much of that potential we actually reach. In 2026, the industry has shifted from “bigger is better” to “efficient is essential.”

The 1-Bit Revolution: BitNet b1.58

A major milestone in early 2026 is the widespread use of 1.58-bit Large Language Models (LLMs), based on Microsoft’s BitNet architecture. Traditional models store every “weight” in their brain as a 16-bit or 32-bit number. BitNet restricts each weight to just three values: -1, 0, or 1. A three-way choice holds log2(3) ≈ 1.58 bits of information, which is where the name comes from.

- No More Multiplication: This change is profound. Multiplying an input by -1, 0, or 1 is just a subtraction, a skip, or an addition, so the power-hungry multiplications at the heart of matrix math give way to simple addition and subtraction.

- The Energy Drop: On standard x86 CPUs, BitNet reduces energy use by up to 82%. On ARM-based chips (like those in smartphones), energy use drops by 70%.

- Memory Freedom: A 1.58-bit model needs up to 16 times less memory for its weights than a standard model. This allows a 100-billion-parameter model to run on a single high-end CPU at speeds comparable to human reading.
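The ideas above can be sketched in a few lines. This is a minimal illustration in the spirit of BitNet b1.58, not Microsoft's implementation. The weights are scaled by their mean absolute value ("absmean"), rounded, and clipped to {-1, 0, +1}. The matrix-vector product then needs only additions and subtractions, plus one multiply by the scale per output. Activation quantization and bit-packing are omitted, and the example matrix is hypothetical.

```python
"""Ternary weights turn a matrix-vector product into additions.

A sketch in the spirit of BitNet b1.58, not Microsoft's code: absmean
quantization to {-1, 0, +1}, then an add/subtract-only product. Activation
quantization and 2-bit packing are omitted; the example matrix is made up.
"""


def absmean_ternary(weights: list[list[float]], eps: float = 1e-8) -> tuple[list[list[int]], float]:
    """Scale by the mean absolute weight, then round and clip to {-1, 0, 1}.

    Python's round() sends exact halves to the nearest even integer, which
    is fine for a sketch.
    """
    flat = [abs(w) for row in weights for w in row]
    gamma = sum(flat) / len(flat)
    q = [[max(-1, min(1, round(w / (gamma + eps)))) for w in row] for row in weights]
    return q, gamma


def ternary_matvec(q: list[list[int]], gamma: float, x: list[float]) -> list[float]:
    """y = gamma * (Q @ x) with only addition and subtraction in the inner loop."""
    out = []
    for row in q:
        acc = 0.0
        for w, xi in zip(row, x):
            if w == 1:
                acc += xi
            elif w == -1:
                acc -= xi
            # w == 0: the input is skipped entirely.
        out.append(gamma * acc)
    return out


def weight_gb(params: float, bits_per_weight: float) -> float:
    """Weight storage in gigabytes (ignores packing overhead)."""
    return params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    W = [[0.9, -0.05, -1.1], [0.02, 0.8, -0.7]]
    q, gamma = absmean_ternary(W)
    print(q, round(gamma, 4))
    print(ternary_matvec(q, gamma, [1.0, 2.0, 3.0]))
    print(f"100B params: FP16 {weight_gb(100e9, 16):.0f} GB, "
          f"1.58-bit {weight_gb(100e9, 1.58):.1f} GB")
```

In practice, ternary weights are usually packed into 2 bits each. The memory saving you actually see therefore depends on the packing scheme and on the baseline precision you compare against.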

Green MLOps: The AI Energy Score

In 2026, developers no longer choose models based only on accuracy. They use the AI Energy Score, a standardized rating system launched by Hugging Face and Salesforce.

- The V2 Leaderboard: The latest update in late 2025 introduced “Reasoning” as a benchmarked task. The data revealed a harsh truth: Reasoning models use 30 times more energy on average than standard models.

- Smart Routing: Companies now use “AI Routers.” If a user asks a simple question (e.g., “What time is it?”), the router sends it to a low-energy Class A model. It only uses a power-hungry “reasoning” model for complex math or coding.

- Carbon-Aware SDKs: The Green Software Foundation has released version 1.5 of its Carbon-Aware SDK. This tool allows apps to “follow the sun.” A non-urgent AI training job will automatically pause when the local grid is powered by coal and resume when solar or wind energy is high.
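The “follow the sun” behavior amounts to time-shifting: given a forecast of grid carbon intensity, start a deferrable job in the cleanest window that fits. The sketch below illustrates that idea with a sliding-window search. It does not call the Green Software Foundation's Carbon Aware SDK. The hourly forecast (gCO2eq/kWh) and the 50 kW job are hypothetical.

```python
"""Carbon-aware time shifting: run a deferrable job in the cleanest window.

Illustrates the idea behind carbon-aware scheduling; it does not use the
Carbon Aware SDK's API. Forecast values (gCO2eq/kWh) are hypothetical.
"""


def best_window(forecast: list[float], hours: int) -> tuple[int, float]:
    """Return (start_hour, mean_intensity) of the lowest-carbon contiguous window."""
    if not 0 < hours <= len(forecast):
        raise ValueError("job length must fit inside the forecast")
    window = sum(forecast[:hours])
    best_start, best_sum = 0, window
    for start in range(1, len(forecast) - hours + 1):
        # Slide one hour: drop the oldest hour, add the next one.
        window += forecast[start + hours - 1] - forecast[start - 1]
        if window < best_sum:
            best_start, best_sum = start, window
    return best_start, best_sum / hours


def job_emissions_kg(power_kw: float, hours: int, mean_intensity_g: float) -> float:
    return power_kw * hours * mean_intensity_g / 1000


if __name__ == "__main__":
    # Hypothetical forecast: fossil-heavy early hours, solar peak mid-period.
    forecast = [520, 510, 480, 300, 180, 150, 160, 240, 430, 500]
    start, mean = best_window(forecast, hours=3)
    now = sum(forecast[:3]) / 3
    print(f"run now:           {job_emissions_kg(50, 3, now):.1f} kgCO2e")
    print(f"start at hour {start}: {job_emissions_kg(50, 3, mean):.1f} kgCO2e")
```

A real scheduler would also honor deadlines and re-check the forecast as it updates. The pause-and-resume behavior described above is this same comparison repeated every hour.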

Consumer Visibility and “Green SEO”

The environmental cost of AI is now visible to the public. As “AI Search” replaces traditional keyword search, the energy impact has become a talking point.

- Search Energy Gap: A single AI search query is estimated to consume 2.9 watt-hours (Wh), nearly 10 times the 0.3 Wh used by a standard Google search. If a user uploads a long document for the AI to “read,” that single query can jump to 40 Wh, enough to power a 10W LED bulb for four hours.
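Per-query figures become meaningful at scale. The sketch below multiplies the estimates quoted above by a daily query volume. The one-million-queries-per-day volume and the 10 W bulb are assumptions for illustration.

```python
"""Scaling the per-query energy estimates to a service's daily volume.

Per-query energies are the estimates quoted in the text; the query
volume and the 10 W LED bulb are assumptions for illustration.
"""

WH_PER_QUERY = {
    "standard search": 0.3,
    "AI search": 2.9,
    "AI + long document": 40.0,
}


def daily_mwh(queries_per_day: float, wh_per_query: float) -> float:
    return queries_per_day * wh_per_query / 1e6


def bulb_hours(wh: float, bulb_w: float = 10.0) -> float:
    return wh / bulb_w


if __name__ == "__main__":
    volume = 1_000_000  # hypothetical queries per day
    for kind, wh in WH_PER_QUERY.items():
        print(f"{kind:<20} {daily_mwh(volume, wh):6.1f} MWh/day")
    print(f"one long-document query = {bulb_hours(40.0):.0f} h of a 10 W LED bulb")
```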

- Digital Labels: In 2026, major platforms have introduced “Energy Transparency Labels” on AI tools. This has sparked a “Green SEO” movement, where developers compete to create the most “lightweight” digital experiences to earn higher rankings in sustainability-focused search filters.

What Infrastructure Changes Are Needed For A Circular AI Strategy?

As we move through 2026, the strategy for “Green AI” has shifted from simply buying offsets to changing the physical reality of the grid and the supply chain.

The Nuclear Pivot

AI training clusters run 24/7, which makes them hard to power with intermittent sources like solar and wind alone. To meet this demand, Big Tech has sparked a Nuclear Renaissance.

- The Crane Clean Energy Center: Microsoft’s landmark 20-year deal with Constellation Energy has officially put the restart of Three Mile Island Unit 1 on a fast track for 2027. In early 2026, the facility is nearly 80% staffed, and reactor operators have begun the licensing process.

- Meta and Vistra: Meta recently signed a massive 20-year agreement with Vistra for over 2,600 megawatts of nuclear power.

- Small Modular Reactors (SMRs): Google, Amazon, and Meta are now investing in “Advanced Nuclear” startups like Oklo, TerraPower, and X-energy. These SMRs are designed to be built directly next to data centers, providing carbon-free power without relying on the public grid.

Scope 3: The Manufacturing Frontier

With operational power becoming “clean,” the industry’s focus has turned to Scope 3—the carbon embedded in hardware.

- The 2nm Challenge: Creating the latest AI chips is energy-intensive. A single EUV lithography machine used to pattern 2nm chips can consume up to 1 megawatt of power.

- Tracking Embodied Carbon: In 2026, the AWS Customer Carbon Footprint Tool and Google’s Carbon Sense Suite provide real-time data on the manufacturing emissions of your specific server instances. This allows CIOs to choose hardware based on its total lifecycle impact, not just its electricity bill.
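Choosing hardware on total lifecycle impact means adding embodied (manufacturing) emissions to operational emissions, which are energy × grid carbon intensity. The result can then be priced at an internal shadow carbon rate, as the conclusion recommends. In the sketch below, every number is hypothetical, including the two servers and the $/tonne price. The cloud tools named above report their own figures in their own formats.

```python
"""Comparing servers on lifecycle carbon, optionally priced at a shadow rate.

Lifecycle emissions = embodied (Scope 3 manufacturing) + operational
(energy * grid intensity). All example values, including the shadow
price, are hypothetical.
"""
from dataclasses import dataclass

HOURS_PER_YEAR = 8760


@dataclass
class Server:
    name: str
    embodied_kg: float   # manufacturing emissions, kgCO2e
    avg_power_kw: float  # average draw in service
    years: float         # service life


def lifecycle_kg(s: Server, grid_g_per_kwh: float) -> float:
    operational_kwh = s.avg_power_kw * s.years * HOURS_PER_YEAR
    return s.embodied_kg + operational_kwh * grid_g_per_kwh / 1000


def shadow_cost_usd(kg: float, usd_per_tonne: float) -> float:
    return kg / 1000 * usd_per_tonne


if __name__ == "__main__":
    a = Server("A (cheaper to build)", embodied_kg=1500, avg_power_kw=1.0, years=5)
    b = Server("B (more efficient)", embodied_kg=3000, avg_power_kw=0.8, years=5)
    for grid in (400, 20):  # fossil-heavy vs. near-carbon-free grid
        for s in (a, b):
            kg = lifecycle_kg(s, grid)
            print(f"{grid:>3} g/kWh  {s.name:<22} {kg:8.0f} kg  "
                  f"${shadow_cost_usd(kg, 100):7.0f} at $100/t")
```

On the fossil-heavy grid, the more efficient server B wins. On the near-carbon-free grid, its larger manufacturing footprint makes it the worse choice. This is the Scope 3 shift described above.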

Industrial Decarbonization: The AI Dividend

We must weigh the energy AI uses against the energy it saves. This “Green Dividend” is now visible in two major areas:

- AI-Designed Concrete: Meta has open-sourced an AI tool that discovers low-carbon concrete formulas. By using Bayesian optimization, the AI identifies mixes that use 35% less carbon and cure faster than standard cement. Meta is now using these formulas to build its own data centers in locations like Rosemount, Minnesota.

- Smart Grid Management: In 2026, AI agents are the primary managers of the “Smart Grid.” They balance millions of EVs and rooftop solar panels in real-time. This has improved grid reliability by 15% and reduced the need for “peaker” fossil fuel plants during heatwaves.

By 2026, we are seeing the rise of the Circular Data Center: AI designs the low-carbon materials used to build the facility, which is then powered by carbon-free nuclear energy to run the AI that optimizes the local power grid.

Conclusion:

In 2026, IT performance is measured by more than speed. Success now depends on your Carbon ROI. Building efficient systems is the only way to stay financially stable in a carbon-constrained world. Use real-time data tools to track energy use across your stack. Apply shadow carbon pricing to every hardware purchase to stress-test your decisions. Engineering teams must focus on reducing “energy per unit of work” for every task. High efficiency is the best path to long-term growth.

Vinova develops MVPs for tech-driven businesses. We help you build the sustainable, high-performance systems your company needs. Our team handles the technical complexity so you can reach your net-zero goals faster. We turn energy-heavy legacy systems into modern, efficient tools.

Contact Vinova today to start your MVP development. Let us help you build a high-performance, net-zero product for the modern market.

FAQs:

How does microfluidic cooling solve AI chip heat issues?

Microfluidic cooling is a radical technology that moves beyond cooling the outside of the chip. It works by:

- Coolant Inside the Silicon: Tiny channels, as thin as a human hair, are etched directly into the back of the silicon, bringing the coolant right next to the chip’s heat-generating circuitry.

- Superior Efficiency: Microsoft lab tests show this method removes heat up to 3 times better than current cold plates and cuts the maximum temperature rise of the silicon inside a GPU by 65%.

- Enabling 3D Chips: It is essential for “3D chips,” where memory and processors are stacked, as it prevents heat from being trapped in the middle layers.

What are the most energy-efficient AI chips in 2026?

The chips with the most significant efficiency gains are:

- Intel Loihi 3 (Neuromorphic): At a peak load of just 1.2W, it is up to 1,000 times more efficient than a GPU for real-time tasks like drone navigation.

- IBM NorthPole (Neuromorphic): It is 25 times more energy-efficient than the NVIDIA H100 for vision tasks.

- Custom ASICs (e.g., Google’s Ironwood TPU, Amazon’s Trainium3, Microsoft’s Maia 200): These Application-Specific Integrated Circuits consume 30–50% less power per inference than general GPUs by stripping away general-purpose logic.

Can neuromorphic computing replace traditional GPUs for sustainability?

Neuromorphic computing offers a paradigm shift in efficiency for specific workloads:

- Energy Efficiency: Neuromorphic chips are significantly more efficient for real-time, event-based tasks. The Intel Loihi 3 is up to 1,000 times more efficient than a GPU for tasks like robotic control.

- Event-Based Processing: They achieve this by only “firing” when something changes, a concept called temporal sparsity. This eliminates the waste of standard GPUs, which process every pixel even in static scenes.

- Specific Use Cases: This makes them the primary choice for the “trillion-sensor” economy, excelling in areas like robotics and real-time vision. While they offer superior efficiency for these specialized tasks, general-purpose GPUs and ASICs still dominate the general training and hyperscale inference markets.

Why is silicon photonics considered a “green” computing technology?

Silicon Photonics (SiPh) is a “Green IT” milestone because it fundamentally changes how data is moved:

- Less Heat in Transit: It replaces electrical signals with light (photons), which avoids the resistive heating of copper traces and carries data over longer distances with almost zero signal loss, eliminating the need for power-hungry Retimers.

- Decouples Bandwidth from Power: It is considered green because it decouples bandwidth from power consumption. You can increase network capacity by adding more “colors” of light (Wavelength Division Multiplexing) through the same fiber with almost no extra energy penalty, unlike traditional copper systems.

What is the “Thermal Wall” in 2026 AI development?

The Thermal Wall is the point where the standard air-cooling method fails due to the laws of physics.

- The Limit of Air: As AI chips became more powerful, they began to generate more heat than air can physically carry away. To cool modern chips, the required airflow would be “hurricane-force,” which would damage equipment.

- Waste Cycle: High-end AI chips like the NVIDIA Rubin generation reached a Thermal Design Power (TDP) of 1,800W per chip. The fans needed to move air at these speeds consume 15–20% of a server’s total power, creating a cycle of waste that air cooling cannot escape.

- The Shift: Hitting this limit triggered a fundamental shift to liquid cooling, which can carry nearly 4,000 times more heat than the same volume of air.
