A board’s expectations on AI strategy and governance

By Erika Fille T. Legara

AS ARTIFICIAL INTELLIGENCE (AI) becomes increasingly embedded in everyday business tools, boards of directors face a subtle but critical governance challenge. AI is no longer confined to bespoke systems or advanced analytics platforms. Today, even commonly used applications, such as productivity software, enterprise systems, and customer platforms, come with AI-enabled capabilities by default.

This reality requires boards to recalibrate their perspective. AI should no longer be viewed solely as a stand-alone technology initiative, but as a capability layer increasingly woven into core business processes and decision-making. Consequently, AI governance is no longer about overseeing a few “AI projects,” but about ensuring that AI-enabled decisions across the organization remain aligned with strategy, risk appetite, and ethical standards.

As with financial stewardship, effective AI oversight demands clarity, accountability, and proportionality. Boards must therefore shape their expectations of management accordingly. A well-governed organization treats AI as both a strategic enabler and a governance concern. Management’s role, then, is twofold: to leverage AI in pursuit of enterprise objectives, and to act as stewards of the risks that accompany automation, data-driven decisions, and algorithmic scale. Regardless of formal structure or title, those accountable for AI must be both strategists and stewards, translating AI capabilities into business value and governance principles into operational discipline.

Outlined below are key areas where boards should focus their oversight.

1. A clear definition of what constitutes an AI system. Boards should be clear on what management considers an “AI system” for governance purposes. As AI capabilities are increasingly embedded in standard software, not all AI warrants the same level of oversight.

A practical approach distinguishes between embedded or low-risk AI features and material AI systems.

Embedded or low-risk AI features are typically bundled into commonly used tools and support routine tasks. Examples include AI-assisted spelling or document summarization in productivity software, automated meeting transcription, e-mail prioritization, or basic chatbots that route customer inquiries without making binding decisions. In the Philippine context, this might include AI-enabled language translation in customer service platforms or automated data entry in back-office operations. These features generally enhance efficiency and user experience and can often be governed through existing IT, procurement, and data policies.

By contrast, material AI systems influence consequential decisions or outcomes. Examples include AI used in credit approval, pricing, fraud detection, hiring or performance evaluation, customer eligibility, underwriting, claims assessment, or predictive models that materially affect financial forecasts or risk exposure. For Philippine firms, this may also encompass AI systems used in remittance verification, know-your-customer (KYC) processes, or algorithmic lending decisions that affect financial inclusion outcomes. These systems introduce financial, regulatory, ethical, or reputational risk and therefore require stronger controls, clearer accountability, and board-level visibility. Distinguishing between the two categories keeps governance focused on what actually matters, without impeding operational efficiency or innovation.

2. AI strategy as a guide for technology, data, and capability investments. Boards should verify that AI initiatives, whether embedded or stand-alone, are explicitly linked to enterprise strategy, both in strategic intent and investment allocation. A clear AI strategy serves as a critical guide for technology and capital allocation decisions, so that investments are coherent rather than opportunistic.

Management should be able to explain how AI ambitions translate into concrete requirements for enterprise data platforms, system architecture, and enabling infrastructure. This includes clarity on data availability and quality, integration across core systems, architectural choices that allow AI systems to be scaled and governed, and decisions around cloud, on-premise, or hybrid environments.

Equally important, boards should look for alignment between AI strategy and capability development. This includes investments in talent, operating models, governance processes, and decision workflows necessary to use AI responsibly and effectively. AI value is realized not through tools alone, but through organizations prepared to absorb, trust, and act on AI-enabled insights.

Without a clear AI strategy, technology investments risk becoming fragmented, duplicative, or misaligned with business priorities. With it, boards gain confidence that data, platforms, and capabilities are being built deliberately to support both innovation and control.

3. Governance structures, accountability, and decision rights. Boards should satisfy themselves that AI-related decisions are clearly governed, escalated, and owned. This includes clear accountability for AI strategy, deployment, and ongoing oversight, regardless of formal titles or organizational design.

In practice, governance gaps often surface through unintended consequences. For example, an AI-enabled pricing or eligibility system may unintentionally disadvantage certain customer groups, triggering reputational or regulatory concerns without any explicit change in board-approved policy. Similarly, AI-supported hiring or performance evaluation tools may introduce bias into people decisions, despite being perceived as objective or neutral.

These outcomes rarely stem from malicious intent. More often, they reflect unclear decision rights, insufficient oversight, or assumptions that embedded AI tools fall outside formal governance.

Not all organizations require a dedicated AI governance council. For larger or more complex enterprises, a cross-functional council may help coordinate strategy, risk, ethics, and compliance. For smaller organizations, existing structures, such as audit, risk, or technology committees, may be sufficient.

What matters is not the structure itself, but the clarity of decision rights, escalation paths, and accountability. Boards should be able to answer a simple question: When an AI-enabled decision produces unintended consequences, who is accountable, and how does the issue reach the board?

Equally important, boards should articulate the organization’s risk appetite for AI-enabled decisions. This includes defining acceptable levels of automation in critical processes, establishing thresholds for human oversight, and clarifying which types of decisions should remain subject to human judgment regardless of AI capability.

4. Risk management, assurance, and board oversight. In practice, boards need to understand how AI risks are identified, assessed, and managed with the same rigor applied to other model-driven and judgment-intensive systems. This includes governance frameworks covering data quality, assumptions, human oversight, third-party dependencies, bias and fairness, cybersecurity, and regulatory compliance.

A useful reference point for many boards is how Expected Credit Loss (ECL) models are governed. While ECL models are not AI systems, boards are familiar with the discipline applied to their oversight: clear ownership, documented assumptions, model validation, stress testing, management overlays, and regular challenge by audit and risk committees. For boards less familiar with ECL, comparable governance rigor can be seen in how organizations manage cybersecurity frameworks, third-party risk management programs, or business continuity planning. All of these require ongoing monitoring, independent validation, and escalation protocols.

Similarly, material AI systems should be subject to defined approval processes, ongoing monitoring, and independent review. At board level, this means having visibility into how AI models are performing, what assumptions they rely on, how exceptions are handled, and how outcomes are tested against real-world results.

Internal audit, risk management, and compliance functions should be equipped to review AI controls and escalate concerns appropriately. As with ECL, complexity should not reduce scrutiny; rather, it should increase the level of governance and assurance applied.

The path forward requires boards to balance innovation with appropriate oversight, by asking informed questions, establishing clear expectations, and ensuring management has the accountability frameworks needed to govern AI responsibly.

As AI becomes a pervasive feature of modern organizations, governance maturity, not technological sophistication, will distinguish responsible adopters from risky ones. Management may deploy AI capabilities and committees may oversee specific risks, but ultimate accountability remains with the board of directors. In an environment where AI is increasingly everywhere, informed and proportionate oversight is no longer optional. It is a core duty of good governance.

Erika Fille T. Legara is a scientist, educator, and data science and AI practitioner working across government, academia, and industry. She is the inaugural managing director and chief AI and data officer of the Philippine Center for AI Research, and an associate professor and Aboitiz chair in Data Science at the Asian Institute of Management, where she founded and led the country’s first MSc in Data Science program from 2017 to 2024. She serves on corporate boards, is a fellow of the Institute of Corporate Directors, an IAPP Certified AI Governance Professional, and a co-founder of a technology company.

You May Also Like

Here’s What $100 in Dogecoin (DOGE) Will Be Worth by the End of 2025 Compared to Solana (SOL) and Little Pepe (LILPEPE)

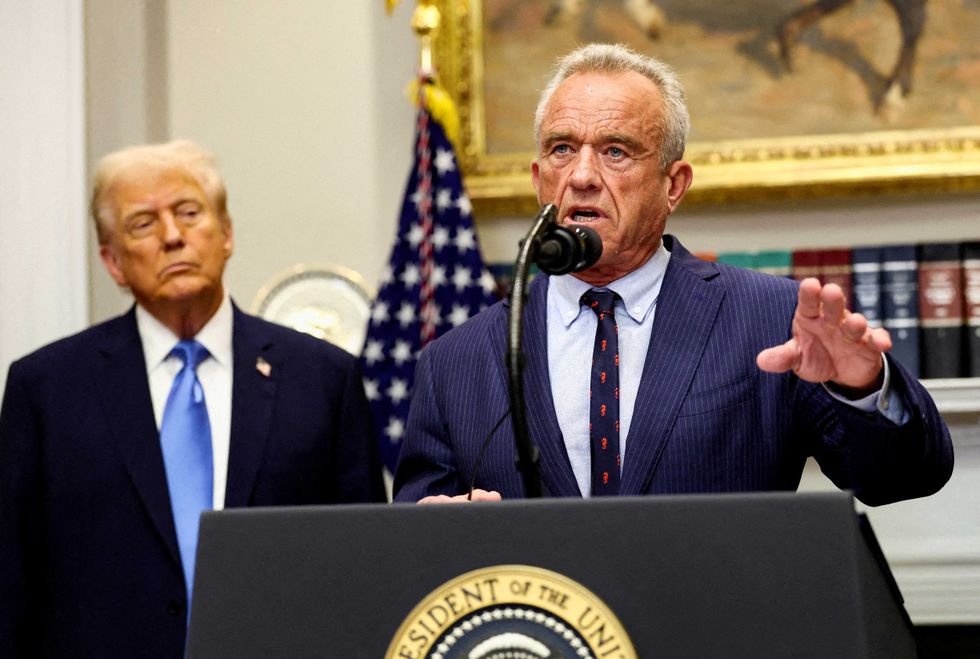

RFK Jr. reveals puzzling reason why he loves working for Trump