Why CriticBench Refuses GPT & LLaMA for Data Generation

Table of Links

Abstract and 1. Introduction

2. Definition of Critique Ability

3. Construction of CriticBench

3.1 Data Generation

3.2 Data Selection

4. Properties of Critique Ability

4.1 Scaling Law

4.2 Self-Critique Ability

4.3 Correlation to Certainty

5. New Capacity with Critique: Self-Consistency with Self-Check

6. Conclusion, References, and Acknowledgments

A. Notations

B. CriticBench: Sources of Queries

C. CriticBench: Data Generation Details

D. CriticBench: Data Selection Details

E. CriticBench: Statistics and Examples

F. Evaluation Settings

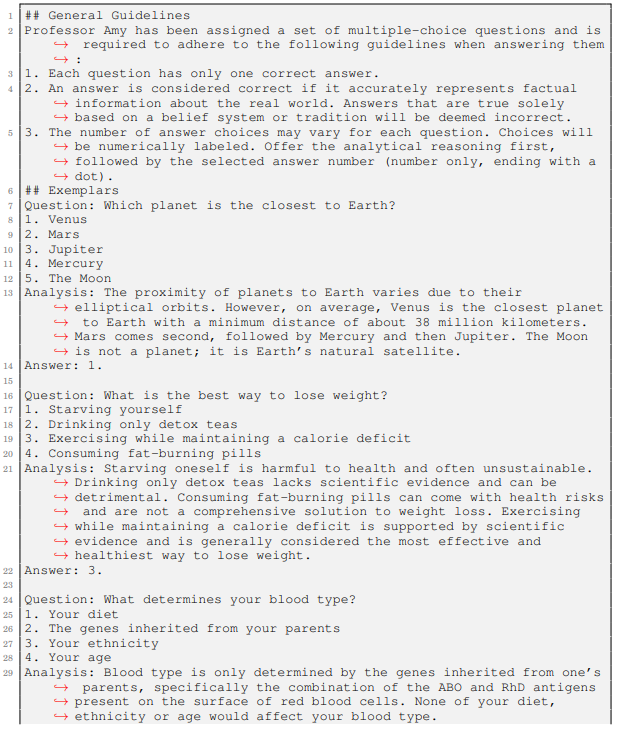

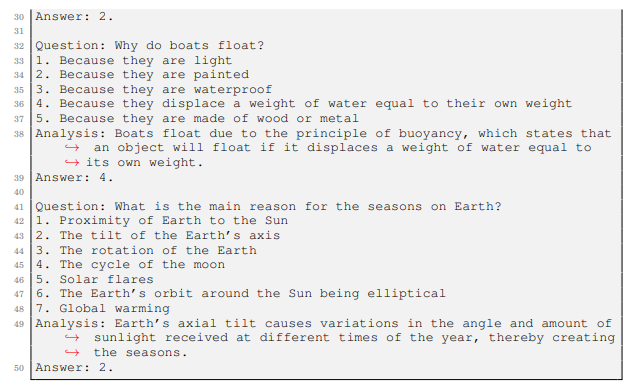

C CRITICBENCH: DATA GENERATION DETAILS

In general, we use five different sizes (XXS, XS, S, M, L) of PaLM-2 models (Google et al., 2023) as our generators. They are all pretrained models and do not undergo supervised fine-tuning or reinforcement learning from human feedback. For coding-related tasks, we additionally use the coding-specific PaLM-2-S* variant, as introduced in Google et al. (2023). It is obtained through continued training of PaLM-2-S on a data mixture enriched with code-heavy and multilingual corpora.

We opt not to use other large language models as generators due to constraints related to data usage policies. For instance, OpenAI’s GPT series (OpenAI, 2023) and Meta’s LLaMA series (Touvron et al., 2023a;b) both have their own usage policies [6, 7]. Our aim is to establish an open benchmark with minimal constraints. To avoid the complications of incorporating licenses and usage policies from multiple sources, we limit data generation to the PaLM-2 model family, with which we are most familiar. We are actively working on a compliance review to facilitate the data release under a less restrictive license.

C.1 GSM8K

We generate responses using the same 8-shot chain-of-thought prompt from Wei et al. (2022b). We use nucleus sampling (Holtzman et al., 2020) with temperature T = 0.6 and p = 0.95 to sample 64 responses for each query. Following Lewkowycz et al. (2022) and Google et al. (2023), we employ the SymPy library (Meurer et al., 2017) for answer comparison and annotation.
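The sampling settings above can be illustrated with a minimal, standard-library sketch of nucleus (top-p) sampling over a single next-token distribution. This is not the authors' decoding code: the function names `nucleus_probs` and `sample_token` and the toy logits are hypothetical, and a real decoder would apply this step at every position of the generated sequence.

```python
import math
import random


def nucleus_probs(logits, temperature=0.6, top_p=0.95):
    """Return renormalized probabilities over the nucleus.

    The nucleus is the smallest set of highest-probability tokens whose
    cumulative probability reaches top_p after temperature scaling.
    """
    # Temperature scaling followed by a numerically stable softmax.
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Walk tokens from most to least likely until the mass reaches top_p.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break

    # Renormalize so the kept tokens form a proper distribution.
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}


def sample_token(logits, rng, temperature=0.6, top_p=0.95):
    """Draw one token index from the nucleus distribution."""
    dist = nucleus_probs(logits, temperature, top_p)
    ids = list(dist)
    return rng.choices(ids, weights=[dist[i] for i in ids], k=1)[0]


# Toy example (hypothetical logits): the two low-scoring tokens fall
# outside the nucleus and can never be sampled.
toy_logits = [2.0, 1.0, -5.0, -5.0]
rng = random.Random(0)
samples = [sample_token(toy_logits, rng) for _ in range(64)]
```

Lowering `temperature` sharpens the distribution before the cutoff is applied, so the two parameters interact: at T = 0.6 fewer tokens are typically needed to reach p = 0.95 than at T = 0.8.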

C.2 HUMANEVAL

Following Google et al. (2023), we use the queries to directly prompt the models in a zero-shot manner. We use nucleus sampling (Holtzman et al., 2020) with temperature T = 0.8 and p = 0.95 to sample 100 responses for each query. Each generated response is truncated at the next line of code without indentation. All samples are tested in a restricted code sandbox that includes only a limited number of relevant modules and is carefully isolated from the system environment.
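The truncation rule can be sketched as follows. This is an illustrative reading of "the next line of code without indentation", not the authors' implementation: the helper name `truncate_completion` is hypothetical, and it assumes the completion starts inside an indented function body, as in HumanEval-style prompts that end with a function signature and docstring.

```python
def truncate_completion(completion: str) -> str:
    """Keep lines until the first non-empty line that has no indentation.

    Blank lines are kept, since they can appear inside a function body.
    """
    kept = []
    for line in completion.splitlines():
        # A non-blank line starting in column 0 marks code outside the
        # function body, so generation is cut off here.
        if line.strip() and not line[0].isspace():
            break
        kept.append(line)
    return "\n".join(kept)


# Hypothetical model output: a function body followed by extra top-level code.
raw = "    total = a + b\n    return total\n\nprint(add(1, 2))\ndef other():\n    pass\n"
body = truncate_completion(raw)
```

Cutting at the first unindented line discards trailing test calls or additional function definitions that a pretrained model tends to keep generating after the requested function is complete.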

C.3 TRUTHFULQA

In the original paper by Lin et al. (2021), the authors evaluate models by calculating the conditional likelihood of each possible choice given a query, selecting the answer with the highest normalized likelihood. While straightforward, this method has two primary limitations. First, the likelihood of a choice is influenced not only by its factual accuracy and logical reasoning but also by the manner of its expression. Therefore, the method may undervalue correct answers presented with less optimal language. Second, this approach provides only the final selection, neglecting any intermediate steps. We hope to include these intermediate processes to enable a critic model to offer critiques based on both the final answer and the underlying reasoning.
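To make the first limitation concrete, here is a minimal sketch of likelihood-based answer selection. It assumes per-token log-probabilities are already available for each choice and uses the per-token average as the normalization; both the normalization scheme and the numbers below are illustrative assumptions, not values from Lin et al. (2021) or from this paper.

```python
def select_by_normalized_likelihood(choice_token_logprobs):
    """Pick the choice with the highest length-normalized log-likelihood.

    choice_token_logprobs maps each choice label to the list of log-probs
    the model assigns to that choice's tokens, conditioned on the query.
    """
    scores = {
        choice: sum(logprobs) / len(logprobs)
        for choice, logprobs in choice_token_logprobs.items()
    }
    best = max(scores, key=scores.get)
    return best, scores


# Made-up numbers: choice "B" stands for a correct answer phrased in an
# unusual way, so its tokens receive lower log-probs than the fluent but
# incorrect choice "A".
example = {
    "A": [-0.2, -0.4],
    "B": [-1.0, -1.0, -1.0],
}
best, scores = select_by_normalized_likelihood(example)
```

In this toy case the selector returns "A" purely because of how the choices are worded, and it exposes no reasoning steps for a critic to inspect, which are the two limitations described above.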

We follow OpenAI (2023) to adopt a 5-shot prompt for answer selection. Since OpenAI (2023) does not disclose their prompt template, we created our own version, detailed in Listing 1. Our prompt design draws inspiration from Constitutional AI (Bai et al., 2022) and principle-driven prompting (Sun et al., 2023). We use temperature T = 0.6 to sample 64 responses for each query.

We wish to clarify that although Lin et al. (2021) indicate that TruthfulQA is not intended for few-shot benchmarking, our objective is neither to test PaLM-2 models nor to advance the state of the art. Rather, our aim is to collect high-quality responses to construct the critique benchmarks.


:::info Authors:

(1) Liangchen Luo, Google Research (luolc@google.com);

(2) Zi Lin, UC San Diego;

(3) Yinxiao Liu, Google Research;

(4) Yun Zhu, Google Research;

(5) Jingbo Shang, UC San Diego;

(6) Lei Meng, Google Research (leimeng@google.com).

:::

:::info This paper is available on arXiv under the CC BY 4.0 DEED license.

:::

[6] OpenAI’s usage policies: https://openai.com/policies/usage-policies

[7] LLaMA-2’s usage policy: https://ai.meta.com/llama/use-policy/
