Your AI investment remains a liability until your organization can orchestrate a response when intelligent systems fail.

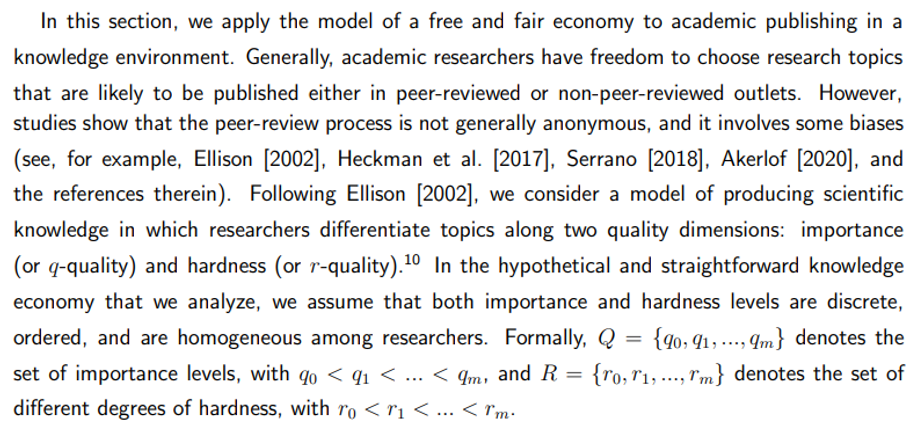

Here are global risk projections for 2024 as tensions in the Middle East escalate and major economies face elections this year. Data source: WEF’s Global Risks Report 2024. (Graphic by Visual Capitalist via Getty Images)


Executives across industries are rightly proud of their AI investments, but few fully acknowledge the new risk landscape those systems create. Manufacturers report double-digit improvements in quality control. Healthcare leaders see enhanced diagnostic precision. Financial institutions reduce fraud losses. Energy operators achieve unprecedented grid optimization.

Then I ask a simple question: “What happens when your AI flags an anomaly it wasn’t trained to recognize?”

The room goes quiet.

Because that’s where the hidden cost, and hidden risk, of AI lives: not in the model itself, but in the moments when intelligent systems encounter situations outside their training data and the organization can’t interpret, decide, or act fast enough. AI doesn’t eliminate operational risk. It moves it, and often magnifies its consequences.

The New Risk Point in AI-Driven Operations

At Stanford’s Center for International Security and Cooperation (CISAC), where I studied systemic failure across nuclear risk, biosecurity, AI safety, and cyber-physical cascades, one pattern kept reappearing: technology rarely fails catastrophically. Human response does, and systemic failure almost always begins with how humans interpret early signals. Recent Forbes reporting on global systemic vulnerabilities reinforces this pattern, showing how intelligent systems increasingly fail at the edges, where training data ends and operational risk begins (Snyder, 2025).

Across sectors, the specifics differ but the pattern does not. In advanced manufacturing, autonomous inspection systems flag defect patterns no engineer has seen before. In hospital ICUs, clinical decision support tools identify contradictory treatment pathways. In financial markets, anomaly-detection algorithms surface irregularities that could signal emerging instability. In energy systems, grid-optimization AI predicts load failures that may cascade across regions. In logistics, routing algorithms encounter conditions never seen in training data. In aerospace and defense, autonomy supervisors must decide when to override systems behaving unexpectedly.

In each case, someone must make a judgment call under time pressure: false alarm or systemic failure? These decisions directly determine operational resilience, yet conventional training does little to prepare teams for them. Misjudge, and the consequences escalate, from operational interruptions to regulatory exposure, safety risks, or large-scale outages.

The AI did what it was designed to do. The organization’s human orchestration capability—the ability to recognize, interpret, and respond—determined the outcome.

Why Enterprise Training Misses Critical Risk Signals

Most companies invest heavily in what can be called baseline capability: teaching teams to use AI-augmented tools safely and correctly. These are essential skills, but they focus on operation, not orchestration.

Across my work with more than 300 corporations in manufacturing, healthcare, energy, finance, logistics, and aerospace, the distribution of training investment rarely varies: the vast majority goes toward baseline operational proficiency, with far less allocated to cross-functional integration and adaptive decision-making.

Executives then wonder why teams “follow procedures perfectly but hesitate when AI behaves unexpectedly.” Forbes has recently documented how workforce uncertainty increases as AI systems surface new kinds of warnings that demand interpretation rather than automation (Wells, 2025).

The capabilities organizations routinely underinvest in—especially those essential for effective human-AI collaboration—include:

- Systems Thinking — seeing patterns and implications across interconnected processes

- Human–AI Coordination — knowing when algorithms require human override

- Cross-System Orchestration — managing multiple intelligent systems simultaneously

- Risk Navigation — anticipating cascading failures before they start

- Adaptive Leadership — making decisions under uncertainty when no algorithm has context

These are not traditional “soft skills.” They are enterprise-critical capabilities that determine whether AI creates value or amplifies risk.

Rethinking the Metric That Matters for Risk

Executives often assume that more training hours lead to more capability. But training volume does not predict whether teams can respond effectively when AI systems encounter edge cases.

A more meaningful indicator is an organization’s ability to compress the time between an unexpected risk event and a correct adaptive response, without sacrificing decision quality. In my work, this emerging concept—call it decision-response latency—has proven far more predictive of AI-era performance than training completions or certification rates.

Most organizations don’t measure this at all. They track what’s easy to quantify, not what determines resilience.
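As a rough illustration of how such a measure could be tracked, the sketch below computes a median response time alongside the share of correct responses for a handful of logged anomalies. The `AnomalyEvent` record, its field names, and the sample data are all hypothetical, invented here for illustration; they are not a standard schema or the method used in my own work.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass
class AnomalyEvent:
    """One AI-flagged anomaly and the human response to it (hypothetical schema)."""
    flagged_at: datetime      # when the AI raised the unexpected signal
    resolved_at: datetime     # when the team committed to a response
    response_correct: bool    # judged afterwards, e.g. in a post-incident review


def decision_response_latency(events):
    """Return (median latency in minutes, share of correct responses).

    Both numbers are reported together so that a faster response is never
    credited when it comes at the cost of decision quality.
    """
    if not events:
        raise ValueError("need at least one event")
    latencies = [
        (e.resolved_at - e.flagged_at).total_seconds() / 60
        for e in events
    ]
    correct_share = sum(e.response_correct for e in events) / len(events)
    return median(latencies), correct_share


# Illustrative sample data only, not real measurements.
events = [
    AnomalyEvent(datetime(2025, 1, 6, 9, 0), datetime(2025, 1, 6, 9, 20), True),
    AnomalyEvent(datetime(2025, 1, 7, 14, 0), datetime(2025, 1, 7, 14, 50), False),
    AnomalyEvent(datetime(2025, 1, 9, 3, 30), datetime(2025, 1, 9, 4, 0), True),
]
latency_minutes, correct_share = decision_response_latency(events)
print(f"median latency: {latency_minutes:.0f} min, correct: {correct_share:.0%}")
```

Reporting the two figures side by side is a deliberate choice in this sketch: a falling latency only signals progress if the share of correct responses holds steady or improves.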

The Human+ Capability Every Risk-Aware Enterprise Needs

Over 27 years and across industries, a consistent pattern has emerged: organizations that succeed with AI invest not only in operational proficiency, but in what I call Human+ capability—the integrated capacity that emerges when a specific set of foundational, integrative, and adaptive skills are developed together.

Human+ capability builds across three layers: operational baseline (enabling people to safely work with intelligent systems), integration capability (enabling teams to coordinate across processes, systems, and functions), and adaptive leadership (enabling leaders to navigate novel, high-stakes situations with incomplete information).

Human+ is not a single competency. It is an organizational capability: the ability to interpret unexpected signals, adjust processes dynamically, and maintain resilience when algorithmic behavior deviates from expectations. When teams collectively possess this set of skills, they are better placed to keep small anomalies from escalating into systemic failure. As Forbes noted in its analysis of this year’s Nobel Prize and the evolving landscape of AI risk, the organizations that pair deep human judgment with advanced automation are the ones positioned to remain resilient (Carvão, 2025).

Until an enterprise builds this capability, AI investments tend to underperform or introduce new vulnerabilities.

The Risk Rebalancing That Generates ROI

Consider one industrial organization that rebalanced training investment from an 80/20 operational-heavy distribution to a structure that placed meaningful weight on cross-functional integration and adaptive leadership.

Within months, they noted fewer unplanned operational disruptions and faster, more confident decision-making when intelligent systems behaved unpredictably. The improvement did not come from new AI. It came from new capability: teams who could orchestrate the AI they already had, and who delivered measurable gains in operational resilience as a result.

This is what executives consistently underestimate: the return on AI is a function of the organization’s ability to adapt when the AI doesn’t have the answer.

Monday Morning Action for Executives

AI deployment is no longer an IT initiative. Workforce capability is no longer an HR initiative. Both are now enterprise-risk issues.

Every intelligent system you deploy creates new failure modes. Your competitive advantage lies in whether teams can detect, diagnose, and act when those systems encounter situations they were never trained on.

This capability—Human+ orchestration—is the difference between AI as a productivity multiplier and AI as a strategic liability. In a world where intelligent systems routinely encounter situations outside their training data, your organization’s ability to respond is now a core dimension of enterprise risk. Those that develop Human+ capability will convert AI into advantage; those that don’t will inherit escalating vulnerabilities.

Trond Undheim is the author of “The Platinum Workforce” (Anthem Press, November 25). He previously served as a Research Scholar at Stanford’s Center for International Security and Cooperation, focusing on workforce capability under systemic stress.

Source: https://www.forbes.com/sites/trondarneundheim/2025/11/24/the-hidden-50000-per-hour-gap-in-your-ai-risk-management/