The Five Agentic AI Hurdles Companies Will Face in 2026

This has been the year of AI hype. While generative AI (GenAI) is already over the hill on the Gartner Hype Cycle for Emerging Technologies, agentic AI is reaching the peak. It represents a dramatic leap forward in AI technology because it combines reasoning with autonomy. Agentic AI-driven solutions have the potential to improve efficiency and precision, deliver better insights, support creativity, and more. Companies are getting behind industry standards for agentic AI interoperability, and there are reports of companies dramatically altering their strategies to upgrade their businesses with agentic AI solutions.

However, AI burnout from the hype that began with generative AI is already creeping in. Gartner calls this period the “trough of disillusionment,” and some emerging technologies die at this stage. Agentic AI, though, will certainly emerge triumphant: it is too powerful, accessible and valuable for companies to give up. Still, our industry faces real hurdles in 2026 if it is to get the maximum value from AI agents in digital advertising. Here are five that my team is focused on.

Data Quality Increases in Importance

Digital advertising is an industry built on data. At the same time, it is challenging to evaluate the quality of essential data, including audience data, inventory placement data, and contextual data. Brands constantly fight for higher-quality signals and more reliable data to reach audiences on quality placements.

Inaccurate data can derail an agentic AI media strategy. The saying “garbage in, garbage out” is very real, and advertisers need to actively guard against it. We should keep investing in finding quality data, processing it efficiently, cleaning it, analyzing it thoroughly and understanding it before granting an agent access to it for decision-making and action-taking. Using AI agents will not rescue companies from bad outcomes if the agents are fed bad data, although agents can make it easier to analyze data and identify where it is bad.
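To make the cleaning step more concrete, here is a minimal Python sketch of a validation gate that sits between raw placement data and an agent. The `PlacementRecord` schema, its field names and the specific checks are hypothetical, chosen only to illustrate the idea; a real pipeline would apply far richer rules to its own audience, inventory and contextual data.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class PlacementRecord:
    # Hypothetical schema, for illustration only.
    placement_id: str
    impressions: int
    clicks: int
    audience_segment: Optional[str]


def validate(
    records: List[PlacementRecord],
) -> Tuple[List[PlacementRecord], List[Tuple[PlacementRecord, str]]]:
    """Split records into (clean, rejected) before any agent is allowed to use them."""
    clean: List[PlacementRecord] = []
    rejected: List[Tuple[PlacementRecord, str]] = []
    seen_ids = set()
    for r in records:
        if not r.placement_id:
            rejected.append((r, "missing placement_id"))
        elif r.impressions < 0 or r.clicks < 0:
            rejected.append((r, "negative count"))
        elif r.clicks > r.impressions:
            rejected.append((r, "clicks exceed impressions"))
        elif r.audience_segment is None:
            rejected.append((r, "missing audience segment"))
        elif r.placement_id in seen_ids:
            rejected.append((r, "duplicate placement_id"))
        else:
            seen_ids.add(r.placement_id)
            clean.append(r)
    return clean, rejected


if __name__ == "__main__":
    sample = [
        PlacementRecord("p1", 1000, 12, "sports"),
        PlacementRecord("p2", 500, 600, "news"),    # more clicks than impressions
        PlacementRecord("p1", 1000, 12, "sports"),  # duplicate of the first record
        PlacementRecord("p3", 200, 3, None),        # no audience segment
    ]
    clean, rejected = validate(sample)
    print(len(clean), "clean record(s)")
    for record, reason in rejected:
        print(record.placement_id, "rejected:", reason)
```

Keeping the rejection reasons, rather than silently dropping records, shows which data sources are degrading and gives teams something concrete to investigate.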

Data Privacy and Security Become Even More Serious

AI agents rely on large volumes of data, including user engagement patterns, campaign performance metrics, and contextual and behavioral signals, to make informed decisions. This data must be protected at all times. On the security side, one growing threat is prompt injection, in which malicious or unintended inputs manipulate an AI model’s behavior, potentially exposing confidential information or triggering unintended agentic actions.

To address these issues, organizations need to build privacy and security measures directly into the design of their AI agent infrastructure. This starts with using large language models (LLMs) through provider accounts whose terms and conditions explicitly state that data will not be retained or used for model training. Companies should also establish a robust authentication ecosystem so that every interaction between agents and systems is verified and properly authorized.
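One common way to check that every agent-to-system call is both verified and authorized is to have each agent sign its requests with a shared secret, then check that signature, plus a per-agent allow-list of actions, on the receiving side. The Python sketch below assumes HMAC-SHA256 request signing; the agent IDs, secrets, action names and five-minute clock-skew window are all hypothetical. It omits nonce tracking, so a captured request could still be replayed inside the window, and production systems would more likely build on an established standard such as OAuth 2.0 or mutual TLS.

```python
import hashlib
import hmac
import time
from typing import Optional

# Hypothetical agent registry. Real secrets belong in a secrets manager, not in code.
AGENT_SECRETS = {"bidding-agent": b"example-secret-do-not-use"}
# Hypothetical scopes: the actions each agent is allowed to request.
AGENT_PERMISSIONS = {"bidding-agent": {"read_metrics", "adjust_bid"}}
MAX_CLOCK_SKEW_SECONDS = 300


def sign(agent_id: str, action: str, body: bytes, timestamp: int) -> str:
    """HMAC-SHA256 over the agent ID, action, timestamp and request body."""
    secret = AGENT_SECRETS[agent_id]
    message = f"{agent_id}|{action}|{timestamp}|".encode() + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify(agent_id: str, action: str, body: bytes, timestamp: int,
           signature: str, now: Optional[int] = None) -> bool:
    """Authenticate: known agent, fresh timestamp and a matching signature."""
    if agent_id not in AGENT_SECRETS:
        return False
    now = int(time.time()) if now is None else now
    if abs(now - timestamp) > MAX_CLOCK_SKEW_SECONDS:
        return False  # stale or future-dated request
    expected = sign(agent_id, action, body, timestamp)
    return hmac.compare_digest(expected, signature)  # constant-time comparison


def authorize(agent_id: str, action: str, body: bytes, timestamp: int,
              signature: str, now: Optional[int] = None) -> bool:
    """Authorize: the request must authenticate AND the action must be in scope."""
    if not verify(agent_id, action, body, timestamp, signature, now):
        return False
    return action in AGENT_PERMISSIONS.get(agent_id, set())


if __name__ == "__main__":
    ts = int(time.time())
    body = b'{"campaign": "c-123", "bid": 2.5}'
    sig = sign("bidding-agent", "adjust_bid", body, ts)
    print(authorize("bidding-agent", "adjust_bid", body, ts, sig))         # True
    print(authorize("bidding-agent", "adjust_bid", b"tampered", ts, sig))  # False
    sig_delete = sign("bidding-agent", "delete_campaign", body, ts)
    print(authorize("bidding-agent", "delete_campaign", body, ts, sig_delete))  # False: out of scope
```

Signing the action together with the body means a valid signature for one action cannot be reused to request a different one.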

Throughout this process, the research and development team should involve other teams in the organization, such as Legal and Security, who can assist with risk assessment and offer early advisory support.

The Supply Chain Experiences Error Amplification

In agentic AI systems, each component, ranging from perception and planning to reasoning and execution, functions like a link in a supply chain. While each part may achieve high accuracy independently, the probabilistic nature of these processes means that minor inaccuracies can accumulate across steps. By the time a task passes through multiple decision layers, the overall system accuracy can significantly decrease.
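A rough way to see this effect is to treat each step's accuracy as the probability that it gets its part right. If the steps fail independently, the whole chain is correct only when every step is, so overall accuracy is the product of the per-step accuracies. The Python sketch below uses an illustrative 95% per-step figure, which is an assumption rather than a measured value. Real errors are often correlated, and a later step can sometimes catch an earlier mistake, so treat this as a simplification.

```python
import math
from typing import Iterable


def pipeline_accuracy(step_accuracies: Iterable[float]) -> float:
    """Chance that every step is correct, assuming steps fail independently."""
    return math.prod(step_accuracies)


if __name__ == "__main__":
    per_step = 0.95  # illustrative figure, not a measurement
    for steps in (1, 3, 5, 10):
        overall = pipeline_accuracy([per_step] * steps)
        print(f"{steps:>2} steps: {overall:.1%} end-to-end accuracy")
```

Under these assumptions, five steps that are each 95% accurate yield roughly 77% end-to-end accuracy, and ten steps fall to about 60%.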

Beyond technical degradation, the amplification of errors poses a serious challenge to user trust. When an AI agent provides inconsistent or incomprehensible outcomes, users tend to view the system as unreliable.

This is why it is crucial to integrate guardrails, prompt tracking, data logging and monitoring, along with feedback loops, into the architecture. These elements can help AI-driven advertising agents achieve both operational efficiency and trustworthiness.

It is also hard to evaluate whether AI agent responses are accurate, so it is important to build extensive testing, both manual and automated, into the process. Incorporating a human in the loop is essential, especially in the initial stages. Right now, AI is being talked about as an autonomous solution, but it is best thought of as a co-pilot, with a human pilot keeping the plane flying straight. And like all pilots, co-pilots need a lot of training to build skills and trust.
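A simple pattern that combines guardrails, logging and a human in the loop is to classify every action an agent proposes before it is executed: apply small changes automatically, route larger ones to a person for approval, and block anything beyond a hard limit. The Python sketch below applies this to a hypothetical daily budget change; the `ProposedAction` type and the 10% and 50% thresholds are illustrative assumptions, not recommended values.

```python
import logging
from dataclasses import dataclass
from enum import Enum

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("agent-guardrail")

# Illustrative thresholds; real values would be set by the business.
AUTO_APPROVE_MAX_CHANGE = 0.10  # changes up to 10% are applied automatically
HARD_LIMIT_MAX_CHANGE = 0.50    # changes above 50% are always blocked


class Decision(Enum):
    AUTO_APPROVE = "auto_approve"
    NEEDS_HUMAN = "needs_human"
    REJECT = "reject"


@dataclass(frozen=True)
class ProposedAction:
    # Hypothetical action an agent might propose.
    campaign_id: str
    current_daily_budget: float
    new_daily_budget: float


def review(action: ProposedAction) -> Decision:
    """Classify a proposed budget change before anything is executed."""
    if action.current_daily_budget <= 0 or action.new_daily_budget < 0:
        decision = Decision.REJECT  # invalid input never reaches execution
    else:
        change = (abs(action.new_daily_budget - action.current_daily_budget)
                  / action.current_daily_budget)
        if change > HARD_LIMIT_MAX_CHANGE:
            decision = Decision.REJECT
        elif change > AUTO_APPROVE_MAX_CHANGE:
            decision = Decision.NEEDS_HUMAN  # a person approves or declines
        else:
            decision = Decision.AUTO_APPROVE
    # Log every decision so outcomes can be audited and fed back into testing.
    log.info("campaign=%s budget %.2f -> %.2f decision=%s",
             action.campaign_id, action.current_daily_budget,
             action.new_daily_budget, decision.value)
    return decision


if __name__ == "__main__":
    for proposal in (
        ProposedAction("c-1", 100.0, 105.0),
        ProposedAction("c-2", 100.0, 130.0),
        ProposedAction("c-3", 100.0, 300.0),
    ):
        print(proposal.campaign_id, review(proposal).value)
```

Starting with a tight auto-approve threshold and loosening it as the agent earns trust mirrors the co-pilot training described above.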

AI Will Not Replace AdTech Overnight

As in any mature industry, a transformative technology won’t replace legacy technology overnight. Linear versus streaming TV is a relevant example. Millions of people have access to streaming, but linear still attracts the majority of viewers for premier events like the Super Bowl and the World Cup. After two decades of digital video technology, legacy TV companies are only now starting to build for streaming, while still having to manage linear for the foreseeable future.

Digital advertising is similar. Advertisers and agencies have entrenched relationships with DSPs, cloud data providers and platforms. Publishers have many integrations that they rely on for demand. Simply adding an AI agent to a workflow will not replace this infrastructure overnight. It will take time for most brands and publishers to embrace AI completely.

Humans Will Shift Their Responsibilities

Every player in the industry, from brands to agencies, tech companies and publishers, will have to determine where AI replaces work that people do today and where it enhances that work. Some highly laborious work that often requires significant staffing could become much more automated, with smaller teams overseeing AI tools.

Other work, including media strategy and delivery, creative design, campaign optimization and analysis, will require humans to shift their approach but still pilot the process. People will find that they are expected to increase their output, use more data, or use more complex processes. The best outcome is that people using AI feel empowered to do more interesting work, but this will only happen if companies create a mandate to embrace AI as a strategic opportunity rather than simply a time-saving tool.

Part of this last challenge is managing a range of reactions within the company. Some people are early adopters who will rush in too quickly, raising risks. Others will be skeptical and resist adopting new technologies. Both reactions are inevitable, and companies need a realistic plan for managing each.

AI is not just another shiny object; it will survive the hype cycle. We all need to invest in solving the challenges that arise as AI agents enter our market in earnest next year, so that we avoid risks and enjoy the efficiency and power that AI agents could bring to digital advertising.

You May Also Like

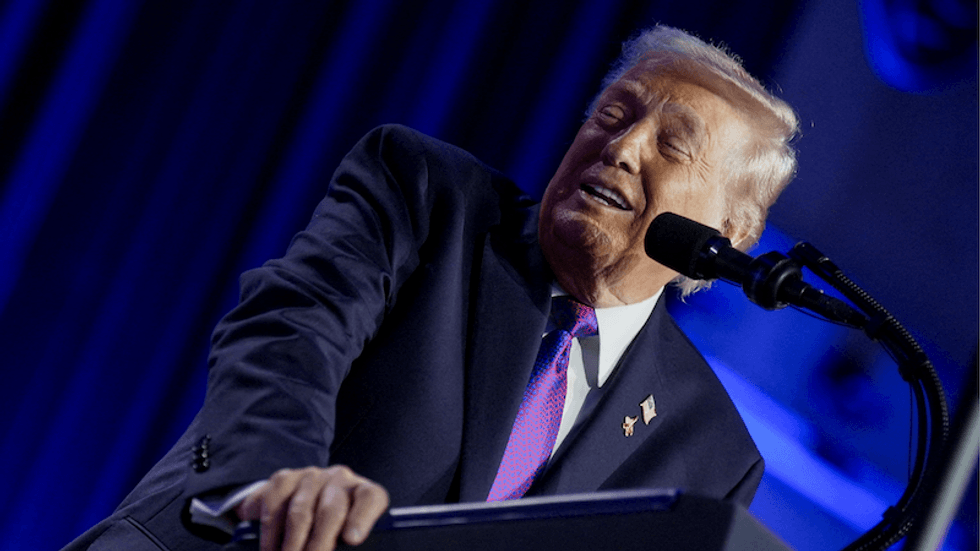

Trump roasts Mike Johnson for saying grace at prayer event: 'Excuse me, it's lunch!'

Where Can You Turn $1,000 Into $5,000 This Week? Experts Point Towards Remittix As The Best Option