The Future of Crypto Transactions? AI That Predicts Network Congestion

Table of Links

Abstract and 1. Introduction

- Preliminaries

- Problem definition

- BtcFlow

- Bitcoin Core (BCore)

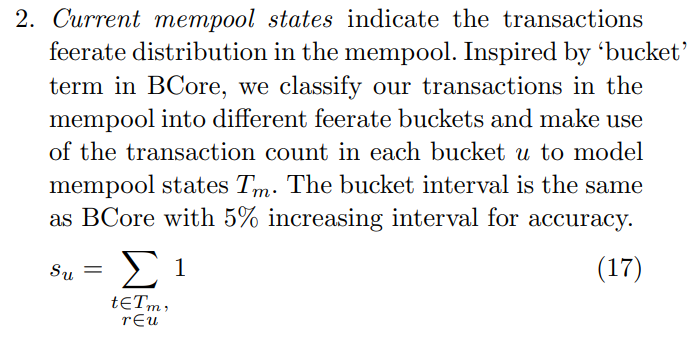

- Mempool state and linear perceptron machine learning (MSLP)

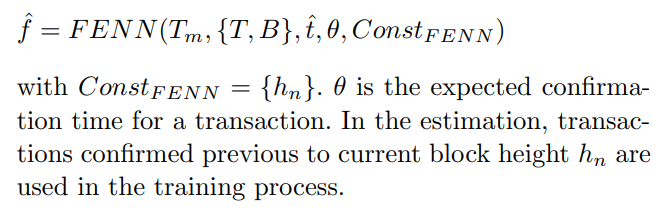

- Fee estimation based on neural network (FENN)

- Experiments

- Conclusion, Acknowledgements, and References

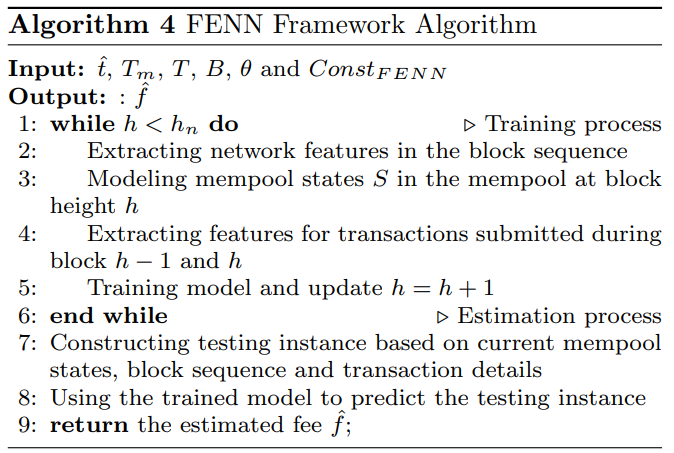

7 Fee estimation based on neural network (FENN)

Due to the low block capacity, most submitted transactions may experience some confirmation delay. After submission, transactions are added to miners’ mempools, where they compete for confirmation in the next block. A transaction is considered complete when it is recorded in a block on the blockchain. During confirmation, transaction fees serve as the incentive for miners to include transactions in the blockchain. We identify three groups of features that may influence transaction confirmation:

– Transaction features, which describe the submitted transaction.

– Mempool states, which record the distribution of feerates of unconfirmed transactions in the mempool, implicitly modelling the competition among unconfirmed transactions.

– Network features, which reflect the characteristics of the mined blocks, including block size, block generation speed, etc.

These three groups of features correspond to the three types of information fed to the estimation function F in Section 3. Transaction features are available from the submitted transaction itself, but future network features and mempool states are not known at submission time. Such features are desirable: if we knew how many transactions future blocks would contain, how fast future blocks would be generated, and how competitive the submitted transaction would be in future mempools, we could predict the confirmation fee more accurately. Consequently, the main idea of FENN is to predict network features and mempool states from historical state sequences using sequence learning models. Finally, we combine the three groups of features to produce the estimate.
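To make the idea of a mempool state concrete, here is a minimal sketch of summarizing the feerates of unconfirmed transactions as a bucketed distribution. The bucket boundaries, the sat/vB unit, and the helper names `feerate_histogram` and `share_outbidding` are illustrative assumptions, not the encoding used by FENN.

```python
from bisect import bisect_right

def feerate_histogram(feerates, bin_edges):
    """Count unconfirmed transactions per feerate bucket.

    feerates:  feerates of transactions currently in the mempool
               (assumed here to be in sat/vB).
    bin_edges: ascending bucket boundaries (hypothetical values below).
    Returns len(bin_edges) + 1 counts; the last bucket is open-ended.
    """
    counts = [0] * (len(bin_edges) + 1)
    for rate in feerates:
        # bisect_right gives the index of the bucket that contains `rate`.
        counts[bisect_right(bin_edges, rate)] += 1
    return counts

def share_outbidding(feerates, my_feerate):
    """Fraction of mempool transactions paying strictly more than my_feerate."""
    if not feerates:
        return 0.0
    return sum(1 for r in feerates if r > my_feerate) / len(feerates)

# Toy mempool snapshot (made-up numbers, for illustration only).
mempool = [1.0, 2.5, 3.0, 8.0, 12.0, 40.0]
edges = [2, 5, 10, 20]
hist = feerate_histogram(mempool, edges)
competition = share_outbidding(mempool, 5.0)
```

A histogram like `hist` is one possible fixed-length summary of mempool competition; a sequence of such snapshots could then be fed to a sequence model.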

The prediction procedure can be formulated based on its data sources:

7.1 Estimation procedure

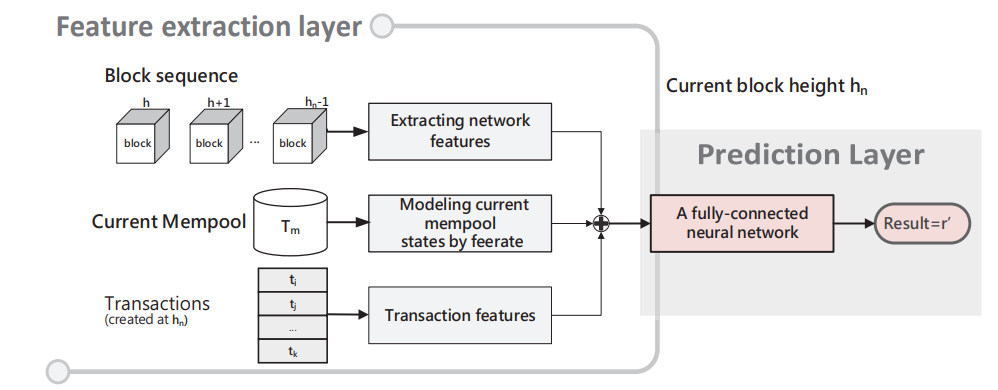

The estimation framework can be divided into two layers, one feature extraction layer to extract patterns from network features, mempool states and the submitted transaction itself, and one prediction layer to analyze the relationship between transaction fee and the extracted features. Fig. 4 shows the framework.

7.1.1 Feature extraction layer

The feature extraction layer has three parts. Besides modelling the submitted transaction itself, it predicts the future characteristics of block states and models the competition among unconfirmed transactions in the mempool.

1. Transaction features contain information on the transaction that has been submitted and is awaiting confirmation. We pick features that we believe may affect a transaction’s validation and confirmation. The transaction vector contains:

– number of inputs, number of outputs: When confirming a transaction, miners need to look up the source transactions that the new transaction’s inputs point to, so the number of inputs and outputs affects verification complexity.

– transaction version, transaction size and weight: We use both the raw transaction size and the transaction weight to characterize transactions.

– transaction first-seen time, confirmation timestamp and confirmation block height: The first-seen time is the time at which a transaction is first observed. Because it is difficult to determine the precise submission time of a historical transaction, we use the publicly available first-seen time.
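The transaction features listed above can be collected into a flat numeric vector. The sketch below is one possible encoding; the `Transaction` class, its field names, and the `waiting_seconds` helper are hypothetical, and any scaling or normalization is left out because it is not specified here.

```python
from dataclasses import dataclass, astuple

@dataclass
class Transaction:
    # Field names are illustrative; the order mirrors the list above.
    n_inputs: int
    n_outputs: int
    version: int
    size_bytes: int
    weight: int
    first_seen: int        # Unix time at which the transaction was first observed
    confirmed_at: int      # Unix timestamp of the confirming block
    confirmed_height: int  # height of the confirming block

def transaction_vector(tx):
    """Flatten a transaction into floats, in field-declaration order."""
    return [float(value) for value in astuple(tx)]

def waiting_seconds(tx):
    """Observed delay between first-seen time and confirmation."""
    return tx.confirmed_at - tx.first_seen

# Made-up historical transaction, for illustration only.
tx = Transaction(2, 2, 2, 225, 900, 1_600_000_000, 1_600_001_200, 650_000)
vec = transaction_vector(tx)
delay = waiting_seconds(tx)
```

For a historical transaction, the gap between first-seen time and confirmation timestamp gives the observed confirmation delay, which is why the first-seen time is a useful stand-in for the submission time.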


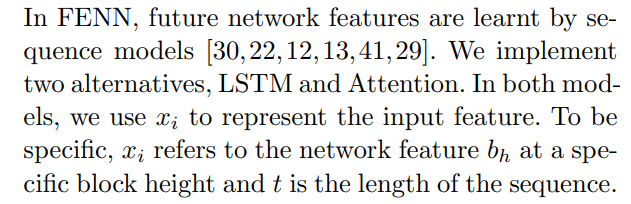

3. Network features are expected to encode future block size and generation speed, which can affect a transaction’s confirmation time. Historical network features are learned as a sequence to predict future network features.

– block size, block weight and transaction count: We use three factors to characterize the size of a block, namely, the overall size of transactions (Bytes), the overall weight of transactions (Weight) and the transaction count in a block.

– difficulty: It reflects the mining difficulty in the Bitcoin system, which is tuned to maintain an average 10-minute block frequency.

– block time: The mining time of this block. It reveals the block generation speed.

– average feerate in block: The average feerate of all the transactions in the block. This indicator is designed to reveal the feerate trend in continuous blocks.
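Learning network features "as a sequence" usually means turning the block history into fixed-length windows. The sketch below shows one way to do that, assuming blocks arrive as plain dictionaries; the dictionary keys, the window length, and the helper names are hypothetical choices for illustration.

```python
def block_features(block):
    """Per-block feature row, in the order of the list above.

    `block` is a plain dict with a hypothetical schema.
    """
    return [
        float(block["size"]),         # overall size of transactions (bytes)
        float(block["weight"]),       # overall weight of transactions
        float(block["tx_count"]),     # number of transactions in the block
        float(block["difficulty"]),   # mining difficulty
        float(block["block_time"]),   # mining time of the block
        float(block["avg_feerate"]),  # average feerate of its transactions
    ]

def sliding_windows(blocks, window):
    """Pair each run of `window` consecutive blocks with the block after it."""
    rows = [block_features(b) for b in blocks]
    return [
        (rows[i:i + window], rows[i + window])
        for i in range(len(rows) - window)
    ]

# Five made-up blocks, for illustration only.
history = [
    {"size": 1_000_000 + k, "weight": 3_990_000, "tx_count": 2000 + k,
     "difficulty": 1.9e13, "block_time": 600 + 10 * k, "avg_feerate": 20 + k}
    for k in range(5)
]
samples = sliding_windows(history, window=3)
```

Each sample pairs a window of past blocks with the next block's features, which is the shape of training data a sequence model needs in order to predict future network features.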


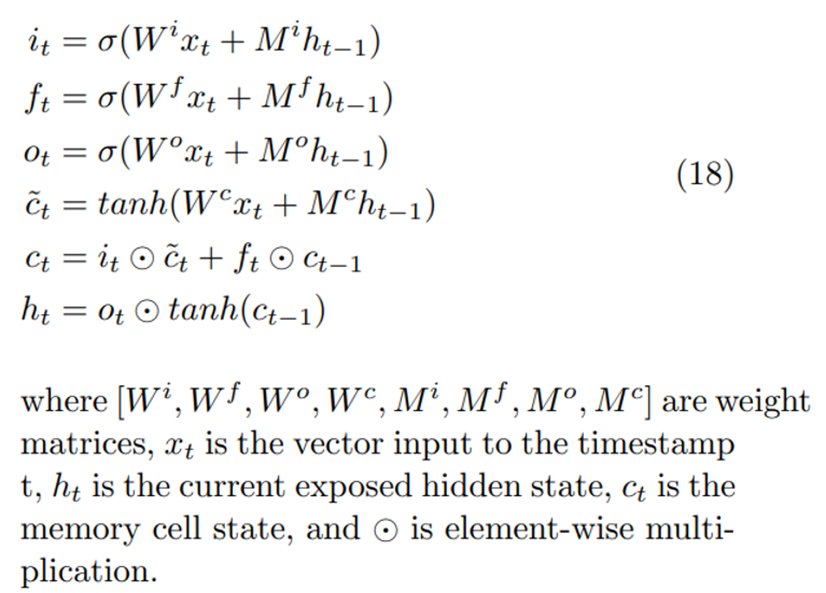

Approach 1: LSTM [16] extracts patterns by aggregating information token by token in sequential order and summarizes the sequence into a context vector. Specifically, at each time step, the LSTM maintains a hidden vector h and a memory vector c, which are responsible for state updates and output prediction [18]. In our models, the final state is used as the pattern extracted from the sequence:


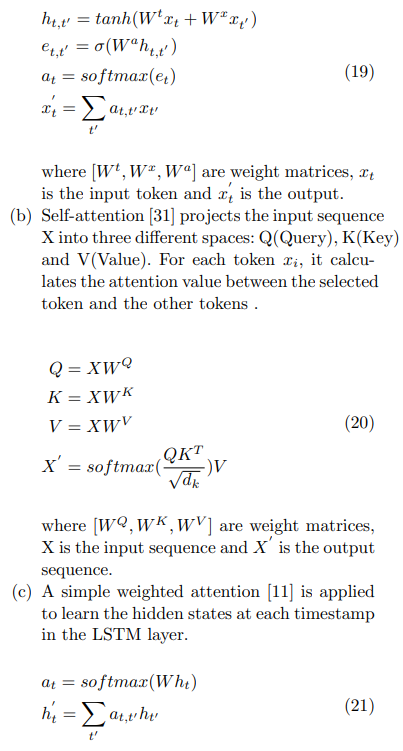
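As a rough illustration of how an LSTM folds a sequence into a final hidden vector, here is a minimal pure-Python sketch of the standard LSTM cell update. It assumes the textbook gate formulation; the parameter layout (`params` mapping a gate name to a weight matrix and bias acting on the concatenation of h and x) is a hypothetical convention, and a real model would use a deep learning library with trained weights.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def matvec(W, v):
    """Multiply matrix W (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def lstm_step(x, h, c, params):
    """One standard LSTM update; params[name] = (W, b), W acts on [h; x]."""
    z = h + x  # list concatenation of previous hidden state and input

    def gate(name, activation):
        W, b = params[name]
        return [activation(a + bias) for a, bias in zip(matvec(W, z), b)]

    f = gate("forget", sigmoid)
    i = gate("input", sigmoid)
    o = gate("output", sigmoid)
    g = gate("candidate", math.tanh)
    c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
    h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
    return h_new, c_new

def encode_sequence(xs, params, hidden_size):
    """Run the cell over the whole sequence and return the final hidden state."""
    h = [0.0] * hidden_size
    c = [0.0] * hidden_size
    for x in xs:
        h, c = lstm_step(x, h, c, params)
    return h

# Toy parameters: hidden size 1, input size 2, all weights zero.
names = ("forget", "input", "output", "candidate")
zero_params = {n: ([[0.0, 0.0, 0.0]], [0.0]) for n in names}
context = encode_sequence([[1.0, 2.0], [3.0, 4.0]], zero_params, hidden_size=1)

# Same weights, but a candidate bias of 1.0, applied to a single step.
biased = dict(zero_params, candidate=([[0.0, 0.0, 0.0]], [1.0]))
one_step = encode_sequence([[1.0, 2.0]], biased, hidden_size=1)
```

With all-zero parameters the candidate value is zero, so the memory and hidden state stay at zero; the biased variant shows a non-trivial single update.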

Approach 2: Attention is another popular technique for processing time series. It mimics the cognitive process of selectively concentrating on different parts of the input. In other words, it returns a new representation vector that reflects the importance of each position in the sequence. Three state-of-the-art attention modules are applied below:

(a) Additive attention [3] computes the compatibility function using a feed-forward network with a single hidden layer.


where W is a weight matrix, and h denotes the hidden states from the preceding LSTM processing stage.
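The following sketch illustrates additive attention over a sequence of LSTM hidden states. It assumes a common formulation in which each state is scored as v · tanh(W h_t), the scores are normalized with a softmax, and the output is the weighted sum of the states. The scoring vector `v` and the absence of a bias term are assumptions; the exact parameterization used in FENN may differ.

```python
import math

def matvec(W, v):
    """Multiply matrix W (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def additive_attention(hidden_states, W, v):
    """Score each hidden state with a one-hidden-layer network, then pool.

    score_t = v . tanh(W h_t)   (assumed form; v is a hypothetical name)
    Returns (context vector, attention weights).
    """
    scores = [
        sum(vk * math.tanh(a) for vk, a in zip(v, matvec(W, h)))
        for h in hidden_states
    ]
    weights = softmax(scores)
    dim = len(hidden_states[0])
    context = [
        sum(w * h[k] for w, h in zip(weights, hidden_states))
        for k in range(dim)
    ]
    return context, weights

# Two toy hidden states; zero weights give uniform attention.
states = [[1.0, 0.0], [0.0, 1.0]]
ctx, attn = additive_attention(states, W=[[0.0, 0.0], [0.0, 0.0]], v=[1.0, 1.0])

# A non-zero W makes the first state score higher than the second.
ctx2, attn2 = additive_attention(states, W=[[2.0, 0.0], [0.0, -2.0]], v=[1.0, 1.0])
```

With zero weights every position receives the same score, so the context vector is the plain average of the hidden states; learned weights shift the mass toward the positions that matter most.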

7.1.2 Prediction layer

After aggregating the outputs of the feature extraction layer, FENN feeds them into a fully connected neural network. By learning the relationship among historical block information, mempool data, and transaction details, FENN can provide a specific estimated feerate for each transaction. A test instance for a transaction to be estimated consists of three parts: the block sequence, the current mempool states and the transaction itself.
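A prediction layer of this kind can be sketched as concatenating the three feature vectors and passing them through fully connected layers. The layer sizes, the ReLU activation, and the names `dense` and `predict_feerate` are assumptions made for illustration; the weights below are made up rather than trained.

```python
def relu(x):
    return max(0.0, x)

def dense(x, W, b, activation=None):
    """Fully connected layer: activation(W x + b)."""
    out = [sum(w * xi for w, xi in zip(row, x)) + bias for row, bias in zip(W, b)]
    return [activation(o) for o in out] if activation else out

def predict_feerate(block_ctx, mempool_vec, tx_vec, layers):
    """Concatenate the three feature groups and apply the dense stack.

    layers: list of (W, b) pairs; hidden layers use ReLU (an assumption),
    the last layer is linear and produces a single feerate estimate.
    """
    x = block_ctx + mempool_vec + tx_vec  # list concatenation
    for W, b in layers[:-1]:
        x = dense(x, W, b, relu)
    W, b = layers[-1]
    return dense(x, W, b)[0]

# Tiny example with one hidden layer of two units and made-up weights.
layers = [
    ([[1.0, 1.0, 1.0], [-1.0, 0.0, 0.0]], [0.0, 0.0]),  # hidden layer
    ([[0.5, 1.0]], [1.0]),                                # output layer
]
estimate = predict_feerate([1.0], [2.0], [3.0], layers)
```

In a trained model the weights would be fitted on historical transactions whose confirmation outcome is known, so that the output approximates the feerate needed for confirmation.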


:::info Authors:

(1) Limeng Zhang, Swinburne University of Technology, Melbourne, Australia (limengzhang@swin.edu.au);

(2) Rui Zhou, Swinburne University of Technology, Melbourne, Australia (rzhou@swin.edu.au);

(3) Qing Liu, Data61, CSIRO, Hobart, Australia (q.liu@data61.csiro.au);

(4) Chengfei Liu, Swinburne University of Technology, Melbourne, Australia (cliu@swin.edu.au);

(5) M.Ali Babar, The University of Adelaide, Adelaide, Australia (ali.babar@adelaide.edu.au).

:::

:::info This paper is available on arxiv under CC0 1.0 UNIVERSAL license.

:::
