Decentralized AI: Architecture, Protocol Use Cases, and Web3 Applications

Artificial intelligence is no longer an experimental layer in Web3. Today, AI systems actively monitor protocols, detect anomalies, analyze governance proposals, automate operational workflows, and enrich analytics across blockchains. As these systems mature, they increasingly operate alongside decentralized applications that are global, multi-chain, and designed to run continuously without centralized control, a model that depends on reliable underlying Web3 infrastructure.

This creates a structural tension. Most AI systems still rely on centralized cloud platforms that concentrate execution in a few regions and vendors. That concentration often conflicts with how Web3 systems operate at scale. The mismatch has driven growing interest in decentralized AI, particularly among teams building production Web3 applications.

Decentralized AI does not mean training machine learning models on blockchains. Instead, it refers to decentralizing specific layers of the AI stack such as coordination, incentives, data ownership, and execution placement, while keeping computation offchain and production-ready. Understanding decentralized AI correctly requires starting from what real protocols are building today.

Table of Contents

- What Web3 AI Protocols Are Actually Building

- Decentralized AI marketplaces and agent coordination

- Decentralized model competition and training incentives

- Decentralized data ownership for AI

- What decentralized AI actually means

- Decentralized AI architecture in practice

- AI agents as the interface between AI and Web3 systems

- Final thoughts

- Frequently Asked Questions About Decentralized AI

- About OnFinality

What Web3 AI Protocols Are Actually Building

The decentralized AI ecosystem is not a single technology or network. Different protocols focus on decentralizing different parts of the AI lifecycle. Some decentralize how AI services are discovered, others decentralize how models improve, and others focus on decentralizing access to data.

These projects collectively form what is now often called decentralized AI (deAI) infrastructure, even though they solve very different problems within the AI stack.

Decentralized AI use cases in Web3 including monitoring, risk analysis, governance intelligence, analytics, and simulations

Decentralized AI marketplaces and agent coordination

SingularityNET is best understood as a decentralized marketplace for AI services and autonomous agents rather than a system that runs AI onchain. Developers can publish AI capabilities such as models, APIs, or agents, while other applications can discover, compose, and consume these services without relying on a centralized intermediary.

What is decentralized here is coordination, discovery, and incentives. Execution remains offchain, but service composition and access are handled through decentralized mechanisms. This design aligns closely with emerging Web3 AI infrastructure, where intelligence is modular and reusable across protocols.
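To make the split between coordination and execution concrete, here is a minimal Python sketch of capability discovery. It is not SingularityNET's API: `ServiceListing`, `ServiceRegistry`, and the example endpoints are hypothetical, and an in-memory list stands in for what a real marketplace would keep onchain. Only the listing metadata is coordinated; the inference itself would run at the offchain `endpoint`.

```python
from dataclasses import dataclass, field


@dataclass
class ServiceListing:
    """A hypothetical marketplace entry describing an offchain AI service."""

    provider: str
    capability: str       # e.g. "summarize" or "classify"
    endpoint: str         # offchain URL where inference actually runs
    price_per_call: int   # price in hypothetical token base units


@dataclass
class ServiceRegistry:
    """In-memory stand-in for an onchain registry: it stores coordination data only."""

    listings: list[ServiceListing] = field(default_factory=list)

    def publish(self, listing: ServiceListing) -> None:
        """Announce a capability so other applications can find it."""
        self.listings.append(listing)

    def discover(self, capability: str) -> list[ServiceListing]:
        """Return providers offering `capability`, cheapest first."""
        matches = [s for s in self.listings if s.capability == capability]
        return sorted(matches, key=lambda s: s.price_per_call)


registry = ServiceRegistry()
registry.publish(ServiceListing("alice", "summarize", "https://alice.example/infer", 5))
registry.publish(ServiceListing("bob", "summarize", "https://bob.example/infer", 3))
registry.publish(ServiceListing("carol", "classify", "https://carol.example/infer", 2))

best = registry.discover("summarize")[0]
print(best.provider, best.endpoint)
```

A consumer only needs the registry to find and compare providers; swapping one provider for another does not require permission from any central operator.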

This approach enables composable AI agents that can interact across ecosystems without a single provider controlling access to intelligence.

Decentralized model competition and training incentives

Bittensor focuses on decentralizing how AI models are evaluated and improved over time. In traditional machine learning systems, a single organization controls training pipelines and performance evaluation. Bittensor replaces this with an open network where models compete and are rewarded based on usefulness.

Participants contribute models that perform inference or learning tasks. The network continuously ranks outputs and allocates incentives accordingly. Training and inference occur offchain, but coordination and incentives are decentralized.
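The incentive side can be illustrated with a simple proportional split. This sketch assumes each participant has already received a quality score for the round and divides a fixed emission in proportion to those scores. `allocate_rewards` is a hypothetical helper, and Bittensor's actual consensus and weighting mechanism is considerably more involved than this.

```python
def allocate_rewards(scores: dict[str, float], emission: float) -> dict[str, float]:
    """Split a fixed per-round emission in proportion to each participant's score.

    Simplified illustration only; it does not reproduce any real network's consensus.
    """
    # Clamp negative scores to zero so poor outputs earn nothing rather than a penalty
    clamped = {p: max(s, 0.0) for p, s in scores.items()}
    total = sum(clamped.values())
    if total == 0:
        # Nobody produced useful output this round, so nothing is distributed
        return {p: 0.0 for p in scores}
    return {p: emission * s / total for p, s in clamped.items()}


# Hypothetical quality scores produced by evaluating each model's outputs
round_scores = {"model_a": 0.9, "model_b": 0.6, "model_c": 0.0}
rewards = allocate_rewards(round_scores, emission=100.0)
print(rewards)
```

Because rewards depend on relative scores, a participant earns more only by producing outputs rated as more useful than those of its peers.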

This approach represents an important component of decentralized AI infrastructure, where intelligence improves through open competition rather than centralized orchestration.

Decentralized data ownership for AI

High-quality data remains one of the largest constraints in AI development. Most training datasets today are controlled by centralized platforms with limited transparency around consent and access. Vana addresses this issue by decentralizing data ownership itself.

Users contribute data to decentralized data pools while retaining control over how that data can be accessed and used. AI models can request permissioned access without relying on a central custodian. This makes it possible to train AI systems on user-owned data while preserving privacy and incentive alignment.
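A minimal sketch of purpose-scoped consent, assuming a hypothetical `DataPool` that records which uses each contributor has approved. This is not Vana's interface; in a real deployment, grants would be enforced by onchain permissions or cryptographic access control rather than a Python dictionary.

```python
from dataclasses import dataclass, field


@dataclass
class DataPool:
    """Hypothetical user-owned data pool with per-purpose consent."""

    records: dict[str, list[str]] = field(default_factory=dict)  # owner -> contributed items
    grants: dict[str, set[str]] = field(default_factory=dict)    # owner -> approved purposes

    def contribute(self, owner: str, items: list[str], purposes: set[str]) -> None:
        """Add data and record which uses the owner approves."""
        self.records.setdefault(owner, []).extend(items)
        self.grants[owner] = set(purposes)

    def revoke(self, owner: str, purpose: str) -> None:
        """Owners can withdraw consent for a purpose at any time."""
        self.grants.get(owner, set()).discard(purpose)

    def request_access(self, purpose: str) -> list[str]:
        """Release only the items whose owners approved this purpose."""
        released: list[str] = []
        for owner, items in self.records.items():
            if purpose in self.grants.get(owner, set()):
                released.extend(items)
        return released


pool = DataPool()
pool.contribute("user_1", ["doc_a", "doc_b"], {"model-training"})
pool.contribute("user_2", ["doc_c"], {"analytics"})
print(pool.request_access("model-training"))  # ['doc_a', 'doc_b']
pool.revoke("user_1", "model-training")
print(pool.request_access("model-training"))  # []
```

The key design point is that the access decision is made per owner and per purpose at request time, so revoking consent takes effect on the next request without a custodian's involvement.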

Data coordination is a foundational layer of deAI infrastructure, and decentralizing it unlocks new AI development models that centralized platforms struggle to support.

What decentralized AI actually means

Viewed through these protocol use cases, decentralized AI can be defined precisely. It refers to AI systems where one or more layers of the AI stack are decentralized using cryptoeconomic coordination, while computation itself remains offchain.

The decentralized layers may include data ownership, model evaluation, service discovery, agent coordination, or execution placement across distributed compute providers. Blockchains act as coordination layers rather than execution environments.

This framing is essential for understanding decentralized AI architecture in production systems.

Decentralized AI architecture in practice

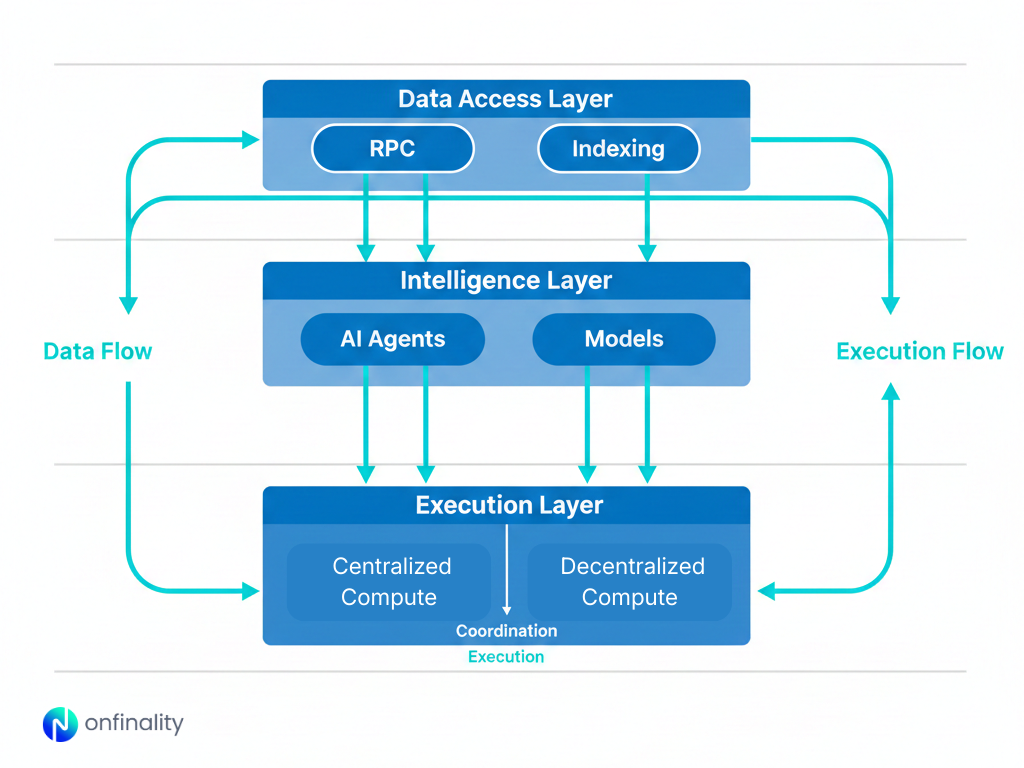

In real deployments, decentralized AI architecture separates concerns across three layers.

The data access layer provides reliable access to onchain state and historical data through managed RPC endpoints and indexing services. This layer is latency-sensitive and forms the foundation of modern Web3 AI infrastructure.
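As an illustration of the data access layer, the sketch below encodes a standard Ethereum JSON-RPC `eth_blockNumber` call and decodes its hex-encoded result. The `rpc_url` argument is a placeholder for whichever managed endpoint you use, and the helper names are hypothetical; the example at the end parses a canned response so it runs without a network connection.

```python
import json
import urllib.request


def build_request(method: str, params: list, request_id: int = 1) -> bytes:
    """Encode a JSON-RPC 2.0 request body for an Ethereum-compatible endpoint."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}
    ).encode("utf-8")


def parse_block_number(response_body: bytes) -> int:
    """Decode an eth_blockNumber response; the result is a hex-encoded quantity."""
    payload = json.loads(response_body)
    if "error" in payload:
        raise RuntimeError(payload["error"])
    return int(payload["result"], 16)


def fetch_block_number(rpc_url: str, timeout: float = 5.0) -> int:
    """Query a live endpoint; rpc_url is a placeholder for your RPC provider's URL."""
    req = urllib.request.Request(
        rpc_url,
        data=build_request("eth_blockNumber", []),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return parse_block_number(resp.read())


# Offline check with a canned response instead of a network call
sample = b'{"jsonrpc":"2.0","id":1,"result":"0x10d4f"}'
print(parse_block_number(sample))  # 68943
```

Because every AI agent downstream depends on calls like this, timeouts and error handling at this layer directly shape how responsive and reliable the intelligence layer can be.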

The intelligence layer consists of AI agents and models that analyze data, generate insights, and support decisions. These agents power monitoring, analytics, simulations, and automation.
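For the intelligence layer, even a simple statistical rule can serve as a first monitoring model. This sketch flags a new observation whose z-score against recent history exceeds a threshold. The threshold of 3 and the sample volumes are illustrative assumptions, and production agents would typically use richer models.

```python
from statistics import mean, pstdev


def is_anomalous(history: list[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `threshold` standard deviations from the mean.

    A z-score rule is a deliberately simple stand-in for a real monitoring model.
    """
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        return latest != mu  # any change from a perfectly flat series is unusual
    return abs(latest - mu) / sigma > threshold


# Hypothetical hourly transfer volumes for a liquidity pool
volumes = [100.0, 104.0, 98.0, 101.0, 97.0, 103.0]
print(is_anomalous(volumes, 102.0))  # False
print(is_anomalous(volumes, 250.0))  # True
```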

The execution layer runs AI workloads offchain. Depending on workload requirements, execution may be centralized, decentralized, or hybrid. Decentralized execution works particularly well for batch inference, simulations, analytics, and background agents that benefit from elastic capacity.

Together, these layers form a practical and scalable decentralized AI architecture.

AI agents as the interface between AI and Web3 systems

AI agents are the most visible implementation of decentralized AI today. An agent continuously observes onchain and offchain data, runs inference or reasoning, and triggers actions such as alerts, reports, governance recommendations, or automated transactions.
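The observe, infer, act cycle can be expressed as a small function with pluggable steps. The sketch below runs a single iteration; `observe_pool`, `infer_risk`, and the 30% TVL-drop rule are hypothetical placeholders for real data sources, models, and action handlers.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Action:
    kind: str     # e.g. "alert", "report", "transaction"
    detail: str


def run_agent_step(
    observe: Callable[[], dict],
    infer: Callable[[dict], Optional[Action]],
    act: Callable[[Action], None],
) -> Optional[Action]:
    """Run one observe -> infer -> act iteration; a real agent repeats this on a schedule."""
    observation = observe()        # onchain and offchain data, e.g. from RPC or an indexer
    action = infer(observation)    # model inference or rule-based reasoning
    if action is not None:
        act(action)                # side effect: send an alert, file a report, submit a tx
    return action


# Hypothetical components for a TVL monitor
def observe_pool() -> dict:
    return {"pool": "ETH/USDC", "tvl_change_pct": -42.0}


def infer_risk(obs: dict) -> Optional[Action]:
    if obs["tvl_change_pct"] < -30.0:
        return Action("alert", f"{obs['pool']} TVL fell {abs(obs['tvl_change_pct'])}%")
    return None


outbox: list[Action] = []
result = run_agent_step(observe_pool, infer_risk, outbox.append)
print(result)
```

Keeping the three steps as separate callables is what lets each one move independently, for example swapping the inference step onto a different execution backend without touching data access.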

Decentralized AI protocols enhance agents by enabling marketplaces for agent capabilities, incentivizing high-performing models, supporting training on decentralized data sources, and allowing execution to shift across distributed compute networks. In practice, most agents rely on hybrid execution models that balance reliability with flexibility.

AI agents represent the operational layer where deAI infrastructure directly meets Web3 applications.

Decentralized AI use cases today

Today, decentralized AI is already used for protocol monitoring, anomaly detection, risk analysis for DeFi systems, governance intelligence, simulations and stress testing, analytics enrichment, and autonomous operational agents.

These use cases benefit from distributed execution and reduced dependency risk without requiring ultra-low latency. As a result, they are well-suited to decentralized execution models within broader Web3 AI stacks.

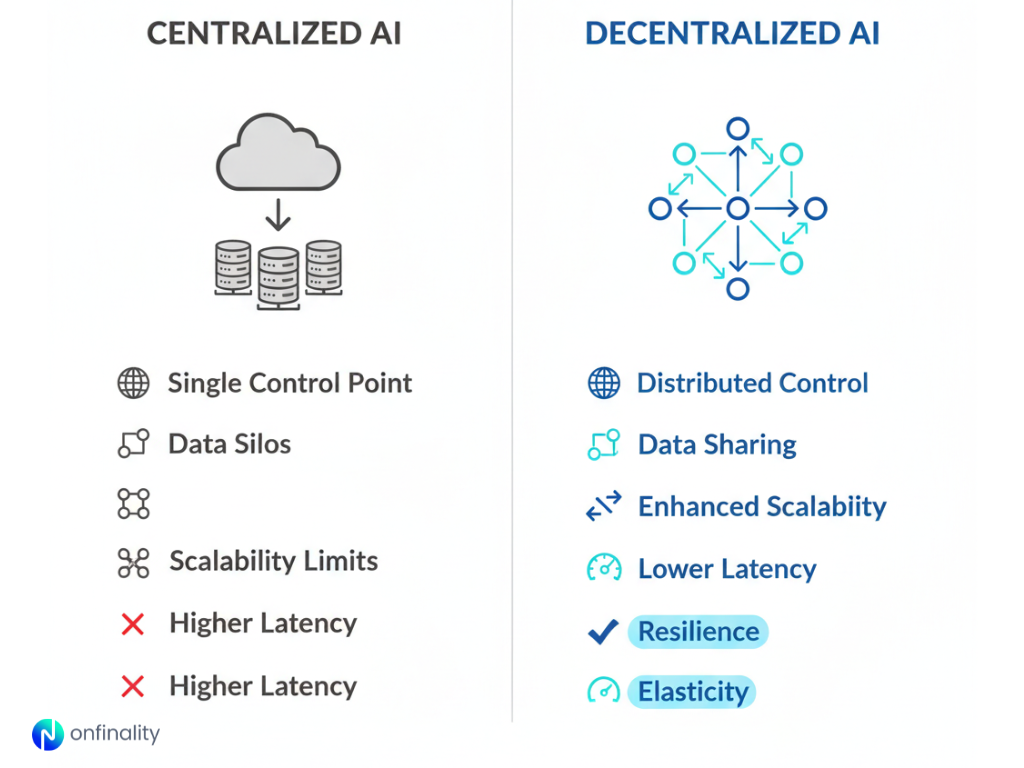

Centralized AI vs decentralized AI in production

Centralized AI and decentralized AI solve different problems. Centralized AI remains optimal for ultra-low latency inference and tightly coupled user interfaces. Decentralized AI performs best for background agents, analytics, simulations, and workloads where elasticity and resilience matter more than strict latency guarantees.

Most production systems combine both approaches, selecting execution models based on workload characteristics rather than ideology.
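One way to encode workload-based selection is a rule-of-thumb router. This sketch uses the criteria named in this article (latency budget, user-facing status, data residency). The `Workload` fields and the 500 ms cutoff are assumptions chosen for illustration, not recommended values.

```python
from dataclasses import dataclass
from enum import Enum


class Placement(Enum):
    CENTRALIZED = "centralized"
    DECENTRALIZED = "decentralized"


@dataclass
class Workload:
    name: str
    max_latency_ms: int            # latency budget the caller can tolerate
    user_facing: bool              # tightly coupled to a live user interface
    data_residency_required: bool  # regulated data that must stay in specific regions


def choose_placement(w: Workload, latency_cutoff_ms: int = 500) -> Placement:
    """Rule-of-thumb router; the 500 ms cutoff is an illustrative assumption."""
    if w.data_residency_required or w.user_facing:
        return Placement.CENTRALIZED
    if w.max_latency_ms < latency_cutoff_ms:
        return Placement.CENTRALIZED
    # Background, batch, and analytics work can use elastic decentralized capacity
    return Placement.DECENTRALIZED


jobs = [
    Workload("chat-assistant", 200, True, False),
    Workload("nightly-risk-report", 3_600_000, False, False),
    Workload("kyc-screening", 60_000, False, True),
]
for job in jobs:
    print(job.name, choose_placement(job).value)
```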

Adoption patterns and limitations

Teams typically adopt decentralized AI incrementally. They begin with non-critical workloads such as analytics or batch inference, then expand to continuous monitoring and automation once reliability and cost benefits are validated. Centralized fallbacks remain in place to manage operational risk.
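The fallback pattern can be sketched as a wrapper that tries a decentralized backend first and reverts to a centralized one on failure. `primary` and `fallback` are hypothetical callables standing in for real execution clients. Returning the backend label makes it easy to measure how often the fallback fires while reliability is being validated.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_fallback(
    primary: Callable[[], T],
    fallback: Callable[[], T],
    retries: int = 1,
) -> tuple[T, str]:
    """Try the decentralized backend up to retries + 1 times, then use the centralized one.

    Returns the result together with the label of the backend that produced it.
    """
    for _ in range(retries + 1):
        try:
            return primary(), "decentralized"
        except Exception:
            # In practice, catch only the errors your execution client actually raises
            continue
    return fallback(), "centralized"


calls = {"n": 0}


def flaky_provider() -> str:
    """Hypothetical decentralized backend that is currently unavailable."""
    calls["n"] += 1
    raise ConnectionError("provider unavailable")


result, source = run_with_fallback(flaky_provider, lambda: "ok", retries=2)
print(result, source, calls["n"])
```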

Decentralized AI is not suitable for every use case. Real-time user-facing inference, highly stateful systems, and regulated workloads with strict data residency requirements often remain centralized.

Final thoughts

Decentralized AI is not about running machine learning on blockchains. It is about decentralizing coordination, incentives, and access across the AI stack while keeping execution practical and offchain.

In 2026, the strongest Web3 architectures combine reliable data access, AI agents as intelligence layers, and a mix of centralized and decentralized execution. For teams building at scale, decentralized AI is now a core infrastructure consideration rather than a theoretical concept.

Frequently Asked Questions About Decentralized AI

What is decentralized AI?

Decentralized AI refers to AI systems where coordination, incentives, data ownership, or execution placement are decentralized, while model training and inference remain offchain. It enables scalable and resilient AI for Web3 applications.

How is decentralized AI different from centralized AI?

Centralized AI relies on a single provider for execution and coordination. Decentralized AI distributes parts of the AI stack across networks, reducing dependency risk and improving flexibility for non-latency-critical workloads.

Are AI agents part of decentralized AI?

Yes. AI agents are a primary way decentralized AI is used in practice. They consume blockchain data, run inference offchain, and execute actions using centralized, decentralized, or hybrid infrastructure.

About OnFinality

OnFinality is a blockchain infrastructure platform that serves hundreds of billions of API requests monthly across more than 130 networks, including Avalanche, BNB Chain, Cosmos, Polkadot, Ethereum, and Polygon. It provides scalable APIs, RPC endpoints, node hosting, and indexing tools to help developers launch and grow blockchain networks efficiently. OnFinality’s mission is to make Web3 infrastructure effortless so developers can focus on building the future of decentralized applications.

