Why I Built Allos to Decouple AI Agents From LLM Vendors

Introducing Allos: A Python SDK for building AI agents that can switch between OpenAI, Anthropic, and more with a single command.

The Frustration That Sparked a Project

If you've spent any time building with Large Language Models, you've probably felt the same frustration I did. You start a project with, say, OpenAI's API. Your agent is working perfectly. Then, Anthropic releases a new Claude model that's faster, cheaper, or better at reasoning for your specific use case.

What do you do?

The painful reality is that you're facing a significant rewrite. The tool-calling formats are different. The message structures are different. The entire client library is different. You're locked in.
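To make the mismatch concrete, here is a minimal sketch of how a single tool definition has to be reshaped for each vendor. The two output shapes follow the tool formats of OpenAI's Chat Completions API and Anthropic's Messages API. The neutral `ToolSpec` dataclass and the `to_openai` / `to_anthropic` helpers are hypothetical names used for illustration and are not part of Allos.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class ToolSpec:
    """Hypothetical vendor-neutral tool description (illustration only)."""
    name: str
    description: str
    parameters: dict[str, Any] = field(default_factory=dict)  # JSON Schema


def to_openai(spec: ToolSpec) -> dict[str, Any]:
    # OpenAI Chat Completions nests the definition under a "function" key.
    return {
        "type": "function",
        "function": {
            "name": spec.name,
            "description": spec.description,
            "parameters": spec.parameters,
        },
    }


def to_anthropic(spec: ToolSpec) -> dict[str, Any]:
    # Anthropic Messages keeps it flat and names the schema "input_schema".
    return {
        "name": spec.name,
        "description": spec.description,
        "input_schema": spec.parameters,
    }


read_file = ToolSpec(
    name="read_file",
    description="Read a UTF-8 text file and return its contents.",
    parameters={
        "type": "object",
        "properties": {"path": {"type": "string"}},
        "required": ["path"],
    },
)

# The JSON Schema is identical in both payloads; only the envelope differs.
print(to_openai(read_file))
print(to_anthropic(read_file))
```

The same kind of gap shows up on the way back: OpenAI returns tool calls on the assistant message with arguments encoded as a JSON string, while Anthropic returns `tool_use` content blocks whose input is already a parsed object. Every one of these differences is code you would otherwise rewrite when switching.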

I found myself wrestling with complex frameworks that promised flexibility but delivered a mountain of boilerplate, or simple frameworks that chained me to a single provider. I spent more time fighting abstractions than actually building my agent.

I thought: "There has to be a better way."

That's why I built Allos.

Introducing Allos: Freedom to Choose

Today, I'm launching Allos v0.0.1, an open-source, MIT-licensed agentic SDK for Python that I built to solve this exact problem.

Allos (from the Greek ἄλλος, meaning "other" or "different") is built on a single philosophy: developers deserve the freedom to choose the best model for each job, without penalty.

With Allos, you write your agent's logic once. Switching the underlying "brain" from GPT-4o to Claude 3.5 Sonnet is as simple as changing a single command-line flag. No code changes. No headaches.

See It in Action: 0 to a Full App in Under 4 Minutes

Talk is cheap. Here's a video of Allos building a complete, multi-file FastAPI application—including a database, tests, and a README—from a single prompt, and then switching providers on the fly.

https://youtu.be/rWc-8awcAJo?embedable=true

How It Works: Simplicity by Design

Allos isn't just another heavy abstraction layer. It's a production-ready toolkit designed with a fanatical focus on developer experience.

A Polished and Powerful CLI

The allos CLI is the heart of the experience. You can go from an idea to a running application in minutes.

# Create a complete application from a single prompt
allos "Create a REST API for a todo app with FastAPI, SQLite, CRUD operations, and tests."


Truly Provider Agnostic

This is the core promise. Use the best model for every job.

# Start with OpenAI
allos --provider openai "Refactor this code for readability."

# Switch to Anthropic for deeper reasoning
allos --provider anthropic --session refactor.json "Continue the analysis."

# Run locally with Ollama (coming soon!)
allos --provider ollama "Same task, offline."
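One way to picture what makes a mid-conversation provider switch possible: if the history is stored in a provider-neutral form, any backend can pick it up. The sketch below illustrates that general idea only. It is not Allos's actual session format, and the `Session` class, its fields, and the file name are all assumptions.

```python
import json
import tempfile
from dataclasses import asdict, dataclass, field
from pathlib import Path


@dataclass
class Session:
    """Hypothetical provider-neutral conversation record (illustration only)."""
    provider: str
    messages: list[dict[str, str]] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})

    def save(self, path: Path) -> None:
        path.write_text(json.dumps(asdict(self), indent=2), encoding="utf-8")

    @classmethod
    def load(cls, path: Path) -> "Session":
        return cls(**json.loads(path.read_text(encoding="utf-8")))


# Start a session with one provider and persist it to disk.
path = Path(tempfile.mkdtemp()) / "refactor_sketch.json"
first = Session(provider="openai")
first.add("user", "Refactor this code for readability.")
first.add("assistant", "Here is a first pass...")
first.save(path)

# Reload it later and continue with a different provider; the history is intact.
resumed = Session.load(path)
resumed.provider = "anthropic"
resumed.add("user", "Continue the analysis.")
print(resumed.provider, len(resumed.messages))
```

Because nothing in the stored messages is vendor-specific, translating them into a given provider's wire format can happen at the last moment, inside that provider's adapter.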


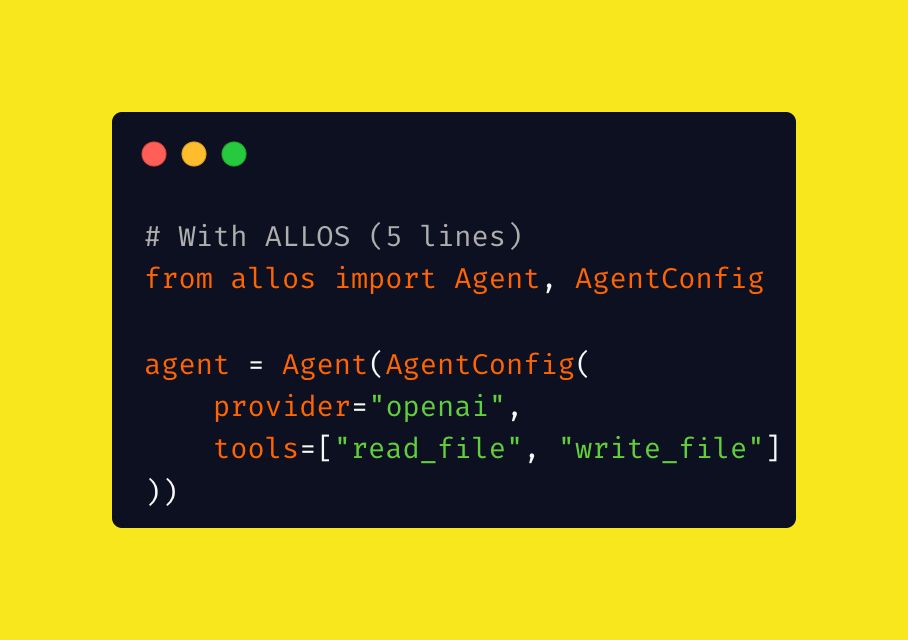

Less Code, More Power

Frameworks should get out of your way. Allos is designed to be minimal and intuitive.
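The pattern behind a provider-agnostic agent fits in a few lines. The sketch below is a generic illustration, not Allos's actual API: `Provider`, `EchoProvider`, `ShoutProvider`, `PROVIDERS`, and `Agent` are hypothetical names, and the two stub providers stand in for real vendor clients so the example runs offline.

```python
from typing import Protocol


class Provider(Protocol):
    """Hypothetical interface every vendor adapter would implement."""

    def complete(self, messages: list[dict[str, str]]) -> str: ...


class EchoProvider:
    # Stub standing in for one vendor's client.
    def complete(self, messages: list[dict[str, str]]) -> str:
        return f"echo: {messages[-1]['content']}"


class ShoutProvider:
    # Stub standing in for a second vendor's client.
    def complete(self, messages: list[dict[str, str]]) -> str:
        return messages[-1]["content"].upper()


# The only place that knows which concrete adapters exist.
PROVIDERS: dict[str, type] = {"echo": EchoProvider, "shout": ShoutProvider}


class Agent:
    """Agent logic written once against the Provider interface."""

    def __init__(self, provider_name: str) -> None:
        self.provider: Provider = PROVIDERS[provider_name]()
        self.history: list[dict[str, str]] = []

    def run(self, prompt: str) -> str:
        self.history.append({"role": "user", "content": prompt})
        reply = self.provider.complete(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply


# Same agent code, different backend chosen by a single string.
for name in ("echo", "shout"):
    print(name, "->", Agent(name).run("summarize main.py"))
```

The agent never imports a vendor SDK directly, so adding a backend means writing one adapter class and one registry entry, with no change to the agent loop.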


Key Features in v0.0.1

- Secure Tools: Built-in tools for filesystem I/O and shell execution, with a human-in-the-loop permission system by default.

- Session Management: Never lose your progress. The --session flag saves your entire conversation context.

- Extensible by Design: Creating your own custom tools is as simple as writing a Python class with a @tool decorator.

- Built for Quality: 100% unit test coverage, a full integration suite, and production-ready error handling.
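To show how a decorator-registered tool and a human-in-the-loop permission check can fit together, here is a minimal sketch. It assumes a simple class registry and an approval callback. `TOOL_REGISTRY`, `tool`, `requires_permission`, and `call_tool` are hypothetical names, and Allos's real decorator and permission system may work differently.

```python
from typing import Any, Callable

# Hypothetical registry; not Allos's actual implementation.
TOOL_REGISTRY: dict[str, type] = {}


def tool(cls: type) -> type:
    """Register a tool class under its `name` attribute."""
    TOOL_REGISTRY[cls.name] = cls
    return cls


@tool
class WordCount:
    name = "word_count"
    requires_permission = False  # read-only, safe to run unprompted

    def execute(self, text: str) -> int:
        return len(text.split())


@tool
class DeleteFile:
    name = "delete_file"
    requires_permission = True  # risky tools must be approved by a human

    def execute(self, path: str) -> str:
        # A real tool would remove the file; the sketch only reports intent.
        return f"would delete {path}"


def call_tool(name: str, approve: Callable[[str], bool], **kwargs: Any) -> Any:
    """Run a registered tool, asking `approve` first when the tool is risky."""
    tool_cls = TOOL_REGISTRY[name]
    if tool_cls.requires_permission and not approve(name):
        raise PermissionError(f"user denied {name}")
    return tool_cls().execute(**kwargs)


# In a CLI, `approve` could wrap input(); lambdas keep this example non-interactive.
print(call_tool("word_count", approve=lambda n: False, text="switch providers freely"))
print(call_tool("delete_file", approve=lambda n: True, path="old.log"))
```

Defaulting risky tools to "ask first" means a forgotten configuration step fails safe: the worst case is an extra prompt, not an unreviewed shell command.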

The Roadmap: Building an Open Ecosystem

This MVP with OpenAI and Anthropic support is just the beginning. The public roadmap is driven by what the community needs next:

- 🔌 Ollama Support: For running local models.

- 🌐 Web Tools: Built-in web_search and web_fetch, plus MCP.

- 🧠 More Providers: Google Gemini, Cohere, and others.

- 🤖 More Agentic Frameworks: smolagents, Pydantic AI, and more.

Get Started in 2 Minutes

Step 1: Install

uv pip install "allos-agent-sdk[all]"

Step 2: Configure

export OPENAI_API_KEY="your_key_here"

Step 3: Run!

allos "Create a simple FastAPI app in main.py and tell me how to run it."

Join the Movement

Allos is more than a tool—it's a bet on an open, flexible future for AI development. If you believe developers should be free to choose the best model without vendor lock-in, I'd love your support.

- ⭐ Star on GitHub: https://github.com/Undiluted7027/allos-agent-sdk

- 📖 Read the Docs: https://github.com/Undiluted7027/allos-agent-sdk/tree/main/docs

- 💬 Join the Discussion: Share ideas, ask questions, and help shape the roadmap.

Allos is built in the open, for the community. I'm incredibly proud of this first release and can't wait to see what you build with it. Thanks for reading.
