OpenAI Sees 30% Improvement in ChatGPT Fairness

TL;DR

- OpenAI claims a 30% reduction in ChatGPT political bias, citing internal evaluations using 500 prompts across 100 topics.

- Critics argue the findings lack independent verification, as OpenAI has not released its full methodology or datasets.

- EU AI Act mandates bias detection and third-party audits for high-risk AI systems, raising compliance pressure on OpenAI.

- Despite progress, political neutrality in large models remains unresolved, as interpretations of “fairness” differ across audiences.

OpenAI has unveiled new internal research showing that its latest ChatGPT models (GPT-5 instant and GPT-5 thinking) demonstrate a 30% improvement in fairness when handling politically charged or ideologically sensitive topics.

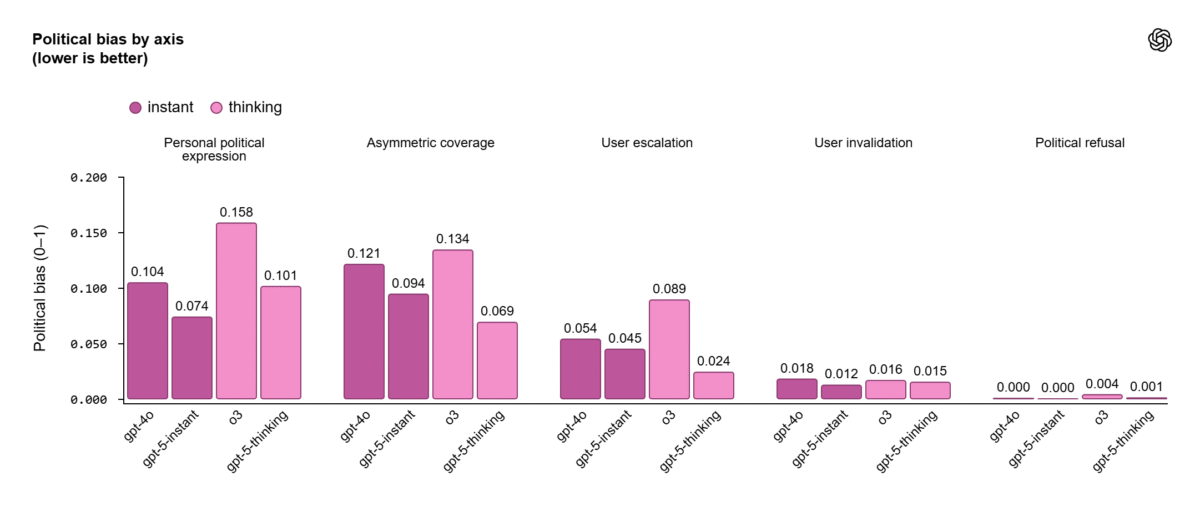

According to the company, the evaluation involved 500 prompts covering 100 different political themes, using a structured framework designed to detect five bias types. These included personal opinions, one-sided framing, and emotionally charged responses. OpenAI’s findings suggest that less than 0.01% of ChatGPT’s real-world outputs display any measurable political bias, based on traffic from millions of user interactions.
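To make the headline figure concrete, here is a minimal Python sketch of how a relative reduction in an aggregate bias score could be computed. OpenAI has not published its rubric, so the function names, the 0-to-1 score scale, the equal weighting of axes, and the sample scores are all hypothetical placeholders, not OpenAI's data or method.

```python
from statistics import mean


def aggregate_bias(scores_by_axis):
    """Average per-axis mean scores (0 = neutral, 1 = strongly biased)."""
    return mean(mean(scores) for scores in scores_by_axis.values())


def relative_reduction(old, new):
    """Fractional drop from old to new, e.g. 0.30 for a 30% reduction."""
    if old <= 0:
        raise ValueError("old score must be positive")
    return (old - new) / old


# Hypothetical per-prompt scores on three of the bias types named above.
# These numbers are invented for illustration only.
older_model = {
    "personal_opinion": [0.20, 0.10],
    "one_sided_framing": [0.30, 0.20],
    "emotional_language": [0.10, 0.30],
}
newer_model = {
    "personal_opinion": [0.14, 0.07],
    "one_sided_framing": [0.21, 0.14],
    "emotional_language": [0.07, 0.21],
}

old_score = aggregate_bias(older_model)
new_score = aggregate_bias(newer_model)
print(f"relative reduction: {relative_reduction(old_score, new_score):.0%}")
```

A relative figure like this says nothing about the absolute level of bias, which is one reason outside researchers want the underlying prompts and rubric.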

The company stated that these results reflect its ongoing mission to make AI systems more neutral and reliable, particularly in conversations involving politics, media, and social identity.

Framework Still Lacks Independent Verification

While the announcement signals progress, experts have raised concerns over the lack of reproducibility in OpenAI’s fairness claims.

The firm has not shared the full dataset, evaluation rubric, or specific prompts used in its internal testing, leaving independent researchers unable to verify whether the 30% drop reflects true neutrality or simply optimized prompt engineering that hides bias under controlled conditions.

GPT‑5 instant and thinking outperform GPT‑4o and o3 across all measured axes.


A Stanford University study earlier this year tested 24 language models from eight companies, scoring them using over 10,000 public ratings. The findings suggested that OpenAI’s earlier models displayed a stronger perceived political tilt compared to competitors like Google, with users across the U.S. political spectrum interpreting the same answers differently based on ideological leanings.

The debate underscores the complexity of measuring political bias in generative models, where even seemingly neutral answers can be read as partisan depending on context, culture, or phrasing.

EU Rules Push for External Bias Audits

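The findings come as Europe’s AI Act begins to set new accountability standards. Under Article 10, providers of high-risk AI systems must examine their training data for possible biases and take measures to detect, prevent, and mitigate them. General-purpose AI (GPAI) models fall under separate transparency and documentation obligations.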

GPAI models trained with more than 10²⁵ floating-point operations (FLOPs) of cumulative compute, a proxy for massive computational power, are presumed to pose systemic risk and must also perform systemic risk assessments, report safety incidents, and document data governance procedures. Noncompliance could lead to fines of up to €35 million or 7% of global turnover.
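As a rough illustration of the compute threshold, the Python sketch below estimates training compute with the common rule of thumb of about 6 FLOPs per parameter per training token and compares the result with 10²⁵. The rule of thumb, the function names, and the example model sizes are assumptions for illustration; the AI Act does not prescribe this estimation method.

```python
# Threshold cited in the article for systemic-risk obligations.
SYSTEMIC_RISK_THRESHOLD_FLOPS = 1e25


def estimate_training_flops(n_params, n_tokens):
    """Approximate training compute as 6 * parameters * tokens (assumed heuristic)."""
    return 6.0 * n_params * n_tokens


def exceeds_threshold(n_params, n_tokens):
    """True if the estimated training compute is above the 1e25 FLOP threshold."""
    return estimate_training_flops(n_params, n_tokens) > SYSTEMIC_RISK_THRESHOLD_FLOPS


# Hypothetical model sizes, not figures for any real system.
examples = [
    ("small model", 7e9, 2e12),    # 7B parameters, 2T tokens
    ("large model", 1e12, 1e13),   # 1T parameters, 10T tokens
]

for name, params, tokens in examples:
    flops = estimate_training_flops(params, tokens)
    status = "above" if exceeds_threshold(params, tokens) else "below"
    print(f"{name}: ~{flops:.1e} FLOPs, {status} threshold")
```

Under these assumptions the smaller model lands around 8.4 × 10²², well under the line, while the larger one reaches roughly 6 × 10²⁵ and would cross it.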

Independent auditors are expected to play a major role in verifying AI model fairness, providing continuous monitoring using both human and AI-based assessments. The European Commission published its General-Purpose AI Code of Practice in July 2025, offering detailed guidance on how GPAI providers like OpenAI can demonstrate compliance.

Balancing Progress with Accountability

Despite its internal optimism, OpenAI remains under growing scrutiny from regulators and academics alike. The company has acknowledged that political and ideological bias remains an open research problem, requiring long-term refinement across data collection, labeling, and reinforcement learning techniques.

In parallel, OpenAI recently met with EU antitrust regulators to raise competition concerns about the dominance of major tech firms, particularly Google, in the AI space. With over 800 million weekly ChatGPT users and a valuation exceeding US$500 billion, OpenAI now sits at the intersection of innovation and regulatory tension.

The post OpenAI Sees 30% Improvement in ChatGPT Fairness appeared first on CoinCentral.
