The Time Series Optimized Transformer: Setting New Standards in Observability

:::info Authors:

(1) Ben Cohen (ben.cohen@datadoghq.com);

(2) Emaad Khwaja (emaad@datadoghq.com);

(3) Kan Wang (kan.wang@datadoghq.com);

(4) Charles Masson (charles.masson@datadoghq.com);

(5) Elise Rame (elise.rame@datadoghq.com);

(6) Youssef Doubli (youssef.doubli@datadoghq.com);

(7) Othmane Abou-Amal (othmane@datadoghq.com).

:::

Table of Links

- Background

- Problem statement

- Model architecture

- Training data

- Results

- Conclusions

- Impact statement

- Future directions

- Contributions

- Acknowledgements and References

Appendix

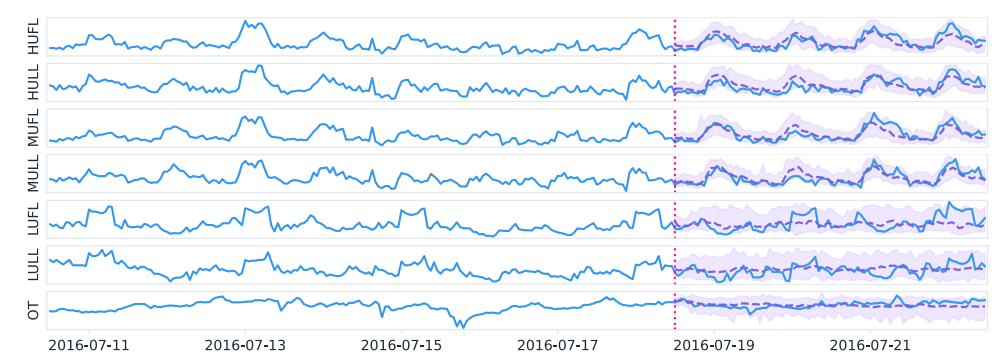

This technical report describes the Time Series Optimized Transformer for Observability (Toto), a new state-of-the-art foundation model for time series forecasting developed by Datadog. In addition to advancing the state of the art on generalized time series benchmarks in domains such as electricity and weather, this model is the first general-purpose time series forecasting foundation model to be specifically tuned for observability metrics.

Toto was trained on a dataset of one trillion time series data points – the largest among all currently published time series foundation models. Alongside publicly available time series datasets, 75% of the data used to train Toto consists of fully anonymous numerical metric data points from the Datadog platform.

In our experiments, Toto outperforms existing time series foundation models on observability data. It does this while also excelling at general-purpose forecasting tasks, achieving state-of-the-art zero-shot performance on multiple open benchmark datasets.

In this report, we detail the following key contributions:

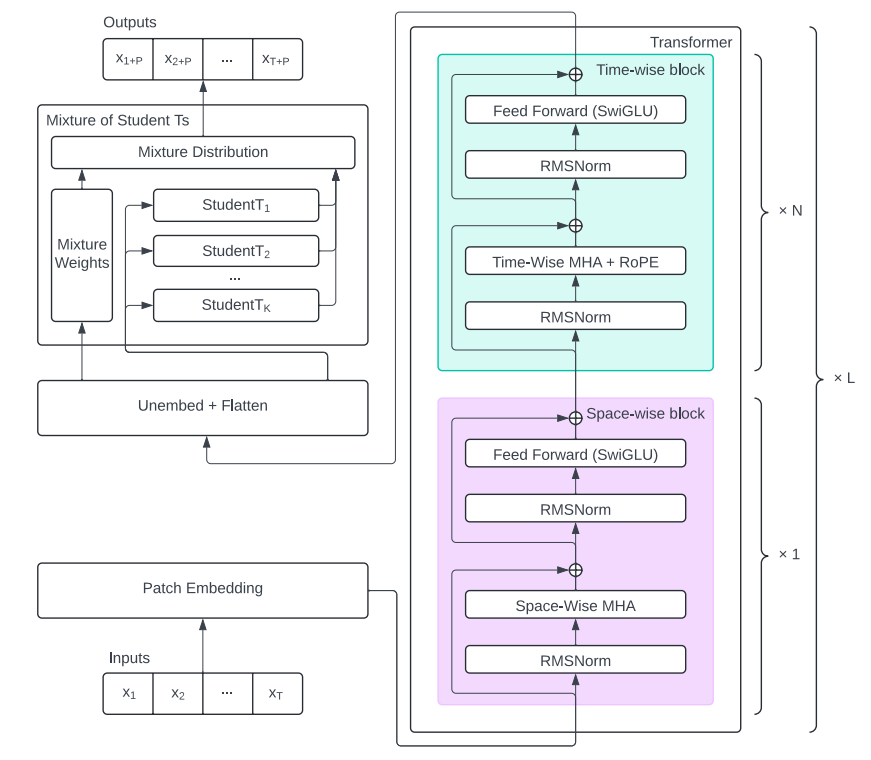

• Proportional factorized space-time attention: We introduce an advanced attention mechanism that allows for efficient grouping of multivariate time series features, reducing computational overhead while maintaining high accuracy.

• Student-T mixture model head: This novel use of a probabilistic model that robustly generalizes Gaussian mixture models enables Toto to more accurately capture the complex dynamics of time series data and provides superior performance over traditional approaches.

• Domain-specific training data: In addition to general multi-domain time series data, Toto is specifically pre-trained on a large-scale dataset of Datadog observability metrics, encompassing unique characteristics not present in open-source datasets. This targeted training ensures enhanced performance in observability metric forecasting.
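To make the Student-T mixture head more concrete, the sketch below computes the quantity such a head would typically be trained to minimize: the negative log-likelihood of an observation under a K-component mixture of Student-t distributions. It assumes each component is parameterized by a weight, location, scale, and degrees of freedom; the function names (`student_t_logpdf`, `mixture_nll`) and this parameterization are illustrative assumptions, not Toto's actual head, whose details are not given here.

```python
import math
from typing import Sequence


def student_t_logpdf(x: float, loc: float, scale: float, df: float) -> float:
    """Log-density of a location-scale Student-t distribution."""
    z = (x - loc) / scale
    return (
        math.lgamma((df + 1.0) / 2.0)
        - math.lgamma(df / 2.0)
        - 0.5 * math.log(df * math.pi)
        - math.log(scale)
        - (df + 1.0) / 2.0 * math.log1p(z * z / df)
    )


def logsumexp(values: Sequence[float]) -> float:
    """Numerically stable log(sum(exp(v)))."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def mixture_nll(
    x: float,
    weights: Sequence[float],
    locs: Sequence[float],
    scales: Sequence[float],
    dfs: Sequence[float],
) -> float:
    """Negative log-likelihood of x under a mixture of Student-t components.

    Assumption: weights are non-negative and sum to 1 (in a neural head they
    would usually come from a softmax over predicted logits).
    """
    component_terms = [
        math.log(w) + student_t_logpdf(x, mu, s, nu)
        for w, mu, s, nu in zip(weights, locs, scales, dfs)
        if w > 0.0
    ]
    return -logsumexp(component_terms)


if __name__ == "__main__":
    # Toy parameters: a narrow "normal operation" component plus a wide,
    # heavy-tailed component (df=2) that keeps the loss bounded on spikes.
    params = dict(weights=[0.9, 0.1], locs=[0.0, 0.0],
                  scales=[1.0, 10.0], dfs=[30.0, 2.0])
    print(mixture_nll(0.0, **params))
    print(mixture_nll(50.0, **params))
```

The heavy-tailed component is the design point: under a single unit Gaussian, an observation 50 scale units away would cost roughly 1,250 nats, while the mixture above keeps it near 10, so rare spikes do not dominate training.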


1 Background

We present Toto, a groundbreaking time series forecasting foundation model developed by Datadog. Toto is specifically designed to handle the complexities of observability data, leveraging a state-of-the-art transformer architecture to deliver unparalleled accuracy and performance. Toto is trained on a massive dataset of diverse time series data, enabling it to excel in zero-shot predictions. This model is tailored to meet the demanding requirements of real-time analysis as well as compute and memory-efficient scalability to very large data volumes, providing robust solutions for high-frequency and high-dimensional data commonly encountered in observability metrics.

1.1 Observability data

The Datadog observability platform collects a vast array of metrics across multiple subdomains, crucial for monitoring and optimizing modern infrastructure and applications. These metrics include infrastructure data such as memory usage, CPU load, disk I/O, and network throughput, as well as application performance indicators like hit counts, error rates, and latency [1]. Additionally, Datadog integrates specific metrics from numerous SaaS products, cloud services, open-source frameworks, and other third-party tools. The platform allows users to apply various time series models to proactively alert on anomalous behavior, leading to a reduction in time to detection (TTD) and time to resolution (TTR) of production incidents [2].

The complexity and diversity of these metrics present significant challenges for time series forecasting. Observability data often requires high time resolution, down to seconds or minutes, and is typically sparse with many zero-inflated metrics. Moreover, these metrics can display extreme dynamic ranges and right-skewed distributions. The dynamic and nonstationary nature of the systems being monitored further complicates the forecasting task, necessitating advanced models that can adapt and perform under these conditions.

1.2 Traditional models

Historically, time series forecasting has relied on classical models such as ARIMA, exponential smoothing, and basic machine learning techniques [3]. While foundational, these models necessitate individual training for each metric, presenting several limitations [4]. The need to develop and maintain separate models for each metric impedes scalability, especially given the extensive range of metrics in observability data. Moreover, these models often fail to generalize across different types of metrics, leading to suboptimal performance on diverse datasets [5, 6]. Continuous retraining and tuning to adapt to evolving data patterns further increase the operational burden. This scaling limitation has hindered the adoption of deep learning–based methods for time series analysis, even as they show promise in terms of accuracy [7].
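To illustrate the per-metric burden described above, the sketch below fits simple exponential smoothing (one of the classical families named in this section) separately to every metric, choosing its smoothing parameter by a grid search on in-sample one-step-ahead error. The helper names and the grid-search fitting procedure are illustrative assumptions, not Datadog's implementation; the point is only that each new series needs its own fit, so fitting cost grows with the number of metrics.

```python
from typing import Dict, List, Sequence, Tuple


def ses_sse(series: Sequence[float], alpha: float) -> float:
    """Sum of squared one-step-ahead errors for simple exponential smoothing."""
    level = series[0]
    sse = 0.0
    for y in series[1:]:
        sse += (y - level) ** 2  # the forecast for this step is the current level
        level = alpha * y + (1.0 - alpha) * level
    return sse


def fit_ses(series: Sequence[float], grid: int = 99) -> Tuple[float, float]:
    """Pick alpha in (0, 1) minimizing in-sample SSE; return (alpha, next forecast)."""
    candidates = [(i + 1) / (grid + 1) for i in range(grid)]
    best_alpha = min(candidates, key=lambda a: ses_sse(series, a))
    level = series[0]
    for y in series[1:]:
        level = best_alpha * y + (1.0 - best_alpha) * level
    return best_alpha, level


def fit_per_metric(metrics: Dict[str, List[float]]) -> Dict[str, Tuple[float, float]]:
    """Classical workflow: one independently fitted model per metric."""
    return {name: fit_ses(values) for name, values in metrics.items()}


if __name__ == "__main__":
    # Hypothetical metric names and toy values.
    metrics = {
        "service.errors": [0.0, 0.0, 0.0, 10.0, 10.0, 10.0, 10.0, 10.0],
        "host.cpu": [40.0, 42.0, 41.0, 43.0, 42.0, 44.0],
    }
    for name, (alpha, forecast) in fit_per_metric(metrics).items():
        print(f"{name}: alpha={alpha:.2f}, next={forecast:.2f}")
```

Each call to `fit_ses` evaluates 99 candidate models over the full history, and this repeats for every metric and every retraining cycle; a brand-new metric with one or two points gives the search almost nothing to work with.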

1.3 Foundation models

Large neural network-based generative models, often referred to as “foundation models,” have revolutionized time series forecasting by enabling accurate predictions on new data not seen during training, known as zero-shot prediction [8]. This capability significantly reduces the need for constant retraining on each specific metric, thus saving considerable time and computational resources. Their architecture supports the parallel processing of vast data volumes, facilitating timely insights essential for maintaining system performance and reliability [9, 10].

Through pretraining on diverse datasets, generative models exhibit strong generalization across various types of time series data. This enhances their robustness and versatility, making them suitable for a wide range of applications. Zero-shot predictions are particularly attractive in the observability domain, where the limitations of traditional methods are felt very acutely. The most common use cases for time series models within an observability platform like Datadog include automated anomaly detection and predictive alerting. It is challenging to scale classical forecasting methods to handle cloud-based applications that can be composed of many ephemeral, dynamically scaling components such as containers, VMs, serverless functions, etc. These entities tend to be both high in cardinality and short-lived in time. This limits the practicality of traditional time series models in two ways:

• First, the high cardinality and volume of data can make fitting individual models to each time series computationally expensive or even intractable. The ability to train a single model and perform inference across a wide range of domains has the potential to dramatically improve the efficiency, and thus the coverage, of an autonomous monitoring system.

• Second, ephemeral infrastructure elements often lack enough historical data to confidently fit a model. In practice, algorithmic alerting systems often require an adaptation period of days or weeks before they can usefully monitor a new metric. However, if the object being monitored is a container with a lifespan measured in minutes or hours, these classical models are unable to adapt quickly enough to be useful. Real-world systems thus often fall back to crude heuristics, such as threshold-based alerts, which rely on the domain knowledge of users. Zero-shot foundation models can enable accurate predictions with much less historical context, by aggregating and interpolating prior information learned from a massive and diverse dataset.

The integration of transformer-based models [11] like Toto into observability data analysis thus promises significant improvements in forecasting accuracy and efficiency. These models offer a robust solution for managing diverse, high-frequency data and delivering zero-shot predictions. With their advanced capabilities, transformer-based models represent a significant leap forward in the field of observability and time series analysis [12–14].

1.4 Recent work

The past several years have seen the rise of transformer-based models as powerful tools for time series forecasting. These models leverage multi-head self-attention mechanisms to capture long-range dependencies and intricate patterns in data.

To address the unique challenges of time series data, recent advancements have introduced various modifications to the attention mechanism. For example, Moirai [15] uses “any-variate” attention to model dependencies across different series simultaneously. Factorized attention mechanisms [16] have been developed to separately capture temporal and spatial (cross-series) interactions, enhancing the ability to understand complex interdependencies. Other models [17, 18] have used cross-channel attention in conjunction with feed-forward networks for mixing in the time dimension. Additionally, causal masking [19] and hierarchical encoding [16] can improve the efficiency and accuracy of predictions in time series contexts.
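A generic factorized space-time attention layer, combined with causal masking, can be sketched as two passes of scaled dot-product attention over a grid of embeddings indexed by (variate, time step): first along time within each variate, with a causal mask so each step only attends to its past, then across variates at each time step. This is a minimal pure-Python illustration under simplifying assumptions (a single head, identity query/key/value projections, no residuals or normalization); the names are hypothetical, and it is not Toto's proportional factorized attention.

```python
import math
from typing import List

Vector = List[float]


def attend(queries: List[Vector], keys: List[Vector], values: List[Vector],
           causal: bool = False) -> List[Vector]:
    """Single-head scaled dot-product attention with identity projections."""
    d = len(queries[0])
    outputs: List[Vector] = []
    for i, q in enumerate(queries):
        # Causal mask: position i only sees keys 0..i (no peeking at the future).
        visible = i + 1 if causal else len(keys)
        scores = [sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d)
                  for j in range(visible)]
        # Numerically stable softmax over the visible positions.
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        outputs.append([sum(w * values[j][k] for j, w in enumerate(weights))
                        for k in range(len(values[0]))])
    return outputs


def factorized_space_time_attention(x: List[List[Vector]]) -> List[List[Vector]]:
    """x[v][t] is the embedding of variate v at time t; output has the same shape."""
    num_vars, num_steps = len(x), len(x[0])
    # Pass 1 (time): each variate attends causally over its own history.
    h = [attend(series, series, series, causal=True) for series in x]
    # Pass 2 (space): at each time step, the variates attend to one another.
    # h[v][t] depends only on steps <= t, so the layer stays causal overall.
    by_time = []
    for t in range(num_steps):
        column = [h[v][t] for v in range(num_vars)]
        by_time.append(attend(column, column, column, causal=False))
    # Transpose back from [t][v] to [v][t].
    return [[by_time[t][v] for t in range(num_steps)] for v in range(num_vars)]


if __name__ == "__main__":
    # 2 variates x 3 time steps, 2-dimensional toy embeddings.
    x = [[[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]],
         [[0.0, 1.0], [1.0, 1.0], [1.0, 0.0]]]
    y = factorized_space_time_attention(x)
    print(len(y), len(y[0]), len(y[0][0]))  # 2 3 2
```

The motivation for factorizing is cost: with V variates and T time steps, full attention over all V·T tokens computes (VT)^2 scores, whereas the two passes compute roughly V·T^2 + T·V^2. For V = 100 and T = 1,000 that is 10^10 versus 1.1 × 10^8 score computations.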

These innovative transformer-based models have demonstrated state-of-the-art performance on benchmark datasets [14], frequently surpassing traditional models in both accuracy and robustness. Their capacity to process high-dimensional data efficiently [20] makes them ideal for applications involving numerous time series metrics with varying characteristics, such as observability.

Even more recently, a number of time series “foundation models” have been released [15, 19, 21–24]. By pre-training on extensive, multi-domain datasets, these large models achieve impressive zero-shot prediction capabilities, significantly reducing the need for constant retraining. This paradigm is appealing for the observability context, where we constantly have new time series to process and frequent retraining is impractical.

2 Problem statement

At Datadog, our time series data encompasses a variety of observability metrics from numerous subdomains. These metrics present several challenges for existing forecasting models:

• High time resolution: Users often require data in increments of seconds or minutes, unlike many publicly available time series datasets, which are sampled hourly or at coarser intervals.

• Sparsity: Metrics such as error counts often track rare events, resulting in sparse and zero-inflated time series.

• Extreme right skew: Latency measurements in distributed systems exhibit positive, heavy-tailed distributions with extreme values at high percentiles.

• Dynamic, nonstationary systems: The behavior of monitored systems changes frequently due to code deployments, infrastructure scaling, feature flag management, and other configuration changes, as well as external factors like seasonality and user-behavior-driven trends. Some time series, such as those monitoring fleet deployments, can also have a very low variance, exhibiting a piecewise-constant shape.

• High-cardinality multivariate data: Monitoring large fleets of ephemeral cloud infrastructure such as virtual machines (VMs), containers, serverless functions, etc. leads to high-cardinality data, with hundreds or thousands of individual time series variates, often with limited historical data for each group.

• Historical anomalies: Historical data often contains outliers and anomalies caused by performance regressions or production incidents.

Foundation models pre-trained on other domains struggle to generalize effectively to observability data due to these characteristics. To overcome this, we developed Toto, a state-of-the-art foundation model that excels at observability forecasting while also achieving top performance on standard open benchmarks.
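Two of the characteristics listed above, sparsity and right skew, are straightforward to quantify for a single series. The sketch below computes the fraction of exact zeros and the sample skewness (the third standardized moment, population form); the function name `describe_series` and the toy series are hypothetical illustrations, not Datadog data or tooling.

```python
from typing import Dict, Sequence


def describe_series(values: Sequence[float]) -> Dict[str, float]:
    """Return the zero fraction and the (population) skewness of a series."""
    if not values:
        raise ValueError("series must be non-empty")
    n = len(values)
    zero_fraction = sum(1 for v in values if v == 0) / n
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    # A constant series has zero variance, so skewness is undefined; report 0.0.
    skewness = m3 / m2 ** 1.5 if m2 > 0 else 0.0
    return {"zero_fraction": zero_fraction, "skewness": skewness}


if __name__ == "__main__":
    # Toy error counts: mostly zeros with one rare event (sparse, zero-inflated).
    print(describe_series([0.0] * 9 + [3.0]))
    # Toy latencies in ms: a stable baseline with one extreme tail value.
    print(describe_series([10.0, 11.0, 10.0, 12.0, 11.0, 10.0, 250.0]))
```

For the toy error-count series, 90% of points are zero and the skewness is 8/3, both far from what a symmetric, dense Gaussian-like series would show.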


:::info This paper is available on arXiv under CC BY 4.0 license.

:::
