Unseen Workload Optimization: The Two-Phase IA2 Approach

Table of Links

Abstract and 1. Introduction

-

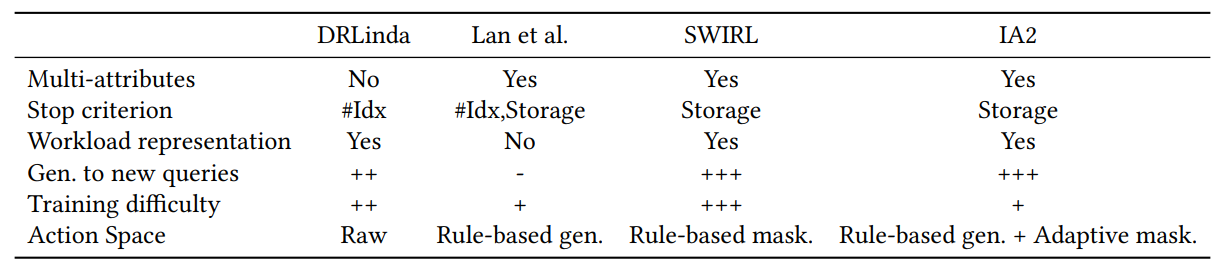

Related Works

2.1 Traditional Index Selection Approaches

2.2 RL-based Index Selection Approaches

-

Index Selection Problem

-

Methodology

4.1 Formulation of the DRL Problem

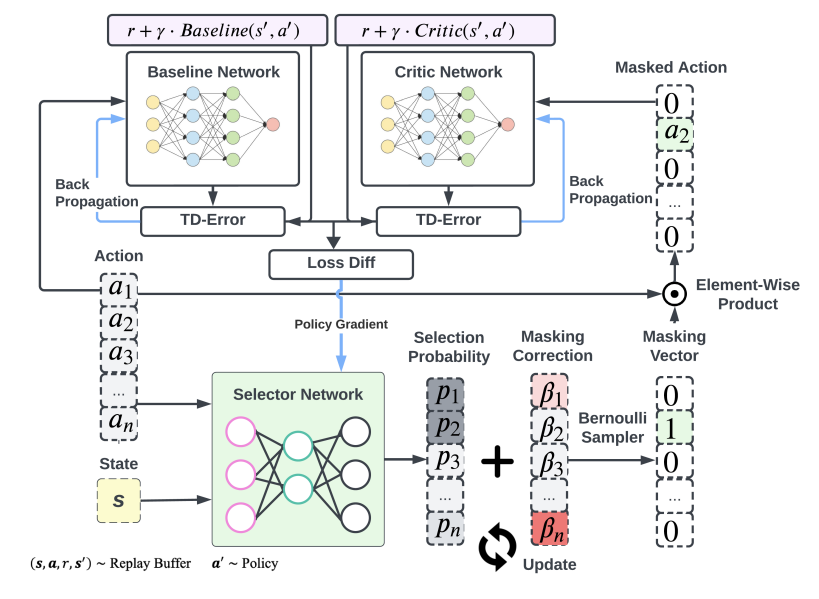

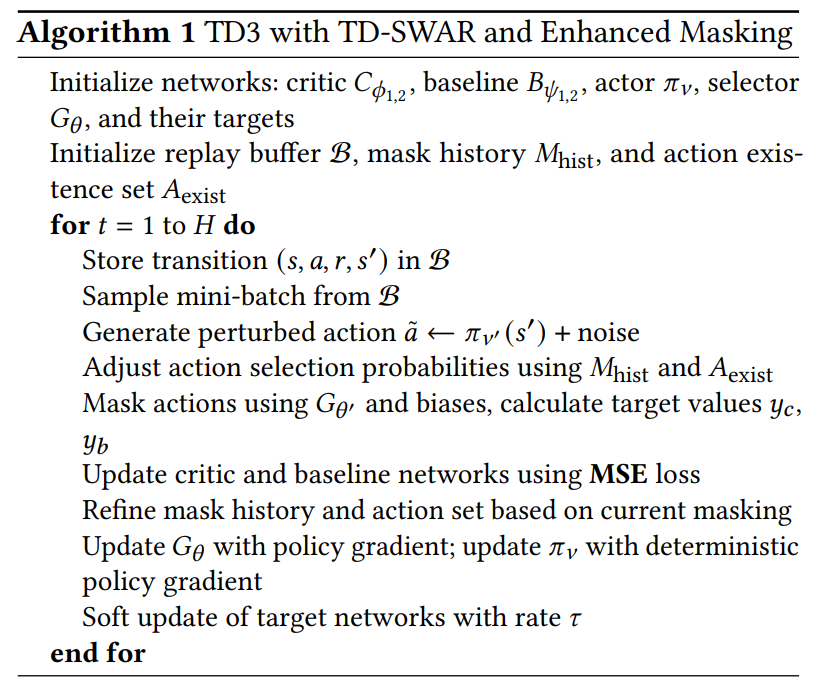

4.2 Instance-Aware Deep Reinforcement Learning for Efficient Index Selection

-

System Framework of IA2

5.1 Preprocessing Phase

5.2 RL Training and Application Phase

-

Experiments

6.1 Experimental Setting

6.2 Experimental Results

6.3 End-to-End Performance Comparison

6.4 Key Insights

-

Conclusion and Future Work, and References

5 System Framework of IA2

As shown in Figure 3, IA2 operates in two phases, using deep reinforcement learning to optimize index selection for both trained workloads and unseen scenarios. The figure depicts IA2’s workflow: the user’s input workload is processed to generate states and action pools for the downstream RL agents. These agents then make sequential decisions on index additions while adhering to budget constraints, reflecting IA2’s methodical approach to improving database performance through index selection.
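The workflow described above (workload in, action pool out, then sequential index additions under a budget) can be illustrated with a minimal sketch. This is not the authors' implementation: the `Candidate` type, the `build_action_pool` and `select_indexes` helpers, the (table, column) workload format, the fixed per-index size, and the storage-based budget are all hypothetical, and the `score` callback merely stands in for whatever a trained RL agent's policy would prefer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """A hypothetical index candidate (not a type from the paper)."""
    table: str
    columns: tuple
    size_mb: float


def build_action_pool(workload, default_size_mb=10.0):
    """Preprocessing stand-in: one single-column candidate per distinct
    (table, column) pair referenced by the workload. The fixed size is an
    assumption made only to keep the sketch self-contained."""
    pool = {}
    for table, column in workload:
        pool[(table, column)] = Candidate(table, (column,), default_size_mb)
    return list(pool.values())


def select_indexes(pool, budget_mb, score):
    """Add one index per step until no remaining candidate fits the budget.
    `score(candidate, chosen)` is a placeholder for the agent's policy."""
    chosen, used = [], 0.0
    remaining = list(pool)
    while True:
        # Only candidates that still fit within the budget are valid actions.
        valid = [c for c in remaining if used + c.size_mb <= budget_mb]
        if not valid:
            break
        best = max(valid, key=lambda c: score(c, chosen))
        chosen.append(best)
        used += best.size_mb
        remaining.remove(best)
    return chosen, used


# Toy workload: (table, column) pairs touched by queries (assumed format).
workload = [
    ("orders", "customer_id"),
    ("orders", "customer_id"),
    ("lineitem", "order_id"),
    ("orders", "date"),
]
pool = build_action_pool(workload)
# Arbitrary placeholder score; a real agent would use learned values.
chosen, used = select_indexes(pool, budget_mb=25.0,
                              score=lambda c, chosen: len(c.columns[0]))
```

In this toy run the budget admits only two of the three candidates, so the loop stops after the second addition. A learned policy would replace the placeholder `score` and would typically condition on a richer state than the list of indexes chosen so far.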


:::info Authors:

(1) Taiyi Wang, University of Cambridge, Cambridge, United Kingdom (Taiyi.Wang@cl.cam.ac.uk);

(2) Eiko Yoneki, University of Cambridge, Cambridge, United Kingdom (eiko.yoneki@cl.cam.ac.uk).

:::

:::info This paper is available on arXiv under the CC BY-NC-SA 4.0 Deed (Attribution-NonCommercial-ShareAlike 4.0 International) license.

:::
